If someone called you claiming to be a government official, would you know if their voice was real? This question became frighteningly relevant this week when a cybercriminal used social engineering and AI to impersonate Secretary of State Marco Rubio, fooling high-level officials with fake voice messages that sounded exactly like him. It raises a critical concern: would other world leaders be able to tell the difference, or would they fall for it too?

The Rubio Incident: A Wake-Up Call

In June 2025, an unknown attacker created a fake Signal account using the display name “Marco.Rubio@state.gov” and began contacting government officials with AI-generated voice messages that perfectly mimicked the Secretary of State’s voice and writing style. The imposter successfully reached at least five high-profile targets, including three foreign ministers, a U.S. governor, and a member of Congress.

The attack wasn’t just about pranks or publicity. U.S. authorities believe the culprit was “attempting to manipulate powerful government officials with the goal of gaining access to information or accounts.” This was a sophisticated social engineering attack that could have serious national and international security implications.

Why Voice Scams Are Exploding

The Rubio incident isn’t isolated. In May, someone breached the phone of White House Chief of Staff Susie Wiles and began placing calls and sending messages to senators, governors and business executives while pretending to be Wiles. These attacks are becoming more common because:

- AI voice cloning is now accessible to everyone: What once required Hollywood-level resources can now be done with free online tools

- Social media provides voice samples: Just a few seconds of someone’s voice from a video or podcast is enough

- People trust familiar voices: We’re psychologically wired to trust voices we recognize

- High-value targets are everywhere: From government officials to your own family members

It’s Not Just Politicians – Nobody Is Immune

While the Rubio case involved government officials, these same techniques are being used against everyday Americans. A recent McAfee study found that 59% of Americans say they or someone they know has fallen for an online scam in the last 12 months, with scam victims losing an average of $1,471. In 2024, our research revealed that 1 in 3 people believe they’ve experienced some kind of AI voice scam.

Some of the most devastating are “grandparent scams,” where criminals clone a grandchild’s voice to trick elderly relatives into sending money for fake emergencies. Deepfake scam victims have reported losses ranging from $250 to over half a million dollars.

Common AI voice scam scenarios:

- Family emergency calls: “Grandma, I’m in jail and need bail money”

- CEO fraud: Fake executives asking employees to transfer money

- Investment scams: Celebrities appearing to endorse get-rich-quick schemes

- Romance scams: Building fake relationships using stolen voices

From Mission Impossible to Mission Impersonated

One big reason deepfake scams are exploding? The tools are cheap, powerful, and incredibly easy to use. McAfee Labs tested 17 deepfake generators and found that many are available online for free or with low-cost trials. Some are marketed as “entertainment,” made for prank calls or spoofing celebrity voices on apps like WhatsApp. Others, however, are clearly built with scams in mind, offering realistic impersonations in just a few clicks.

Not long ago, creating a convincing deepfake took experts days or even weeks. Now? It can cost less than a latte and take less time to make than it takes to drink one. Simple drag-and-drop interfaces mean anyone, even with zero technical skills, can clone voices or faces.

Even more concerning: open-source libraries provide free tutorials and pre-trained models, helping scammers skip the hard parts entirely. While some of the more advanced tools require a powerful computer and graphics card, a decent setup costs under $1,000, a tiny price tag when you consider the payoff.

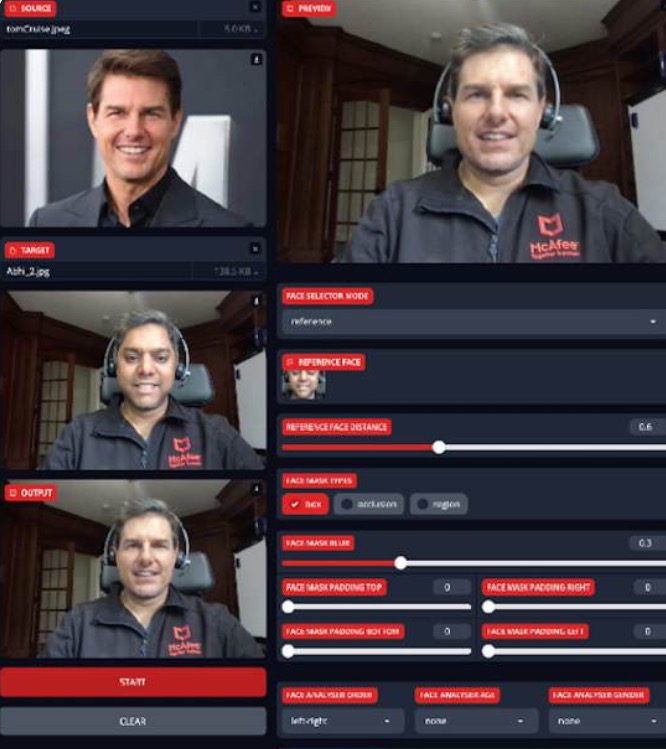

Globally, 87% of scam victims lose money, and 1 in 5 lose over $1,000. Just a handful of successful scams can easily pay for a scammer’s equipment and then some. In one McAfee test, for just $5 and 10 minutes of setup time, we created a real-time avatar that made us look and sound like Tom Cruise. Yes, it’s that easy, and that dangerous.

Figure 1. Demonstrating the creation of a highly convincing deepfake
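To make the “handful of scams” arithmetic concrete, here is a rough back-of-envelope sketch in Python. For illustration only, it assumes that the $1,471 average U.S. loss cited earlier applies to each victim and that a setup costs the full $1,000 upper bound. The helper names and the “handful” of five victims are hypothetical and do not come from McAfee’s research.

```python
import math

def break_even_victims(setup_cost: float, avg_loss_per_victim: float) -> int:
    """Hypothetical helper: fewest successful scams whose average take covers the setup cost."""
    if avg_loss_per_victim <= 0:
        raise ValueError("average loss per victim must be positive")
    return math.ceil(setup_cost / avg_loss_per_victim)

def net_after(victims: int, setup_cost: float, avg_loss_per_victim: float) -> float:
    """Hypothetical helper: scammer's take from `victims` scams minus the one-time setup cost."""
    return victims * avg_loss_per_victim - setup_cost

# Figures quoted in this article, combined here purely for illustration.
DECENT_SETUP_USD = 1_000   # "a decent setup costs under $1,000" (upper bound)
CRUISE_TEST_USD = 5        # McAfee's $5 real-time Tom Cruise avatar test
AVG_US_LOSS_USD = 1_471    # average loss per U.S. scam victim
HANDFUL = 5                # assumed size of "a handful" of successful scams

print(break_even_victims(DECENT_SETUP_USD, AVG_US_LOSS_USD))  # 1
print(break_even_victims(CRUISE_TEST_USD, AVG_US_LOSS_USD))   # 1
print(net_after(HANDFUL, DECENT_SETUP_USD, AVG_US_LOSS_USD))  # 6355
```

Under these assumptions, a single average-sized scam already covers even a $1,000 rig. Five such scams would leave $6,355 after equipment costs, which is the “and then some” in the paragraph above.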

Fighting Back: How McAfee’s Deepfake Detector Works

Recognizing the urgent need for protection, McAfee developed Deepfake Detector to fight AI-powered scams. McAfee’s Deepfake Detector is one of the most advanced consumer tools available today.

Key Features That Protect You

- Near-Instant Detection: McAfee Deepfake Detector uses advanced AI to alert you within seconds if a video has AI-generated audio, helping you quickly tell real content from fake in your browser.

- Privacy-First Design: The entire identification process happens directly on your PC, maximizing on-device processing to keep private user data off the cloud. McAfee does not collect or record a user’s audio in any way.

- Advanced AI Technology: McAfee’s AI detection models are transformer-based Deep Neural Network (DNN) models with a 96% accuracy rate.

- Seamless Integration: Deepfake Detector spots deepfakes for you right in your browser, without any extra clicks.

How It Would Have Helped in the Rubio Case

While McAfee’s Deepfake Detector is built to identify manipulated audio within videos, it points to the kind of technology that’s becoming essential in situations like this. If the impersonation attempt had taken the form of a video message posted or shared online, Deepfake Detector could have:

- Analyzed the video’s audio within seconds

- Flagged signs of AI-generated voice content

- Alerted the viewer that the message might be synthetic

- Helped prevent confusion or harm by prompting additional scrutiny

Our technology uses advanced AI detection techniques, including transformer-based deep neural networks, to help users tell what’s real from what’s fake in today’s era of AI-driven deception.

While the consumer-facing version of our technology doesn’t currently scan audio-only content like phone calls or voice messages, the Rubio case shows why AI detection tools like ours are more critical than ever, especially as threats evolve across video, audio, and beyond. It also shows why the cybersecurity industry must keep evolving at the speed of AI.

How To Protect Yourself: Practical Steps

While technology like McAfee’s Deepfake Detector provides powerful protection, you should also:

- Be skeptical of “urgent” requests

- Trust, but verify identity through other channels

- Ask questions only the real person would know, using secret phrases or safe words

- Be wary of requests for money or sensitive information

- Pause if the message stirs strong emotions (fear, panic, urgency) and ask yourself: would this person really say that?

The Future of Voice Security

The Rubio incident shows that no one is immune to AI voice scams. It also demonstrates why proactive detection technology is becoming essential. Knowledge is power, and that has never been truer than in today’s AI-driven world.

The race between AI-powered scams and AI-powered protection is intensifying. By staying informed, using advanced detection tools, and maintaining healthy skepticism, we can stay one step ahead of cybercriminals who are trying to literally steal our voices, and our trust.