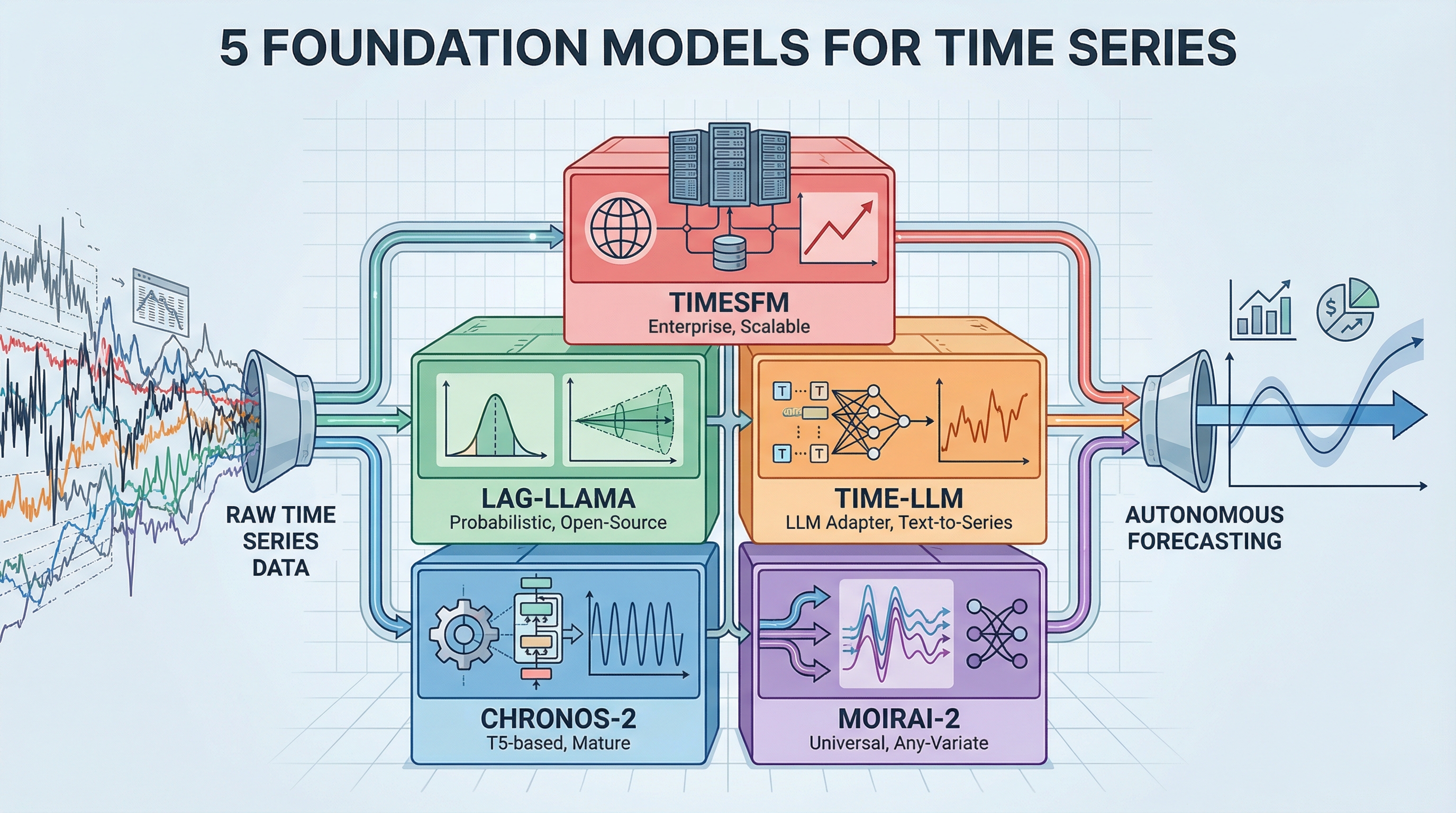

The 2026 Time Series Toolkit: 5 Foundation Models for Autonomous Forecasting

Image by Author

Introduction

Most forecasting work involves building a custom model for each dataset: fit an ARIMA here, tune an LSTM there, wrestle with Prophet's hyperparameters. Foundation models flip this around. They are pretrained on large amounts of time series data and can forecast new series without additional training, much as GPT can write about topics it was never explicitly trained on. This list covers the five foundation models you need to know for building production forecasting systems in 2026.

The shift from task-specific models to foundation model orchestration changes how teams approach forecasting. Instead of spending weeks tuning parameters and encoding domain expertise for each new dataset, teams start from pretrained models that already capture common temporal patterns such as trend and seasonality. The result is faster deployment, better generalization across domains, and lower compute costs, since there is no per-dataset training run and no extensive machine learning infrastructure to maintain.

1. Amazon Chronos-2 (The Production-Ready Foundation)

Amazon Chronos-2 is the most mature option for teams moving to foundation model forecasting. The Chronos family began as pretrained transformer models based on the T5 architecture that tokenize time series values through scaling and quantization, treating forecasting as a language modeling task. The October 2025 Chronos-2 release expanded capabilities to support univariate, multivariate, and covariate-informed forecasting.
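The original Chronos paper describes its tokenization as mean scaling (divide by the mean absolute value of the context) followed by uniform binning, so each value becomes a discrete token id. The sketch below illustrates that idea in plain Python. The bin count (4096) and clipping range ([-15, 15]) are illustrative assumptions, and none of these functions belong to the `chronos-forecasting` package.

```python
from typing import List, Sequence, Tuple


def mean_scale(context: Sequence[float]) -> Tuple[List[float], float]:
    """Divide every value by the mean absolute value of the context."""
    scale = sum(abs(x) for x in context) / len(context)
    if scale == 0.0:
        scale = 1.0  # avoid division by zero for an all-zero context
    return [x / scale for x in context], scale


def quantize(values: Sequence[float], num_bins: int, low: float, high: float) -> List[int]:
    """Map each scaled value to the index of a uniform bin covering [low, high]."""
    width = (high - low) / num_bins
    tokens = []
    for v in values:
        v = min(max(v, low), high)  # clip out-of-range values into the edge bins
        idx = int((v - low) / width)
        tokens.append(min(idx, num_bins - 1))  # v == high would otherwise overflow
    return tokens


def dequantize(tokens: Sequence[int], scale: float, num_bins: int,
               low: float, high: float) -> List[float]:
    """Map token ids back to bin centers, then undo the mean scaling."""
    width = (high - low) / num_bins
    return [(low + (t + 0.5) * width) * scale for t in tokens]


# Usage: round-trip a short history through tokens and back.
history = [10.0, 12.0, 11.0, 13.0]
scaled, s = mean_scale(history)
tokens = quantize(scaled, num_bins=4096, low=-15.0, high=15.0)
recovered = dequantize(tokens, s, 4096, -15.0, 15.0)
print(tokens)
print(recovered)
```

Each recovered value lands within half a bin width (times the scale) of the original, which is the price of turning a regression problem into next-token classification.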

The model delivers state-of-the-art zero-shot forecasting that consistently beats tuned statistical models out of the box, processing 300+ forecasts per second on a single GPU. With millions of downloads on Hugging Face and native integration with AWS tools like SageMaker and AutoGluon, Chronos-2 has the strongest documentation and community support among foundation models. The Chronos family comes in five sizes, from 9 million to 710 million parameters, so teams can balance performance against computational constraints. Check out the implementation on GitHub, review the technical approach in the research paper, or grab pretrained models from Hugging Face.

2. Salesforce MOIRAI-2 (The Universal Forecaster)

Salesforce MOIRAI-2 tackles the practical challenge of messy, real-world time series data through a universal forecasting architecture. This decoder-only transformer foundation model adapts to any data frequency, any number of variables, and any prediction length within a single framework. Its "Any-Variate Attention" mechanism adjusts to multivariate time series without requiring fixed input dimensions, which sets it apart from models designed for specific data structures.
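The original MOIRAI paper describes Any-Variate Attention as flattening all variates into one token sequence and telling them apart with variate identifiers plus a learned same-variate/different-variate attention bias, rather than a fixed input width. The sketch below shows only that bookkeeping under stated assumptions: non-overlapping patches of a hypothetical `patch_len`, and two fixed placeholder bias values standing in for learned ones. It is not Salesforce's implementation or a MOIRAI-2 API.

```python
from typing import Dict, List, Sequence, Tuple

Token = Tuple[int, int, List[float]]  # (variate_id, time_id, patch values)


def flatten_variates(series: Dict[str, Sequence[float]], patch_len: int) -> List[Token]:
    """Flatten any number of variates into a single sequence of patch tokens."""
    tokens: List[Token] = []
    for variate_id, name in enumerate(sorted(series)):
        values = list(series[name])
        # Non-overlapping patches; a trailing partial patch is dropped in this sketch.
        starts = range(0, len(values) - patch_len + 1, patch_len)
        for time_id, start in enumerate(starts):
            tokens.append((variate_id, time_id, values[start:start + patch_len]))
    return tokens


def same_variate_mask(tokens: List[Token]) -> List[List[bool]]:
    """True where the query token and key token come from the same variate."""
    ids = [variate_id for variate_id, _, _ in tokens]
    return [[q == k for k in ids] for q in ids]


def attention_bias(mask: List[List[bool]], same_bias: float = 0.5,
                   diff_bias: float = -0.5) -> List[List[float]]:
    """Additive attention bias: one scalar for same-variate pairs, one for the rest.

    A real model would learn these two scalars; here they are fixed placeholders.
    Because the bias depends only on same/different, the number and order of
    variates never need to be fixed in advance.
    """
    return [[same_bias if m else diff_bias for m in row] for row in mask]


# Usage: two variates of different lengths share one sequence.
data = {"load": [1.0, 2, 3, 4, 5, 6, 7, 8], "temp": [20.0, 21, 22, 23, 24, 25]}
toks = flatten_variates(data, patch_len=2)
bias = attention_bias(same_variate_mask(toks))
for variate_id, time_id, patch in toks:
    print(variate_id, time_id, patch)
```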

MOIRAI-2 ranks highly on the GIFT-Eval leaderboard among non-data-leaking models, with strong performance on both in-distribution and zero-shot tasks. Training on the LOTSA dataset (27 billion observations across nine domains) gives the model strong generalization to new forecasting scenarios. Development is fully open source and actively maintained, which makes it valuable for complex, real-world applications involving multiple variables and irregular frequencies. The project's GitHub repository includes implementation details, while the technical paper and Salesforce blog post explain the universal forecasting approach. Pretrained models are on Hugging Face.

3. Lag-Llama (The Open-Source Backbone)

Lag-Llama brings probabilistic forecasting to foundation models through a decoder-only transformer inspired by Meta's LLaMA architecture. Unlike models that produce only point forecasts, Lag-Llama generates a full probability distribution for each prediction step, giving the quantified uncertainty intervals that decision-making processes need. The model uses lagged values of the series as covariates and shows strong few-shot learning when fine-tuned on small datasets.
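Two ideas from this paragraph can be made concrete in a few lines: building lagged values as covariates, and summarizing sampled forecast paths into per-step uncertainty intervals. The sketch below assumes a hypothetical lag set and uses Gaussian random draws as stand-ins for the samples a probabilistic model would return; it is not the Lag-Llama API.

```python
import random
from typing import List, Sequence, Tuple


def lag_features(series: Sequence[float], lags: Sequence[int]) -> List[List[float]]:
    """Row for step t holds [y[t - lag] for lag in lags].

    Steps without enough history for the largest lag are skipped.
    """
    max_lag = max(lags)
    return [[series[t - lag] for lag in lags] for t in range(max_lag, len(series))]


def quantile(sorted_vals: List[float], q: float) -> float:
    """Linearly interpolated q-quantile of an already sorted list."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])


def prediction_intervals(sample_paths: List[List[float]], lower: float = 0.1,
                         upper: float = 0.9) -> List[Tuple[float, float, float]]:
    """Per-step (lower, median, upper) summaries across sampled forecast paths."""
    horizon = len(sample_paths[0])
    intervals = []
    for h in range(horizon):
        values = sorted(path[h] for path in sample_paths)
        intervals.append((quantile(values, lower), quantile(values, 0.5),
                          quantile(values, upper)))
    return intervals


# Usage: lag covariates for a toy series, then an 80% interval per forecast step.
history = [float(x) for x in range(20)]
features = lag_features(history, lags=[1, 2, 7])  # hypothetical lag set
random.seed(0)
# Stand-in samples: uncertainty widens with the forecast step h.
paths = [[random.gauss(20.0 + h, 1.0 + h) for h in range(3)] for _ in range(500)]
for step, (lo, mid, hi) in enumerate(prediction_intervals(paths), start=1):
    print(f"step {step}: 80% interval [{lo:.1f}, {hi:.1f}], median {mid:.1f}")
```

Summarizing samples per step, rather than reporting one number, is what lets a downstream planner reason about worst and best cases.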

Its fully open-source code and permissive license make Lag-Llama accessible to teams of any size, and its ability to run on either CPU or GPU removes infrastructure barriers. Academic backing through publications at major machine learning conferences adds validation. For teams that prioritize transparency, reproducibility, and probabilistic outputs over raw performance metrics, Lag-Llama offers a reliable foundation model backbone. The GitHub repository contains implementation code, and the research paper details the probabilistic forecasting methodology.

4. Time-LLM (The LLM Adapter)

Time-LLM takes a different approach: it turns existing large language models into forecasting systems without modifying the original model weights. This reprogramming framework maps time series patches onto text prototype representations, letting frozen LLMs like GPT-2, LLaMA, or BERT interpret temporal patterns. Its "Prompt-as-Prefix" technique injects domain knowledge through natural language, so teams can reuse their existing language model infrastructure for forecasting tasks.
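The Time-LLM paper describes two preprocessing steps ahead of the frozen LLM: splitting the input series into patches, and prepending a text prompt containing dataset context, a task instruction, and input statistics. The sketch below covers only those two steps. The patch length, stride, prompt template, and the simple first-versus-last trend rule are hypothetical choices, and the reprogramming layer (cross-attention from patch embeddings to text prototypes) and the LLM itself are omitted.

```python
import statistics
from typing import List, Sequence


def make_patches(series: Sequence[float], patch_len: int, stride: int) -> List[List[float]]:
    """Slice the series into patches of patch_len values, starting every stride steps."""
    return [list(series[s:s + patch_len])
            for s in range(0, len(series) - patch_len + 1, stride)]


def build_prompt_prefix(description: str, horizon: int, series: Sequence[float]) -> str:
    """Compose a text prefix with domain context, the task, and simple input statistics."""
    # Simplified trend rule for illustration: compare the last value to the first.
    trend = "upward" if series[-1] > series[0] else "downward or flat"
    return (
        f"Dataset description: {description} "
        f"Task: forecast the next {horizon} steps given the previous {len(series)} steps. "
        f"Input statistics: min {min(series):.2f}, max {max(series):.2f}, "
        f"median {statistics.median(series):.2f}, trend {trend}."
    )


# Usage: four days of a toy hourly pattern.
history = [float(x % 24) for x in range(96)]
patches = make_patches(history, patch_len=16, stride=8)  # hypothetical sizes
prompt = build_prompt_prefix("Hourly electricity load for one building.", 24, history)
print(len(patches), "patches")
print(prompt)
```

Overlapping patches (stride smaller than patch length) give the model more tokens with shared context, at the cost of a longer input sequence.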

This adapter approach works well for organizations already running LLMs in production, because it reuses an existing LLM backbone instead of requiring a separately deployed forecasting model; only the lightweight input reprogramming and output projection layers are trained. The framework supports multiple backbone models, which makes it easy to switch between LLMs as newer versions become available. Time-LLM represents a cross-modal approach to forecasting, where general-purpose language understanding transfers to temporal pattern recognition. Access the implementation through the GitHub repository, or review the methodology in the research paper.

5. Google TimesFM (The Big Tech Standard)

Google TimesFM provides enterprise-grade foundation model forecasting backed by one of the largest technology research organizations. This patch-based, decoder-only model, pretrained on roughly 100 billion real-world time points drawn from sources such as Google Trends and Wikipedia page views, delivers strong zero-shot performance across multiple domains with minimal configuration. The model design prioritizes production deployment at scale, reflecting its origins in Google's internal forecasting workloads.
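The TimesFM paper describes a decoder-only model that reads the context as fixed-length input patches and emits a longer output patch per step, so long horizons need fewer autoregressive calls. The loop below sketches that control flow with a placeholder model. The 32/128 patch lengths follow the values reported for the original release but should be treated as assumptions, and `last_value_model` is a hypothetical stand-in, not the TimesFM API.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical interface: map the input patches seen so far to the next output patch.
PatchModel = Callable[[List[List[float]]], List[float]]


def decode_steps(horizon: int, output_patch: int) -> int:
    """Number of autoregressive calls needed to cover the horizon."""
    return math.ceil(horizon / output_patch)


def patched_forecast(context: Sequence[float], horizon: int, model: PatchModel,
                     input_patch: int = 32, output_patch: int = 128) -> List[float]:
    if len(context) < input_patch:
        raise ValueError("context must contain at least one full input patch")
    history = list(context)
    forecast: List[float] = []
    while len(forecast) < horizon:
        n_patches = len(history) // input_patch
        # Drop the oldest values that do not fill a whole input patch.
        usable = history[len(history) - n_patches * input_patch:]
        patches = [usable[i:i + input_patch] for i in range(0, len(usable), input_patch)]
        step = model(patches)[:output_patch]
        if not step:
            raise ValueError("model returned an empty output patch")
        forecast.extend(step)
        history.extend(step)  # decoder-only: predictions become context for the next call
    return forecast[:horizon]


def last_value_model(patches: List[List[float]]) -> List[float]:
    """Placeholder model: repeat the most recent value for a full output patch."""
    return [patches[-1][-1]] * 128


# Usage: a 200-step horizon needs 2 calls with 128-step output patches,
# versus 7 calls if each step only produced 32 values.
out = patched_forecast([float(i) for i in range(64)], 200, last_value_model)
print(len(out), decode_steps(200, 128), decode_steps(200, 32))
```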

TimesFM is battle-tested through extensive use in Google's production environments, which builds confidence for teams deploying foundation models in enterprise scenarios. The model balances performance and efficiency, avoiding the computational overhead of larger alternatives while maintaining competitive accuracy. Ongoing support from Google Research means continued development and maintenance, making TimesFM a reliable choice for teams seeking enterprise-grade foundation model capabilities. Access the model through the GitHub repository, review the architecture in the technical paper, or read the implementation details in the Google Research blog post.

Conclusion

Foundation models turn time series forecasting from a model training problem into a model selection problem. Chronos-2 offers production maturity, MOIRAI-2 handles complex multivariate data, Lag-Llama provides probabilistic outputs, Time-LLM leverages existing LLM infrastructure, and TimesFM delivers enterprise reliability. Evaluate models against your specific needs for uncertainty quantification, multivariate support, infrastructure constraints, and deployment scale. Start with zero-shot evaluation on representative datasets to identify which foundation model fits your forecasting needs before investing in fine-tuning or custom development.
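A minimal sketch of such a zero-shot check: backtest each candidate on the last few windows of a representative series and score it with MASE (mean absolute scaled error), the forecast's mean absolute error divided by the in-sample error of a seasonal naive forecast, a common choice in forecasting benchmarks. The `forecaster(history, horizon)` signature is a hypothetical wrapper you would write around each model; nothing here calls a real model API.

```python
from typing import Callable, List, Sequence

# Hypothetical interface: given a history and a horizon, return `horizon` point forecasts.
Forecaster = Callable[[List[float], int], List[float]]


def mase(history: Sequence[float], actual: Sequence[float],
         forecast: Sequence[float], season: int = 1) -> float:
    """Forecast MAE divided by the in-sample MAE of a seasonal naive forecast."""
    naive_errors = [abs(history[t] - history[t - season]) for t in range(season, len(history))]
    if not naive_errors:
        raise ValueError("history must be longer than the seasonal period")
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("history is constant at this seasonality; MASE is undefined")
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def backtest_mase(series: Sequence[float], forecaster: Forecaster, horizon: int,
                  n_windows: int, season: int = 1) -> float:
    """Average MASE over the last n_windows consecutive, non-overlapping test windows."""
    scores = []
    for w in range(n_windows, 0, -1):
        cut = len(series) - w * horizon
        history = list(series[:cut])
        actual = list(series[cut:cut + horizon])
        scores.append(mase(history, actual, forecaster(history, horizon), season))
    return sum(scores) / len(scores)


def naive_forecaster(history: List[float], horizon: int) -> List[float]:
    """Baseline: repeat the last observed value."""
    return [history[-1]] * horizon


# Usage: add wrapped foundation models to `candidates` and compare scores.
series = [float(i) for i in range(1, 11)]
candidates = {"naive": naive_forecaster}
for name, fn in candidates.items():
    print(name, backtest_mase(series, fn, horizon=2, n_windows=2))
```

A score below 1 means the candidate's errors were smaller, on average, than the naive forecast's in-sample errors on that series.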