As malicious deepfakes continue to flood our screens with disinformation during this election year, we’ve launched our 2024 Election AI Toolkit to help voters protect themselves and their vote.

Our own research shows just how deep the problem runs. More than six in ten Americans (63%) said they’ve seen a deepfake in the past 60 days. As for the impact of those deepfakes, nearly half (48%) of those who’ve seen one said it has influenced who they’ll vote for in the upcoming election.

In all, we found that 91% of Americans said they’re concerned that AI-generated disinformation could interfere with public perception of candidates, their platforms, or even election results.

The explosive rise of disinformation, powered by AI tools

Disinformation has long played a shady role in politics. George Washington fell victim to it in 1777, when forged letters painted him as a British sympathizer, disinformation that followed him into the first presidency. [i]

And it has appeared on the internet for some time too. For years, creating disinformation online called for plenty of manual labor. Writers, designers, and developers all put in hours writing copy, creating images, and building sites to spread it. Now, it takes just one person mere minutes. The arrival of cheap and free AI tools has put disinformation into overdrive.

We’ve seen an explosive rise in malicious deepfakes in the run-up to Election Day.

- In just the first three months of 2024, the volume of deepfakes in the U.S. surged by 303% compared to the start of 2023. [ii]

- Fake news sites loaded with AI-generated photos and articles have grown from an estimated 49 sites in May 2023 to more than 700 in February 2024. [iii]

- Follow-up research pushes the estimated number of AI-powered fake news sites higher still. In June, analysts discovered 1,265 sites targeting U.S. internet users with fake news, many posing as “local” news outlets. That figure surpasses the number of local newspapers still running in the U.S., at just 1,213 outlets. [iv]

- A British nonprofit dedicated to fighting hate speech and extremism online found that AI-generated disinformation has been growing by an average of 130% per month on X (formerly Twitter) over the past year. [v]

With polling in some states already underway, we can expect the glut of malicious deepfakes to continue. They may:

- Target politicians and their families, using deepfakes that cast them in a bad light.

- Create other deepfakes, known as softfakes, that soften the image of a candidate to make them seem more appealing.

- Spread phony polling information that prevents voters from getting to polling places in a timely way, or at all.

- Use deepfakes to skew polling results, all with the aim of influencing voters.

- Use other deepfakes to undermine confidence in the electoral process.

With that, it’s little surprise that nearly 60% of Americans say that they’re extremely or very concerned about AI’s influence on the election. [vi] Deepfakes have simply become pervasive.

Disinformation isn’t new. Using the power of AI to spread it is.

AI has given new life to the old problem of disinformation and fake news. In many ways, it’s supercharged it.

It’s done so in two primary ways:

- Bogus articles and doctored photos once took time and effort to cook up. Now, they take seconds.

- AI tools can effectively clone voices and people to create convincing-looking deepfakes in digital form.

In all, it’s easier, cheaper, and quicker than ever to create malicious deepfakes with AI tools. On top of that, the image and sound quality of deepfakes continues to improve. As a result, it’s only getting tougher to tell the difference between what’s real and what’s fake.

Taken together, this has left voters in the lurch. Who and what can they trust online?

You’re not powerless in the face of malicious AI. Quite the opposite.

Even as the creators of malicious AI-generated content have gotten cagier in their ways, their work still gives off signs of a fake. However, spotting this malicious content calls for extra effort on everyone’s part when getting their news or scrolling their feeds online. That means scrutinizing what we consume and relying on trusted fact-checking resources to get at the truth. It also means using AI as an ally, with AI tools that detect AI deepfakes in real time.

Our Election Year Toolkit will help you do just that. It covers the basics of fake news and malicious AI deepfakes, how to spot them, and more. As you’ll see, it’s a topic both broad and deep, and we explore it in a step-by-step way that helps make sense of it all for voters.

Our role in the fight against malicious deepfakes.

Sharing knowledge about AI with voters is one of several steps we’ve taken to fight against malicious deepfakes.

In a first-of-its-kind collaboration, we’ve teamed up with Yahoo News to bolster the credibility of images on the Yahoo News platform. This collaboration integrates McAfee’s sophisticated deepfake image detection technology into Yahoo News’s content quality system, offering readers an added layer of trust.

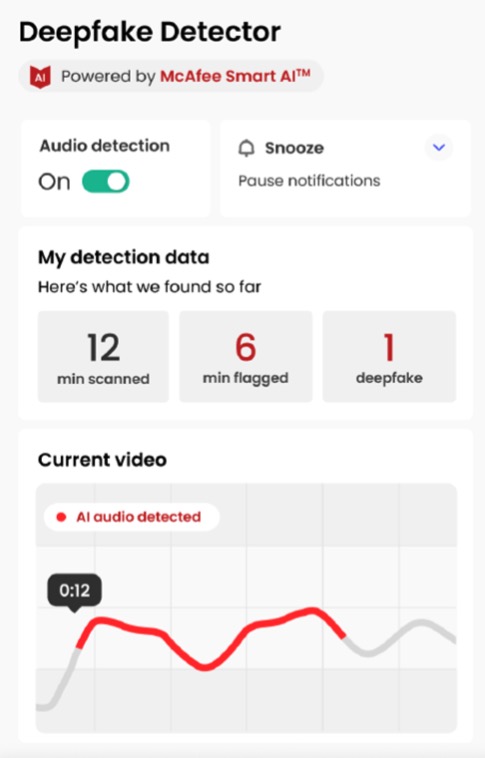

And we’re rolling out our McAfee Deepfake Detector through our partners too. It checks audio being played through your browser to determine whether the content you’re watching or listening to contains AI-generated audio. When AI audio is detected, users are notified in seconds.

Bad actors put an entirely new spin on disinformation with malicious deepfakes.

AI makes disinformation look and sound far more credible than ever. And bad actors can produce it on a tremendous scale, thanks to the ease and speed of AI tools. In an election year, that calls for extra scrutiny on our collective part, and our 2024 Election AI Toolkit can help. It covers how to spot a deepfake, how deepfakes spread, and several fact-checking resources that you can rely on when that bit of news you stumble across seems a little sketchy.

Download the full McAfee AI Election Toolkit here

References

[i] https://www.politifact.com/article/2022/feb/21/when-george-washington-fought-misinformation/

[v] https://techcrunch.com/2024/03/06/political-deepfakes-are-spreading-like-wildfire-thanks-to-genai/