With iOS 26, Apple introduces the Foundation Models framework, a privacy-first, on-device AI toolkit that brings the same language models behind Apple Intelligence right into your apps. This framework is available across Apple platforms, including iOS, macOS, iPadOS, and visionOS, and it gives developers a streamlined Swift API for integrating advanced AI features directly into their apps.

Unlike cloud-based LLMs such as ChatGPT or Claude, which run on powerful servers and require internet access, Apple's LLM is designed to run entirely on-device. This architectural difference gives it a unique advantage: all data stays on the user's device, ensuring privacy, lower latency, and offline access.

This framework opens the door to a whole range of intelligent features you can build right out of the box. You can generate and summarize content, classify information, and even build in semantic search and personalized learning experiences. Whether you want to create a smart in-app guide, generate unique content for each user, or add a conversational assistant, you can now do it with just a few lines of Swift code.

In this tutorial, we'll explore the Foundation Models framework. You'll learn what it is, how it works, and how to use it to generate content with Apple's on-device language models.

To follow along, make sure you have Xcode 26 installed and that your Mac is running macOS Tahoe, which is required to access the Foundation Models framework.

Ready to get started? Let's dive in.

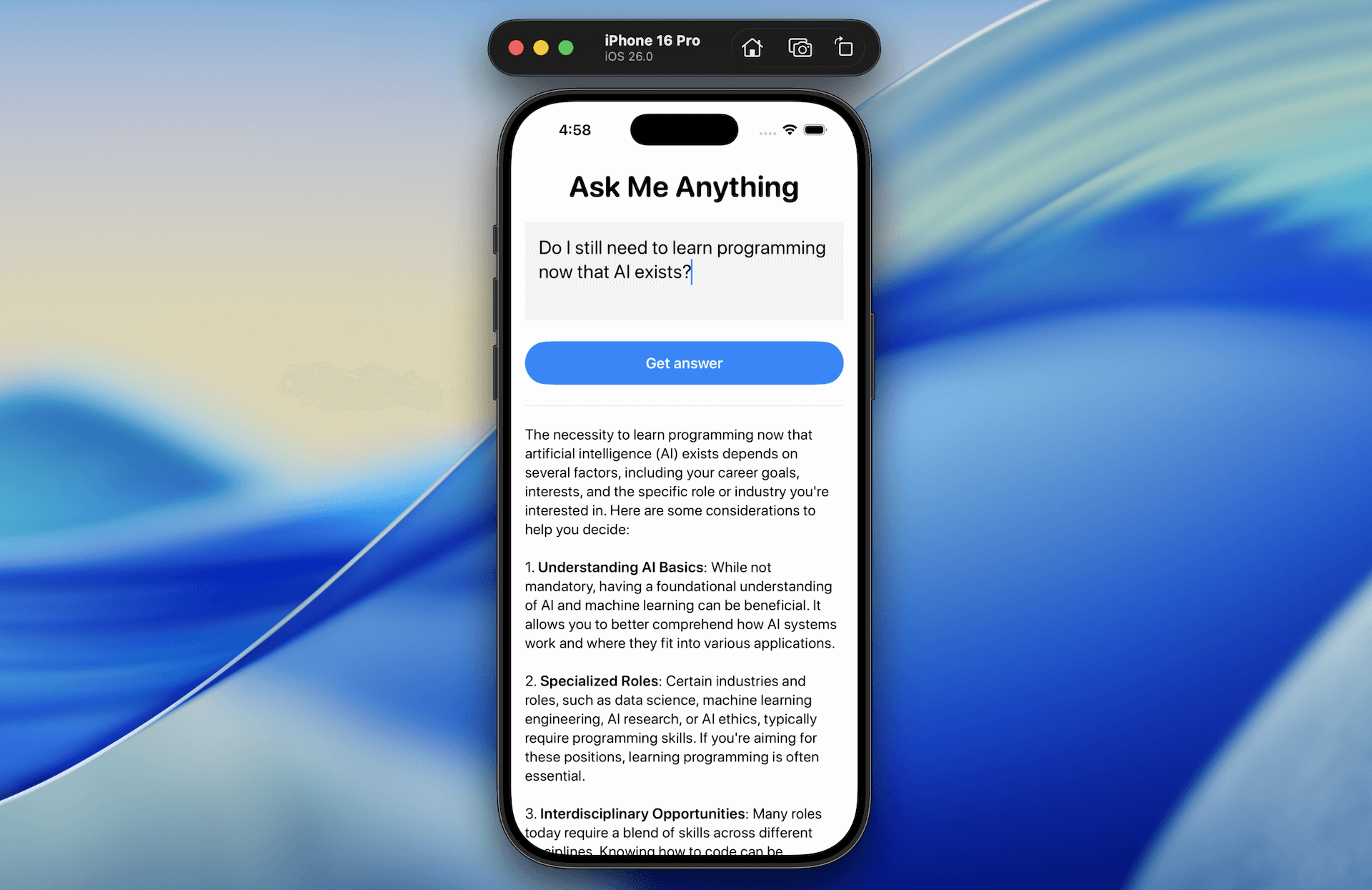

The Demo App: Ask Me Anything

It's always great to learn new frameworks or APIs by building a demo app, and that's exactly what we'll do in this tutorial. We'll create a simple yet powerful app called Ask Me Anything to explore how Apple's new Foundation Models framework works in iOS 26.

The app lets users type in any question and provides an AI-generated response, all processed on-device using Apple's built-in LLM.

By building this demo app, you'll learn how to integrate the Foundation Models framework into a SwiftUI app. You'll also see how to create prompts and capture both full and partial generated responses.

Using the Default System Language Model

Apple provides a built-in model called SystemLanguageModel, which gives you access to the on-device foundation model that powers Apple Intelligence. For general-purpose use, you can access the base version of this model through the default property. It's optimized for text generation tasks and serves as a great starting point for building features like content generation or question answering in your app.

To use it in your app, you'll first need to import the FoundationModels framework:

```swift
import FoundationModels
```

With the framework imported, you can get a handle on the default system language model. Here's the sample code to do that:

```swift
import SwiftUI
import FoundationModels

struct ContentView: View {
    // The on-device model that powers Apple Intelligence
    private var model = SystemLanguageModel.default

    var body: some View {
        // Only show the main UI when the model can actually be used
        switch model.availability {
        case .available:
            mainView
        case .unavailable(let reason):
            Text(unavailableMessage(reason))
        }
    }

    private var mainView: some View {
        ScrollView {
            // ...
        }
    }

    // Maps each unavailability reason to a user-facing message
    private func unavailableMessage(_ reason: SystemLanguageModel.Availability.UnavailableReason) -> String {
        switch reason {
        case .deviceNotEligible:
            return "The device is not eligible for using Apple Intelligence."
        case .appleIntelligenceNotEnabled:
            return "Apple Intelligence is not enabled on this device."
        case .modelNotReady:
            return "The model isn't ready because it's downloading or because of other system reasons."
        @unknown default:
            return "The model is unavailable for an unknown reason."
        }
    }
}
```

Since Foundation Models only works on devices with Apple Intelligence enabled, it's important to verify that the model is available before using it. You can check its readiness by inspecting the availability property.
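The availability check isn't limited to SwiftUI views. Below is a minimal sketch of the same logic as plain functions, for example to decide whether to offer an AI-powered feature at all. The helper names `modelStatusDescription(for:)` and `canUseOnDeviceModel()` are hypothetical, the `@available` annotations assume your deployment target may be older than iOS 26 / macOS 26, and `isAvailable` is the framework's convenience Boolean on `SystemLanguageModel`.

```swift
import FoundationModels

// Hypothetical helper: describes the model's state for logging or UI.
@available(iOS 26.0, macOS 26.0, *)
func modelStatusDescription(for model: SystemLanguageModel = .default) -> String {
    switch model.availability {
    case .available:
        return "The on-device model is ready."
    case .unavailable(let reason):
        // Interpolating the reason gives a debug-friendly description.
        return "The on-device model is unavailable (\(reason))."
    }
}

// Hypothetical helper: a quick yes/no gate for showing AI features.
@available(iOS 26.0, macOS 26.0, *)
func canUseOnDeviceModel() -> Bool {
    SystemLanguageModel.default.isAvailable
}
```

In a view, you might call `canUseOnDeviceModel()` to hide an "Ask" button entirely, and use `modelStatusDescription()` when you want to tell the user why the feature is missing.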

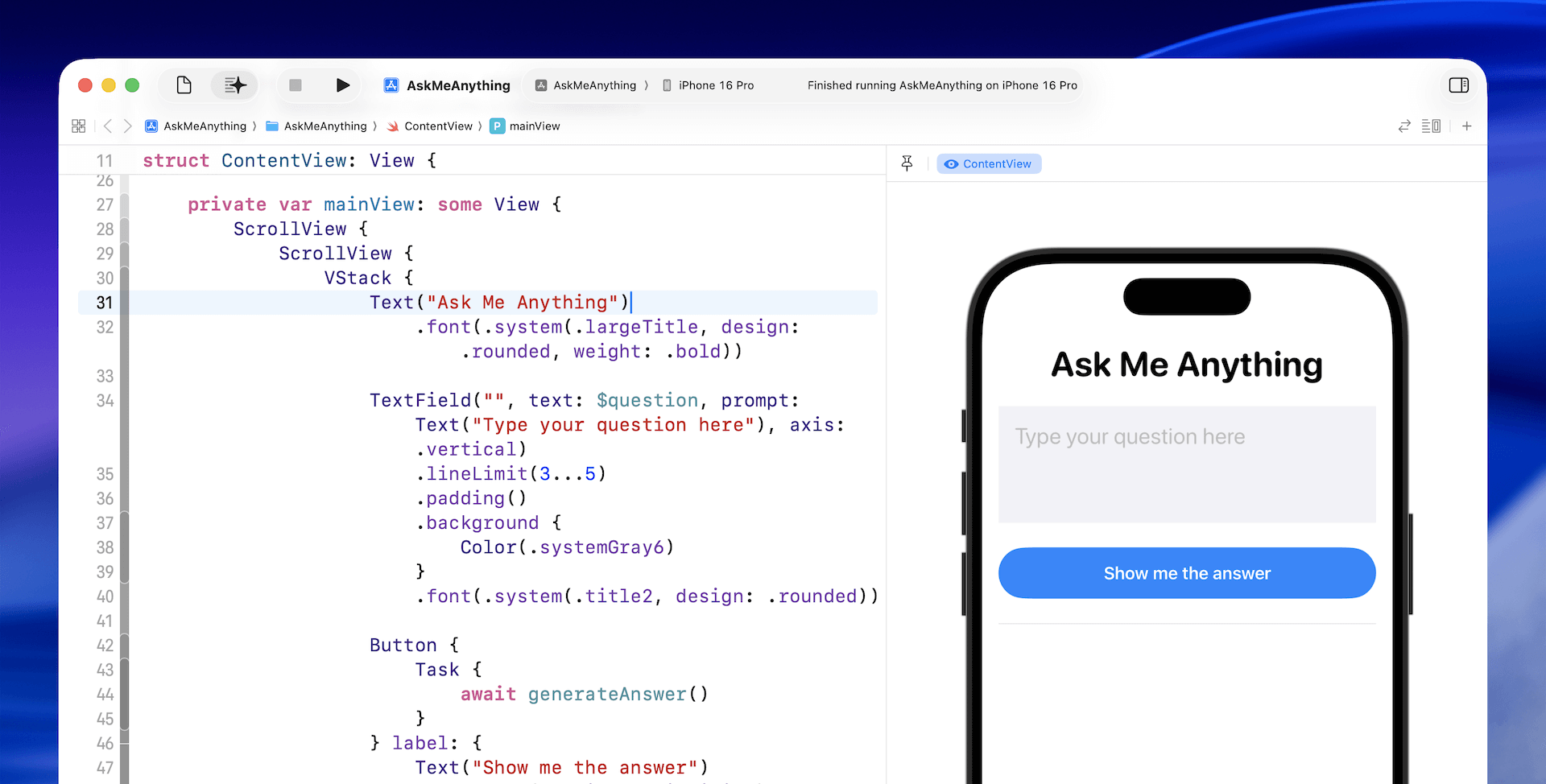

Implementing the UI

Let's continue building the UI of mainView. We first add two state variables to store the user's question and the generated answer:

```swift
@State private var answer: String = ""
@State private var question: String = ""
```

For the UI implementation, update mainView like this:

```swift
private var mainView: some View {
    ScrollView {
        VStack {
            Text("Ask Me Anything")
                .font(.system(.largeTitle, design: .rounded, weight: .bold))

            // Multi-line input that grows from 3 to 5 lines
            TextField("", text: $question, prompt: Text("Type your question here"), axis: .vertical)
                .lineLimit(3...5)
                .padding()
                .background {
                    Color(.systemGray6)
                }
                .font(.system(.title2, design: .rounded))

            Button {
                // The action is added in the next section
            } label: {
                Text("Get answer")
                    .frame(maxWidth: .infinity)
                    .font(.headline)
            }
            .buttonStyle(.borderedProminent)
            .controlSize(.extraLarge)
            .padding(.top)

            // Thin divider between the question and the answer
            Rectangle()
                .frame(height: 1)
                .foregroundColor(Color(.systemGray5))
                .padding(.vertical)

            // LocalizedStringKey renders Markdown in the generated answer
            Text(LocalizedStringKey(answer))
                .font(.system(.body, design: .rounded))
        }
        .padding()
    }
}
```

The implementation is pretty straightforward: I just added a touch of basic styling to the text field and button.

Generating Responses with the Language Model

Now we've come to the core part of the app: sending the question to the model and generating the response. To handle this, we create a new function called generateAnswer():

```swift
private func generateAnswer() async {
    // A fresh session for every question
    let session = LanguageModelSession()

    do {
        let response = try await session.respond(to: question)
        answer = response.content
    } catch {
        answer = "Failed to answer the question: \(error.localizedDescription)"
    }
}
```

As you can see, it only takes a few lines of code to send a question to the model and receive a generated response. First, we create a session using the default system language model. Then, we pass the user's question, which is called a prompt, to the model using the respond method.

The call is asynchronous since it usually takes a few seconds (or even longer) for the model to generate the response. Once the response is ready, we can access the generated text through the content property and assign it to answer for display.

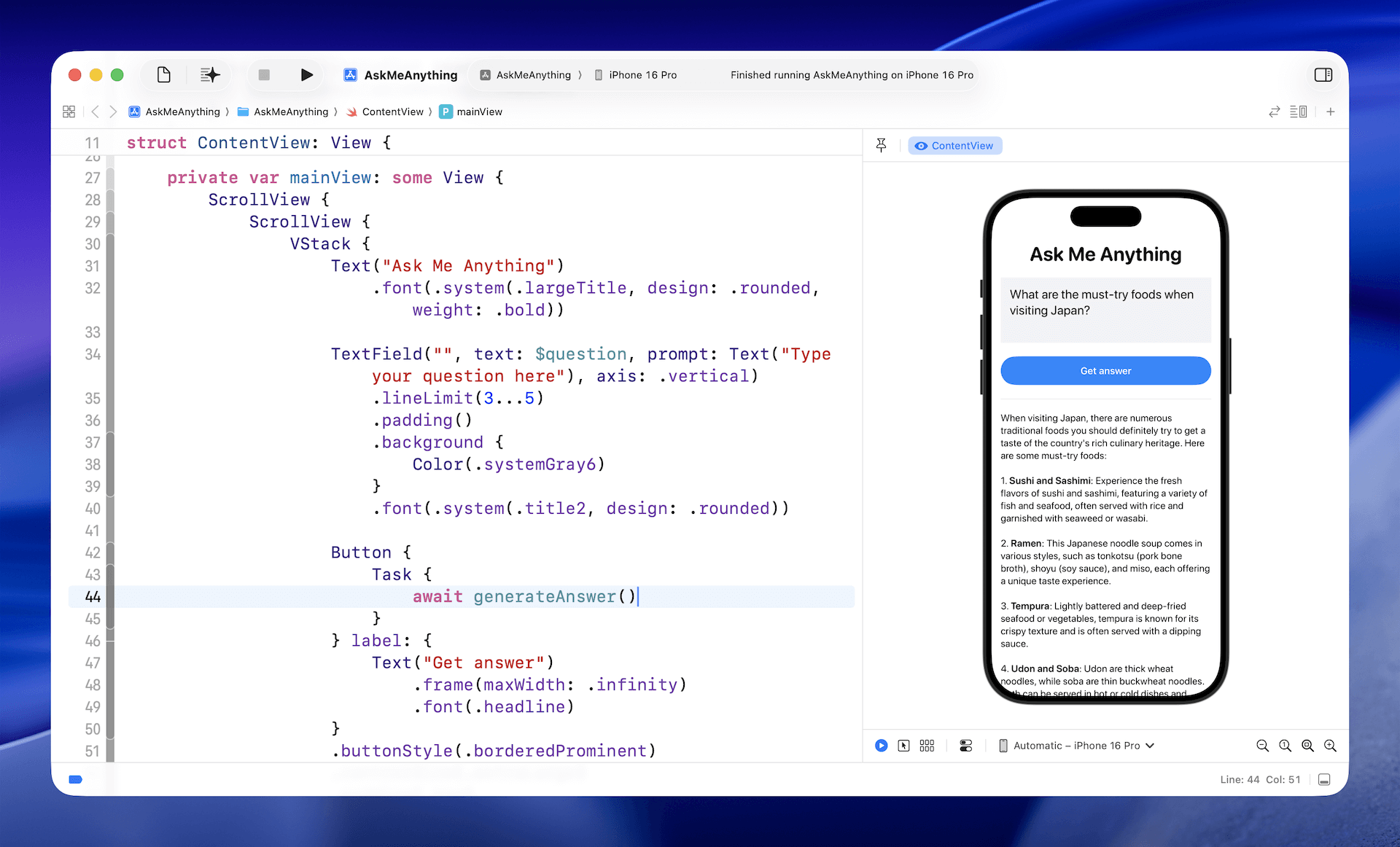

To invoke this new function, we also need to update the closure of the "Get answer" button like this:

```swift
Button {
    // Bridge from the synchronous button action into async code
    Task {
        await generateAnswer()
    }
} label: {
    Text("Get answer")
        .frame(maxWidth: .infinity)
        .font(.headline)
}
```

You can test the app directly in the preview pane or run it in the simulator. Just type in a question, wait a few seconds, and the app will generate a response for you.

Reusing the Session

The code above creates a new session for each question, which works well when the questions are unrelated.

But what if you want users to ask follow-up questions and keep the context? In that case, you can simply reuse the same session each time you call the model.

For our demo app, we can move the session variable out of the generateAnswer() function and turn it into a state variable:

```swift
@State private var session = LanguageModelSession()
```

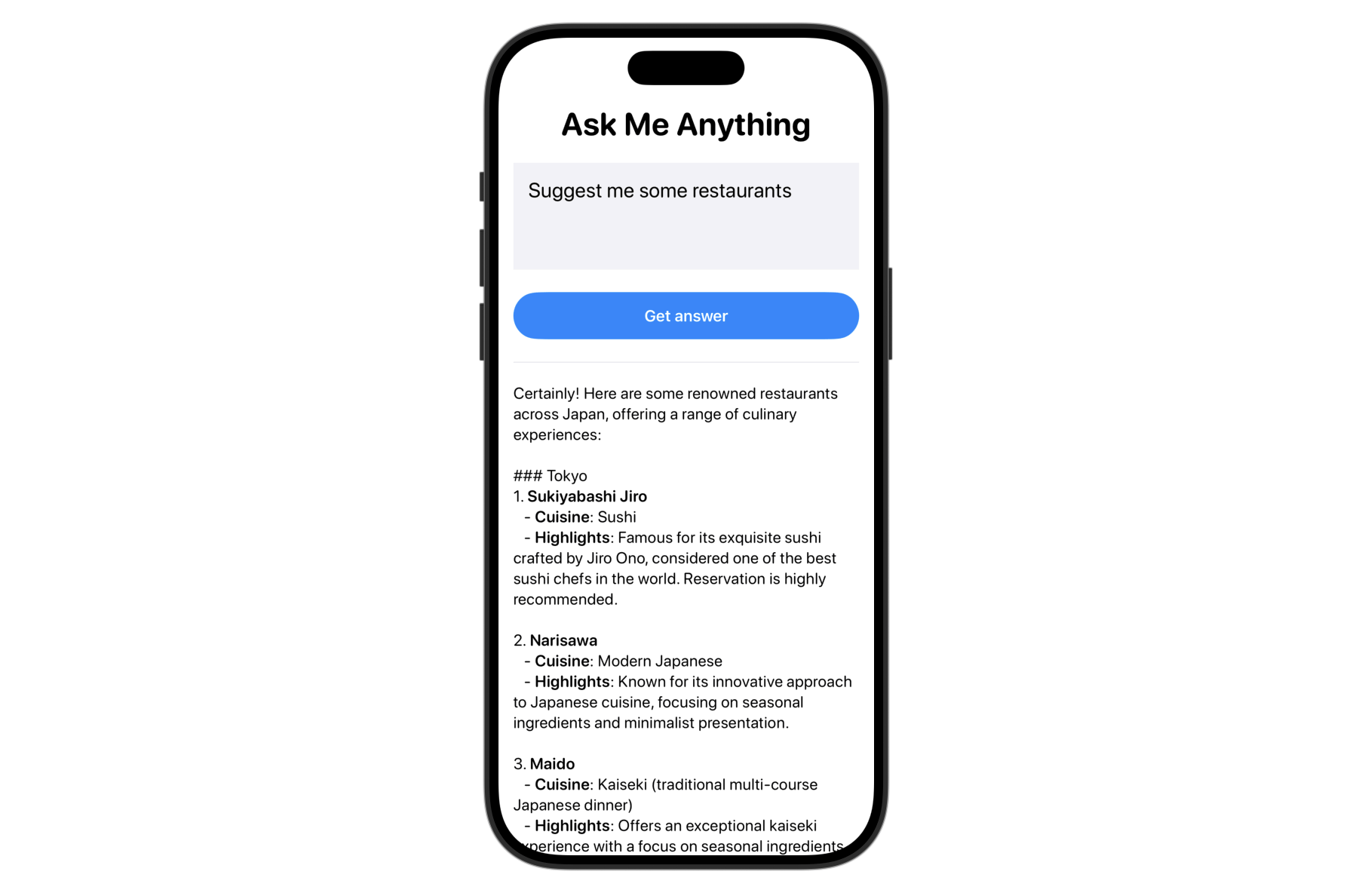

After making the change, try testing the app by first asking: "What are the must-try foods when visiting Japan?" Then follow up with: "Suggest me some restaurants."

Since the session is retained, the model understands the context and knows you're looking for restaurant recommendations in Japan.

If you don't reuse the same session, the model won't recognize the context of your follow-up question. Instead, it will respond with something like this, asking for more details:

“Sure! To give you the best suggestions, could you please let me know your location or the type of cuisine you're interested in?”
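The same idea works outside SwiftUI. Here is a minimal sketch, assuming an async context and a hypothetical helper named `askWithFollowUp()`, that sends both questions from the walkthrough through a single `LanguageModelSession`, so the second prompt is answered with the first exchange as context.

```swift
import FoundationModels

// Hypothetical helper: asks a question and a follow-up in one session.
@available(iOS 26.0, macOS 26.0, *)
func askWithFollowUp() async throws -> (first: String, followUp: String) {
    // One session for the whole conversation: it keeps the earlier
    // prompt and response, so the follow-up is read in that context.
    let session = LanguageModelSession()

    let first = try await session.respond(to: "What are the must-try foods when visiting Japan?")
    let followUp = try await session.respond(to: "Suggest me some restaurants.")

    return (first.content, followUp.content)
}
```

Creating a fresh `LanguageModelSession()` for the second call instead would drop that context. Because a reused session keeps growing with every exchange, starting a new session is also the simplest way to reset the conversation.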

Disabling the Button During Response Generation

Since the model takes time to generate a response, it's a good idea to disable the “Get answer” button while waiting for the answer. The session object includes a property called isResponding that lets you check whether the model is currently working.

To disable the button during that time, simply use the .disabled modifier and pass in the session's status like this:

```swift
Button {
    Task {
        await generateAnswer()
    }
} label: {
    // ...
}
// Greyed out while the model is still generating
.disabled(session.isResponding)
```
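Disabling the button covers taps in the UI. If the same request can also be triggered from elsewhere (a keyboard shortcut, say), a similar check can sit next to the call itself. This is a minimal sketch with a hypothetical helper `answerIfIdle(_:using:)`; skipping blank prompts is an extra assumption, not something the tutorial requires.

```swift
import Foundation
import FoundationModels

// Hypothetical helper: only sends the prompt when it is non-blank
// and the session is not already generating a response.
@available(iOS 26.0, macOS 26.0, *)
func answerIfIdle(_ question: String, using session: LanguageModelSession) async throws -> String? {
    let prompt = question.trimmingCharacters(in: .whitespacesAndNewlines)
    guard !prompt.isEmpty, !session.isResponding else { return nil }

    let response = try await session.respond(to: prompt)
    return response.content
}
```

The guard is a check-then-call, so treat it as a lightweight safety net rather than a lock; the `.disabled` modifier remains the primary protection.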

Working with Streaming Responses

The current user experience isn't ideal: since the on-device model takes time to generate a response, the app only shows the result after the entire response is ready.

If you've used ChatGPT or similar LLMs, you've probably noticed that they start showing partial results almost immediately. This creates a smoother, more responsive experience.

The Foundation Models framework also supports streaming output, which lets you display responses as they're being generated rather than waiting for the complete answer. To implement this, use the streamResponse method instead of the respond method. Here's the updated generateAnswer() function that works with streaming responses:

```swift
private func generateAnswer() async {
    do {
        // Clear the previous answer before streaming a new one
        answer = ""

        let stream = session.streamResponse(to: question)
        for try await streamData in stream {
            // Each element holds the response generated so far
            answer = streamData.asPartiallyGenerated()
        }
    } catch {
        answer = "Failed to answer the question: \(error.localizedDescription)"
    }
}
```

Just like with the respond method, you pass the user's question to the model when calling streamResponse. The key difference is that instead of waiting for the full response, you loop through the streamed data and update the answer variable with each partial result, displaying it on screen as it's generated. Each element is a snapshot of the whole response so far rather than just the newest fragment, which is why the loop assigns it to answer instead of appending it.
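To make the assign-versus-append point concrete, here is a framework-free sketch. It makes no Foundation Models calls: a plain `AsyncStream<String>` stands in for the model's stream, assuming (as the tutorial's loop does) that every element carries the full text generated so far. The names `makeSnapshotStream`, `consumeByAssigning`, and `consumeByAppending` are hypothetical.

```swift
// Framework-free model of snapshot-style streaming:
// each yielded element is the full text so far, not a delta.
func makeSnapshotStream(_ chunks: [String]) -> AsyncStream<String> {
    AsyncStream { continuation in
        var textSoFar = ""
        for chunk in chunks {
            textSoFar += chunk
            continuation.yield(textSoFar)  // cumulative snapshot
        }
        continuation.finish()
    }
}

// Mirrors the tutorial's loop: replace the displayed text with each snapshot.
func consumeByAssigning(_ stream: AsyncStream<String>) async -> String {
    var answer = ""
    for await snapshot in stream {
        answer = snapshot
    }
    return answer
}

// The mistake to avoid: appending snapshots repeats earlier text.
func consumeByAppending(_ stream: AsyncStream<String>) async -> String {
    var answer = ""
    for await snapshot in stream {
        answer += snapshot
    }
    return answer
}

let chunks = ["Sushi", ", ramen", ", tempura"]
let assigned = await consumeByAssigning(makeSnapshotStream(chunks))
let appended = await consumeByAppending(makeSnapshotStream(chunks))
print(assigned)  // Sushi, ramen, tempura
print(appended)  // SushiSushi, ramenSushi, ramen, tempura
```

Each stream is created twice because an `AsyncStream` can only be iterated once.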

Now when you test the app again and ask a question, you'll see the response appear incrementally as it's generated, creating a much more responsive user experience.

Customizing the Model with Instructions

When instantiating the model session, you can provide optional instructions to customize its use case. For the demo app, we haven't provided any instructions during initialization because this app is designed to answer any question.

However, if you're building a Q&A system for specific topics, you may want to customize the model with targeted instructions. For example, if your app is designed to answer travel-related questions, you could provide the following instruction to the model:

“You are a knowledgeable and friendly travel expert. Your job is to help users by answering travel-related questions clearly and accurately. Focus on providing useful advice, tips, and information about destinations, local culture, transportation, food, and travel planning. Keep your tone conversational, helpful, and easy to understand, as if you're speaking to someone planning their next trip.”

When writing instructions, you can define the model's role (e.g., travel expert), specify the focus of its responses, and even set the desired tone or style.
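Wiring the travel-expert instruction into code could look like the following minimal sketch. It relies on the `LanguageModelSession(instructions:)` initializer; the constant `travelInstructions` and the helper `askTravelExpert(_:)` are hypothetical names for illustration.

```swift
import FoundationModels

// The travel-expert instruction, stored once so every session can reuse it.
let travelInstructions = """
    You are a knowledgeable and friendly travel expert. Your job is to help users \
    by answering travel-related questions clearly and accurately. Focus on providing \
    useful advice, tips, and information about destinations, local culture, \
    transportation, food, and travel planning. Keep your tone conversational, \
    helpful, and easy to understand, as if you're speaking to someone planning \
    their next trip.
    """

// Hypothetical helper: answers one question as the travel expert.
@available(iOS 26.0, macOS 26.0, *)
func askTravelExpert(_ question: String) async throws -> String {
    // Instructions are fixed when the session is created and
    // apply to every prompt sent through this session.
    let session = LanguageModelSession(instructions: travelInstructions)
    let response = try await session.respond(to: question)
    return response.content
}
```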

To pass the instruction to the model, you can instantiate the session object like this:

```swift
var session = LanguageModelSession(instructions: "your instruction")
```

Summary

In this tutorial, we covered the basics of the Foundation Models framework and showed how to use Apple's on-device language model for tasks like question answering and content generation.

This is just the beginning; the framework offers much more. In future tutorials, we'll dive deeper into other new features such as the @Generable and @Guide macros, and explore more capabilities like content tagging and tool calling.

If you're looking to build smarter, AI-powered apps, now is the perfect time to explore the Foundation Models framework and start integrating on-device intelligence into your projects.