We’re excited to announce several updates to help developers quickly create AI solutions with greater choice and flexibility using the Azure AI toolchain.

As developers continue to build and deploy AI applications at scale across organizations, Azure is committed to delivering unprecedented choice in models as well as a flexible and comprehensive toolchain to address the unique, complex, and diverse needs of modern enterprises. This powerful combination of the latest models and cutting-edge tooling empowers developers to create highly customized solutions grounded in their organization’s data. That’s why we’re excited to announce several updates to help developers quickly create AI solutions with greater choice and flexibility using the Azure AI toolchain:

- Improvements to the Phi family of models, including a new Mixture of Experts (MoE) model and 20+ languages.

- AI21 Jamba 1.5 Large and Jamba 1.5 on Azure AI models as a service.

- Integrated vectorization in Azure AI Search to create a streamlined retrieval augmented generation (RAG) pipeline with integrated data prep and embedding.

- Custom generative extraction models in Azure AI Document Intelligence, so you can now extract custom fields from unstructured documents with high accuracy.

- The general availability of Text to Speech (TTS) Avatar, a capability of Azure AI Speech service, which brings natural-sounding voices and photorealistic avatars to life, across diverse languages and voices, enhancing customer engagement and overall experience.

- The general availability of Conversational PII Detection Service in Azure AI Language.

Use the Phi model family with more languages and higher throughput

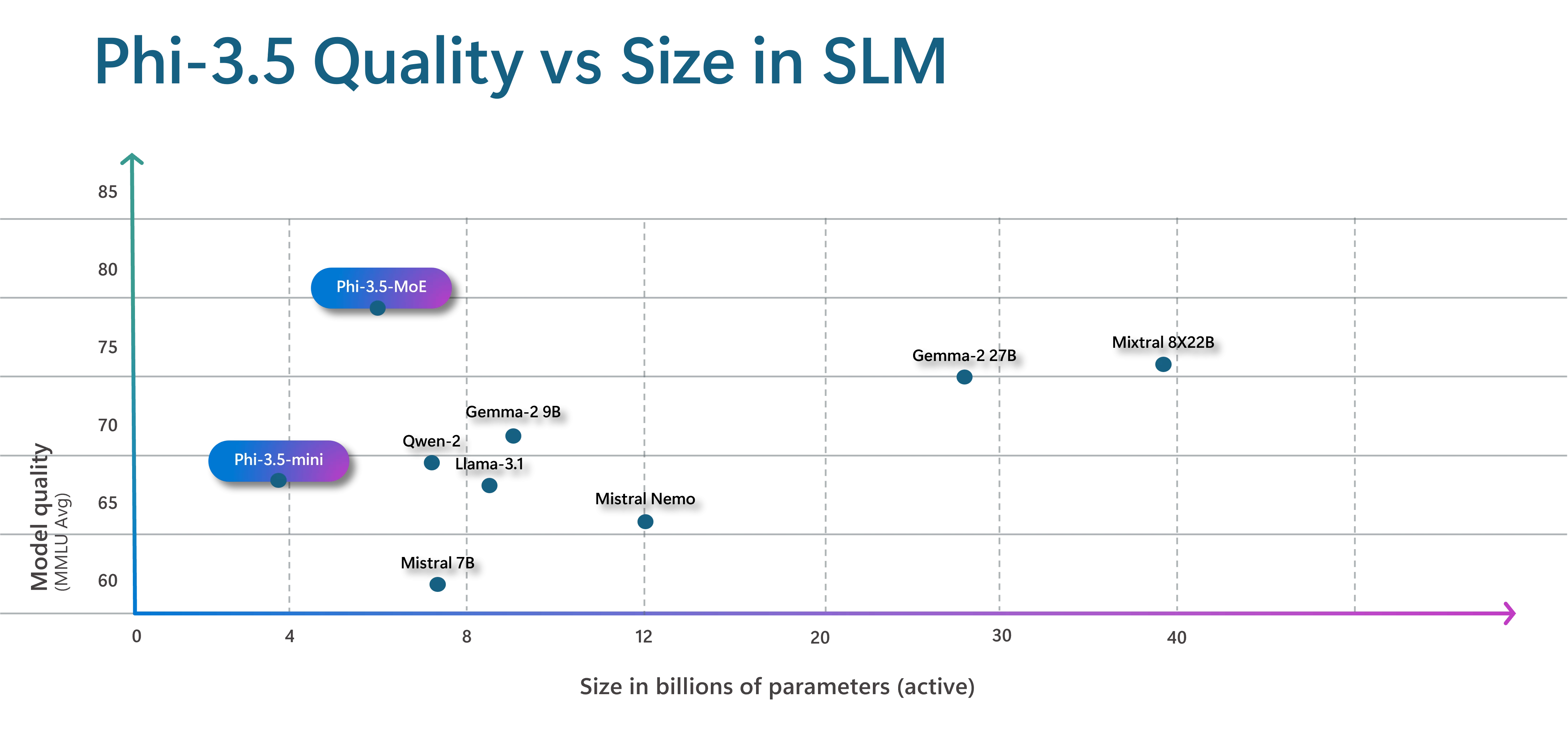

We’re introducing a new model to the Phi family, Phi-3.5-MoE, a Mixture of Experts (MoE) model. This new model combines 16 smaller experts into one, which delivers improvements in model quality and lower latency. Although the model has 42B parameters, as an MoE model it uses only 6.6B active parameters at a time: subsets of the parameters (experts) specialize during training, and at runtime only the relevant experts are used for the task. This approach gives customers the speed and computational efficiency of a small model with the domain knowledge and higher quality outputs of a larger model. Read more about how we used a Mixture of Experts architecture to improve Azure AI translation performance and quality.
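To make the routing idea concrete, here is a minimal Python sketch of a Mixture of Experts layer. It is a toy illustration, not the Phi-3.5-MoE implementation: the expert count of 16 comes from the text above, while `TOP_K = 2`, the scalar "experts", and the random router scores are assumptions chosen only for illustration.

```python
import math
import random

NUM_EXPERTS = 16  # from the text: 16 smaller experts
TOP_K = 2         # assumption for illustration; the post does not say how many experts run at once


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, top_k=TOP_K):
    """Return [(expert_index, weight), ...] for the top_k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)), key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(x, experts, router_scores, top_k=TOP_K):
    """Weighted sum of the selected experts' outputs; the other experts never run."""
    return sum(weight * experts[idx](x) for idx, weight in route(router_scores, top_k))


# Toy experts: each one simply scales its input by a different factor.
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
router_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in for a learned router
selected = route(router_scores)
output = moe_layer(1.0, experts, router_scores)
print(f"{len(selected)} of {NUM_EXPERTS} experts ran:", [idx for idx, _ in selected])
```

Only the selected experts are evaluated, which is why compute tracks the active parameter count rather than the total. Using the figures quoted above, 6.6B active out of 42B total means roughly 16% of the weights take part at a time.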

We’re also announcing a new mini model, Phi-3.5-mini. Both the new MoE model and the mini model are multilingual, supporting over 20 languages. The additional languages allow people to interact with the model in the language they are most comfortable using.

Even with the new languages, the new mini model, Phi-3.5-mini, is still a tiny 3.8B parameters.

Companies like CallMiner, a conversational intelligence leader, are selecting and using Phi models for their speed, accuracy, and security.

“CallMiner is constantly innovating and evolving our conversation intelligence platform, and we’re excited about the value Phi models are bringing to our GenAI architecture. As we evaluate different models, we’ve continued to prioritize accuracy, speed, and security... The small size of Phi models makes them incredibly fast, and fine tuning has allowed us to tailor to the specific use cases that matter most to our customers at high accuracy and across multiple languages. Further, the transparent training process for Phi models empowers us to limit bias and implement GenAI securely. We look forward to expanding our application of Phi models across our suite of products.” - Bruce McMahon, CallMiner’s Chief Product Officer.

To make outputs more predictable and define the structure needed by an application, we’re bringing Guidance to the Phi-3.5-mini serverless endpoint. Guidance is a proven open-source Python library (with 18K plus GitHub stars) that enables developers to express in a single API call the precise programmatic constraints the model must follow for structured output in JSON, Python, HTML, SQL, or whatever the use case requires. With Guidance, you can eliminate expensive retries and can, for example, constrain the model to select from pre-defined lists (e.g., medical codes), restrict outputs to direct quotes from provided context, or follow any regex. Guidance steers the model token by token in the inference stack, producing higher quality outputs and reducing cost and latency by as much as 30-50% when used for highly structured scenarios.
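The idea of steering a model token by token can be illustrated without any library. The sketch below is a conceptual toy and does not use the Guidance API: `propose` is a hypothetical stand-in for a model that ranks candidate next characters, and `ALLOWED_CODES` is a made-up pre-defined list. At each step the decoder masks out every character that would leave the allowed list, so the result is valid on the first attempt and no retry is needed.

```python
ALLOWED_CODES = ["A10.1", "A10.2", "B20.0"]  # hypothetical stand-ins for a pre-defined list


def allowed_next_chars(prefix, options):
    """Characters that keep `prefix` on a path to at least one allowed option."""
    return {
        opt[len(prefix)]
        for opt in options
        if opt.startswith(prefix) and len(opt) > len(prefix)
    }


def constrained_decode(propose, options):
    """Build the output one character at a time, masking anything off-list.

    `propose(prefix)` is a hypothetical stand-in for a model: it returns
    candidate next characters in order of preference.
    """
    prefix = ""
    while prefix not in options:
        legal = allowed_next_chars(prefix, options)
        # Take the model's most preferred character that the constraint allows.
        choice = next((c for c in propose(prefix) if c in legal), None)
        if choice is None:
            choice = sorted(legal)[0]  # fall back to any legal character
        prefix += choice
    return prefix


def noisy_model(prefix):
    """Toy 'model' whose top preferences are often illegal characters."""
    return list("Z9B2A1.0")


result = constrained_decode(noisy_model, ALLOWED_CODES)
print(result)
```

An unconstrained model with these preferences would start its answer with `Z`, which matches no code; the mask forces every step onto a valid path instead.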

We’re also updating the Phi vision model with multi-frame support. This means that Phi-3.5-vision (4.2B parameters) allows reasoning over multiple input images, unlocking new scenarios like identifying differences between images.

At the core of our product strategy, Microsoft is dedicated to supporting the development of safe and responsible AI, and provides developers with a robust suite of tools and capabilities.

Developers working with Phi models can assess quality and safety with both built-in and custom metrics using Azure AI evaluations, informing necessary mitigations. Azure AI Content Safety provides built-in controls and guardrails, such as prompt shields and protected material detection. These capabilities can be applied across models, including Phi, using content filters, or can be easily integrated into applications through a single API. Once in production, developers can monitor their application for quality and safety, adversarial prompt attacks, and data integrity, making timely interventions with the help of real-time alerts.

Introducing AI21 Jamba 1.5 Large and Jamba 1.5 on Azure AI models as a service

Furthering our goal to provide developers with access to the broadest selection of models, we’re excited to also announce two new open models, Jamba 1.5 Large and Jamba 1.5, available in the Azure AI model catalog. These models use the Jamba architecture, blending Mamba and Transformer layers for efficient long-context processing.

According to AI21, the Jamba 1.5 Large and Jamba 1.5 models are the most advanced in the Jamba series. These models use the hybrid Mamba-Transformer architecture, which balances speed, memory, and quality by employing Mamba layers for short-range dependencies and Transformer layers for long-range dependencies. Consequently, this family of models excels at managing extended contexts, making it ideal for industries including financial services, healthcare, and life sciences, as well as retail and CPG.

“We’re excited to deepen our collaboration with Microsoft, bringing the cutting-edge innovations of the Jamba Model family to Azure AI users… As an advanced hybrid SSM-Transformer (Structured State Space Model-Transformer) set of foundation models, the Jamba model family democratizes access to efficiency, low latency, high quality, and long-context handling. These models empower enterprises with enhanced performance and seamless integration with the Azure AI platform.” - Pankaj Dugar, Senior Vice President and General Manager of North America at AI21

Simplify RAG for generative AI applications

We’re streamlining RAG pipelines with integrated, end-to-end data preparation and embedding. Organizations often use RAG in generative AI applications to incorporate knowledge from private, organization-specific data without having to retrain the model. With RAG, you can use strategies like vector and hybrid retrieval to surface relevant information for a query, grounded in your data. However, to perform vector search, significant data preparation is required. Your app must ingest, parse, enrich, embed, and index data of various types, often living in multiple sources, just so that it can be used in your copilot.

Today we’re announcing general availability of integrated vectorization in Azure AI Search. Integrated vectorization automates and streamlines these processes into one flow. With automatic vector indexing and querying using integrated access to embedding models, your application unlocks the full potential of what your data offers.
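To show what those manual steps involve, here is a deliberately small Python sketch of a parse, chunk, embed, index, and query flow. It is not the Azure AI Search API: every function name is hypothetical, the "embedding" is a plain word-count vector standing in for a real embedding model, and the enrich step is omitted for brevity.

```python
import math
import re
from collections import Counter


def parse(raw):
    """Parse step: normalise whitespace in the raw text."""
    return re.sub(r"\s+", " ", raw).strip()


def chunk(text, size=8):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy embedding: a word-count vector. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents):
    """Ingest every document, then parse, chunk, embed, and store each chunk."""
    index = []
    for doc_id, raw in documents.items():
        for piece in chunk(parse(raw)):
            index.append({"doc": doc_id, "text": piece, "vector": embed(piece)})
    return index


def search(index, query, top=1):
    """Vector query: rank stored chunks by similarity to the embedded query."""
    q = embed(query)
    return sorted(index, key=lambda entry: cosine(q, entry["vector"]), reverse=True)[:top]


documents = {  # hypothetical sample data
    "hr": "Employees accrue   vacation days every month.",
    "it": "Reset your password from the account portal.",
}
index = build_index(documents)
best = search(index, "how do I reset my password")[0]
print(best["doc"], "->", best["text"])
```

Each of these stages is code an application team would otherwise write and maintain for every data source; the announcement above describes moving that work into the search service itself.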

In addition to improving developer productivity, integrated vectorization enables organizations to offer turnkey RAG systems as solutions for new projects, so teams can quickly build an application specific to their datasets and needs without having to build a custom deployment each time.

Customers like SGS & Co, a global brand impact group, are streamlining their workflows with integrated vectorization.

“SGS AI Visual Search is a GenAI application built on Azure for our global production teams to more effectively find sourcing and research information pertinent to their project… The most significant advantage offered by SGS AI Visual Search is utilizing RAG, with Azure AI Search as the retrieval system, to accurately locate and retrieve relevant assets for project planning and production.” - Laura Portelli, Product Manager, SGS & Co

You can now extract custom fields from unstructured documents with high accuracy by building and training a custom generative model within Document Intelligence. This new ability uses generative AI to extract user-specified fields from documents across a wide variety of visual templates and document types. You can get started with as few as five training documents. While you build a custom generative model, automatic labeling saves time and effort on manual annotation, results are displayed as grounded where applicable, and confidence scores are available to quickly filter high quality extracted data for downstream processing and reduce manual review time.
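As a rough illustration of how confidence scores can cut manual review, the Python sketch below splits extracted fields into an auto-accepted set and a review queue. The result shape, the field names, and the 0.80 threshold are all hypothetical and are not taken from the Document Intelligence response format.

```python
REVIEW_THRESHOLD = 0.80  # hypothetical cut-off; tune it against your own review data


def split_by_confidence(fields, threshold=REVIEW_THRESHOLD):
    """Return (accepted values, names of fields that need manual review)."""
    accepted = {
        name: field["value"]
        for name, field in fields.items()
        if field["confidence"] >= threshold
    }
    needs_review = sorted(
        name for name, field in fields.items() if field["confidence"] < threshold
    )
    return accepted, needs_review


# Hypothetical extraction result for one document.
extracted = {
    "invoice_total": {"value": "1,250.00", "confidence": 0.97},
    "due_date": {"value": "2024-09-30", "confidence": 0.55},
}
accepted, needs_review = split_by_confidence(extracted)
print("accepted:", accepted)
print("needs review:", needs_review)
```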

Create engaging experiences with prebuilt and custom avatars

Today we’re excited to announce that Text to Speech (TTS) Avatar, a capability of Azure AI Speech service, is now generally available. This service brings natural-sounding voices and photorealistic avatars to life, across diverse languages and voices, enhancing customer engagement and overall experience. With TTS Avatar, developers can create personalized and engaging experiences for their customers and employees, while also improving efficiency and providing innovative solutions.

The TTS Avatar service provides developers with a variety of pre-built avatars, featuring a diverse portfolio of natural-sounding voices, as well as an option to create custom synthetic voices using Azure Custom Neural Voice. Additionally, the photorealistic avatars can be customized to match a company’s branding. For example, Fujifilm is using TTS Avatar with NURA, the world’s first AI-powered health screening center.

“Embracing the Azure TTS Avatar at NURA as our 24-hour AI assistant marks a pivotal step in healthcare innovation. At NURA, we envision a future where AI-powered assistants redefine customer interactions, brand management, and healthcare delivery. Working with Microsoft, we’re honored to pioneer the next generation of digital experiences, revolutionizing how businesses connect with customers and elevate brand experiences, paving the way for a new era of personalized care and engagement. Let’s bring more smiles together.” - Dr. Kasim, Executive Director and Chief Operating Officer, Nura AI Health Screening

As we bring this technology to market, ensuring responsible use and development of AI remains our top priority. Custom Text to Speech Avatar is a limited access service in which we have integrated safety and security features. For example, the system embeds invisible watermarks in avatar outputs. These watermarks allow approved users to verify whether a video was created using Azure AI Speech’s avatar feature. Additionally, we provide guidelines for the responsible use of TTS Avatar, including measures to promote transparency in user interactions, identify and mitigate potential bias or harmful synthetic content, and integrate with Azure AI Content Safety. In this transparency note, we describe the technology and capabilities of TTS Avatar, its approved use cases, considerations when choosing use cases, its limitations, fairness considerations, and best practices for improving system performance. We also require all developers and content creators to apply for access and comply with our code of conduct when using TTS Avatar features, including prebuilt and custom avatars.

Use Azure Machine Learning resources in VS Code

We’re thrilled to announce the general availability of the VS Code extension for Azure Machine Learning. The extension allows you to build, train, deploy, debug, and manage machine learning models with Azure Machine Learning directly from your favorite VS Code setup, whether on desktop or web. With features like VNET support, IntelliSense, and integration with Azure Machine Learning CLI, the extension is now ready for production use. Read this tech community blog to learn more about the extension.

Clients like Fashable have put this into manufacturing.

“We have been using the VS Code extension for Azure Machine Learning since its preview release, and it has significantly streamlined our workflow… The ability to manage everything from building to deploying models directly within our preferred VS Code environment has been a game-changer. The seamless integration and robust features like interactive debugging and VNET support have enhanced our productivity and collaboration. We’re thrilled about its general availability and look forward to leveraging its full potential in our AI projects.” - Ornaldo Ribas Fernandes, Co-founder and CEO, Fashable

Protect users’ privacy

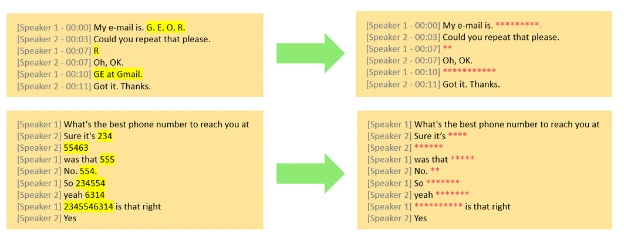

Today we’re excited to announce the general availability of Conversational PII Detection Service in Azure AI Language, enhancing Azure AI’s ability to identify and redact sensitive information in conversations, starting with the English language. This service aims to improve data privacy and security for developers building generative AI apps for their enterprise. The Conversational PII redaction service expands upon the Text PII redaction service, supporting customers looking to identify, categorize, and redact sensitive information such as phone numbers and email addresses in unstructured text. This Conversational PII model is specialized for conversational-style inputs, particularly those found in speech transcriptions from meetings and calls.
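For readers new to redaction, the Python sketch below shows the shape of the task on a short transcript. It is a rule-based stand-in built on two simplified regular expressions, not the Conversational PII model or its API; the patterns, placeholder tags, and sample transcript are assumptions for illustration, and real conversational input is exactly where such hand-written rules tend to fall short.

```python
import re

# Simplified patterns for illustration only; they will miss many real-world formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(utterance):
    """Replace email addresses and phone numbers with category placeholders."""
    utterance = EMAIL.sub("[EMAIL]", utterance)
    return PHONE.sub("[PHONE]", utterance)


def redact_transcript(turns):
    """Apply redaction to each (speaker, text) turn of a conversation."""
    return [(speaker, redact(text)) for speaker, text in turns]


transcript = [  # hypothetical call transcript
    ("agent", "Can I get a number to reach you?"),
    ("customer", "Call me at 555-123-4567 or mail jo@example.com."),
]
for speaker, text in redact_transcript(transcript):
    print(f"{speaker}: {text}")
```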

Self-serve your Azure OpenAI Service PTUs

We recently announced updates to Azure OpenAI Service, including the ability to manage your Azure OpenAI Service quota deployments without relying on support from your account team, allowing you to request Provisioned Throughput Units (PTUs) more flexibly and efficiently. We also released OpenAI’s latest model when they made it available on 8/7, which introduced Structured Outputs, like JSON Schemas, for the new GPT-4o and GPT-4o mini models. Structured outputs are particularly valuable for developers who need to validate and format AI outputs into structures like JSON Schemas.
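To illustrate why a schema matters downstream, here is a small Python sketch that checks model output against a schema before an application uses it. It uses only the standard library and handles a tiny subset of JSON Schema (`required` plus simple property types); the schema, field names, and `validate` helper are hypothetical and are not part of the Azure OpenAI Service API.

```python
import json

# Hypothetical schema in JSON Schema style; only a small subset is checked below.
SCHEMA = {
    "type": "object",
    "required": ["name", "quantity"],
    "properties": {
        "name": {"type": "string"},
        "quantity": {"type": "integer"},
    },
}

PYTHON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def matches(value, type_name):
    """True if `value` has the given JSON type (bool is not accepted as a number)."""
    if type_name in ("integer", "number") and isinstance(value, bool):
        return False
    return isinstance(value, PYTHON_TYPES[type_name])


def validate(raw_text, schema):
    """Parse `raw_text` as JSON and return (data, list of error messages)."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value is not an object"]
    errors = [
        f"missing required field: {key}"
        for key in schema.get("required", [])
        if key not in data
    ]
    for key, rule in schema.get("properties", {}).items():
        if key in data and not matches(data[key], rule["type"]):
            errors.append(f"{key} should be of type {rule['type']}")
    return data, errors


data, errors = validate('{"name": "widget", "quantity": 3}', SCHEMA)
print(data, errors)
```

When the model is constrained to the schema at generation time, a check like this becomes a safety net rather than a retry loop.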

We continue to invest across the Azure AI stack to bring state-of-the-art innovation to our customers so you can build, deploy, and scale your AI solutions safely and confidently. We cannot wait to see what you build next.

Stay up to date with more Azure AI news