Imagine an AI application that processes your voice, analyzes your camera feed, and holds real-time, human-like conversations. Until recently, building such a tech-intensive multimodal application meant wrestling with asynchronous operations, juggling multiple API calls, and piecing together code that later proved hard to maintain or debug. Enter GenAI Processors.

This new open-source Python library from Google DeepMind opens new paths for developers building AI applications. It brings order to the chaotic landscape of AI development. In this blog, we'll learn how GenAI Processors makes complex AI workflows more accessible, and then use it to build a live AI agent.

What are GenAI Processors?

GenAI Processors is a new open-source Python library developed by Google DeepMind to bring structure and simplicity to these development challenges. It acts as an abstraction that defines a common processor interface for every stage, from input handling and pre-processing to the actual model calls and output processing.

Think of GenAI Processors as a common language for AI workflows. Rather than writing custom code from scratch for every component in your AI pipeline, you work with standardized "Processor" units that are easy to combine, test, and maintain. At its core, GenAI Processors treats all input and output as an asynchronous stream of ProcessorParts (bidirectional streaming). Standardized data parts (e.g., audio chunks, text transcriptions, image frames) flow through the pipeline with accompanying metadata.

The key concepts in GenAI Processors are:

- Processors: Individual units of work that take input streams and produce output streams

- Processor Parts: Standardized data chunks with metadata

- Streaming: Real-time, bidirectional data flow through your pipeline

- Composition: Combining processors using simple operations like +
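A toy model helps make these four ideas concrete. The sketch below uses only the standard library and is not the GenAI Processors API. `Part` is a hypothetical stand-in for `ProcessorPart`. The hypothetical `Processor` class overloads `+` so that one processor's output stream becomes the next processor's input stream.

```python
import asyncio
from dataclasses import dataclass, field
from typing import AsyncIterator, Callable


@dataclass
class Part:
    """Toy stand-in for a ProcessorPart: a payload plus metadata."""
    text: str
    mimetype: str = "text/plain"
    metadata: dict = field(default_factory=dict)


Stream = AsyncIterator[Part]


class Processor:
    """Toy processor: wraps a function mapping an input stream to an output stream."""

    def __init__(self, fn: Callable[[Stream], Stream]):
        self.fn = fn

    def __call__(self, stream: Stream) -> Stream:
        return self.fn(stream)

    def __add__(self, other: "Processor") -> "Processor":
        # Chaining: the output stream of self becomes the input stream of other.
        return Processor(lambda stream: other(self(stream)))


async def upper(stream: Stream) -> Stream:
    async for part in stream:
        yield Part(part.text.upper(), part.mimetype, part.metadata)


async def tag(stream: Stream) -> Stream:
    async for part in stream:
        yield Part(part.text, part.mimetype, {**part.metadata, "tagged": True})


async def from_list(texts: list[str]) -> Stream:
    for text in texts:
        yield Part(text)


async def collect(stream: Stream) -> list[Part]:
    return [part async for part in stream]


pipeline = Processor(upper) + Processor(tag)
results = asyncio.run(collect(pipeline(from_list(["hello", "world"]))))
for part in results:
    print(part.text, part.metadata)
# HELLO {'tagged': True}
# WORLD {'tagged': True}
```

Each stage is an async generator. The first part therefore passes through both stages before the second input part is even produced, which is the streaming behavior described above.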

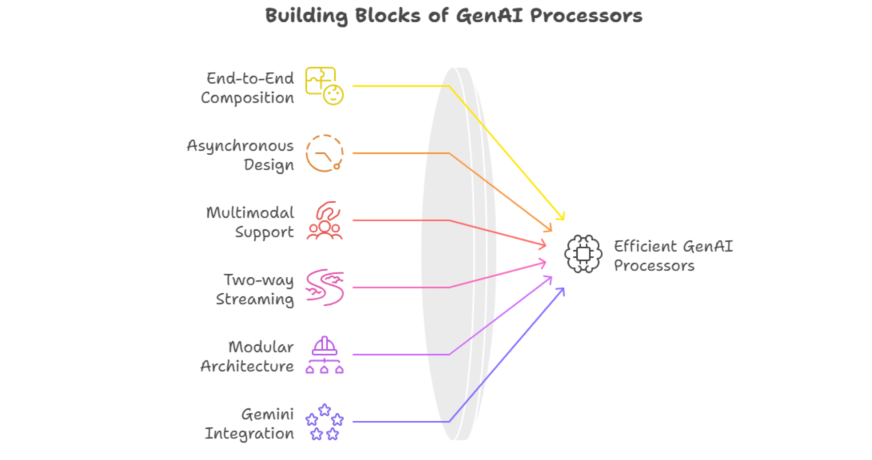

Key Features of GenAI Processors

- End-to-End Composition: Processors are joined with an intuitive `+` syntax, for example `live_agent = input_processor + live_processor + play_output`

- Asynchronous Design: Built on Python's asyncio for efficient handling of I/O-bound and pure compute-bound tasks without manual threading.

- Multimodal Support: Handles text, audio, video, and images under a single unified interface via the ProcessorPart wrapper

- Two-way Streaming: Lets components communicate in both directions in real time, which enables interactive experiences.

- Modular Architecture: Reusable, testable components that greatly ease the maintenance of intricate pipelines.

- Gemini Integration: Native support for the Gemini Live API and standard text-based LLM operations.
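The multimodal point is easiest to see with a mixed stream. This is a standard-library toy sketch, not library code; `Part` and `redact_text` are hypothetical names. Audio, image, and text parts travel in one stream and are distinguished by a MIME type. The processor touches only the parts it understands and passes everything else through in order.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator


@dataclass(frozen=True)
class Part:
    """Toy multimodal part: raw bytes or text, labelled with a MIME type."""
    mimetype: str
    data: bytes = b""
    text: str = ""


async def mixed_stream() -> AsyncIterator[Part]:
    # One stream carrying three modalities, as a live agent might receive them.
    yield Part("audio/l16", data=b"\x00\x01" * 4)
    yield Part("text/plain", text="my pin is 1234")
    yield Part("image/jpeg", data=b"\xff\xd8\xff")


async def redact_text(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    """Masks digits in text parts; non-text parts pass through untouched."""
    async for part in stream:
        if part.mimetype.startswith("text/"):
            masked = "".join("*" if ch.isdigit() else ch for ch in part.text)
            yield Part(part.mimetype, text=masked)
        else:
            yield part


async def main() -> list[Part]:
    return [part async for part in redact_text(mixed_stream())]


parts = asyncio.run(main())
for part in parts:
    print(part.mimetype, part.text or f"{len(part.data)} bytes")
# audio/l16 8 bytes
# text/plain my pin is ****
# image/jpeg 3 bytes
```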

How to Install GenAI Processors?

Getting started with GenAI Processors is quite simple:

Prerequisites

- Python 3.10+

- pip package manager

- Google Cloud account (for Gemini API access)

Installation Steps

1. Install the library:

```bash
pip install genai-processors
```

2. Set up authentication:

```bash
# For Google AI Studio
export GOOGLE_API_KEY="your-api-key"

# Or for Google Cloud
gcloud auth application-default login
```

3. Verify the installation:

```python
import genai_processors

print(genai_processors.__version__)
```

4. Development setup (optional):

```bash
# Clone for examples or contributions
git clone https://github.com/google-gemini/genai-processors.git
cd genai-processors
pip install -e .
```

How Do GenAI Processors Work?

GenAI Processors works through a stream-based processing model, in which data flows along a pipeline of connected processors. Each processor:

- Receives a stream of ProcessorParts

- Processes the information (transformation, API calls, and so on.)

- Outputs a stream of results

- Passes the results to the next processor in the chain

Data Flow Example

```
Audio Input   →  Speech to Text →  LLM Processing →  Text to Speech →  Audio Output
     ↓                 ↓                 ↓                 ↓                ↓
ProcessorPart →  ProcessorPart  →  ProcessorPart  →  ProcessorPart  →  ProcessorPart
```
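The same flow can be simulated end to end with stub stages. Each stage below is a plain async generator standing in for a real processor. No actual speech recognition, model call, or synthesis happens; `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical placeholders. What matters is the shape: every stage consumes parts and emits parts, and the MIME type changes from audio to text and back again.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator


@dataclass
class Part:
    mimetype: str
    payload: str  # toy payload: a string standing in for audio bytes or text


async def microphone(utterances: list[str]) -> AsyncIterator[Part]:
    for utterance in utterances:
        yield Part("audio/l16", utterance)  # pretend this is captured audio


async def fake_stt(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    async for part in stream:
        yield Part("text/plain", part.payload)  # "transcribe" audio to text


async def fake_llm(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    async for part in stream:
        yield Part("text/plain", f"You said: {part.payload}")


async def fake_tts(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    async for part in stream:
        yield Part("audio/l16", part.payload.upper())  # "synthesize" text to audio


async def speaker(stream: AsyncIterator[Part]) -> list[Part]:
    return [part async for part in stream]  # collect instead of playing


pipeline = fake_tts(fake_llm(fake_stt(microphone(["hi", "bye"]))))
played = asyncio.run(speaker(pipeline))
for part in played:
    print(part.mimetype, part.payload)
# audio/l16 YOU SAID: HI
# audio/l16 YOU SAID: BYE
```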

Core Components

The core components of GenAI Processors are:

1. Input Processors

- VideoIn(): Camera stream processing

- PyAudioIn(): Microphone input

- FileInput(): File input

2. Processing Processors

- LiveProcessor(): Gemini Live API integration

- GenaiModel(): Standard LLM processing

- SpeechToText(): Audio transcription

- TextToSpeech(): Voice synthesis

3. Output Processors

- PyAudioOut(): Audio playback

- FileOutput(): File writing

- StreamOutput(): Real-time streaming

Concurrency and Performance

GenAI Processors is designed to maximize the concurrent execution of a Processor. Any part of the execution flow can be computed as soon as all of its ancestors in the processing graph have been computed. In practice, this means your application can work on multiple data streams at once, which shortens response times and improves the user experience.
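A small experiment shows what concurrent processing buys you. The sketch uses plain asyncio and is not the library's scheduler. A hypothetical `process_part` coroutine simulates slow per-part work, and `asyncio.gather` runs all parts at once. Results still come back in input order because `gather` returns them in the order the awaitables were passed.

```python
import asyncio
import time

in_flight = 0
max_in_flight = 0


async def process_part(text: str, delay: float) -> str:
    """Simulated I/O-bound work on one part (e.g. a model call)."""
    global in_flight, max_in_flight
    in_flight += 1
    max_in_flight = max(max_in_flight, in_flight)
    await asyncio.sleep(delay)
    in_flight -= 1
    return text.upper()


async def main() -> list[str]:
    parts = ["a", "b", "c", "d"]
    delays = [0.20, 0.05, 0.15, 0.10]  # later parts may finish first
    return await asyncio.gather(*(process_part(p, d) for p, d in zip(parts, delays)))


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results)  # ['A', 'B', 'C', 'D'] despite the uneven delays
print(f"max concurrent: {max_in_flight}, took {elapsed:.2f}s")
```

Run sequentially, the four parts would take about 0.5 s. Concurrently, they take roughly as long as the slowest one (about 0.2 s). This only illustrates the general idea; it does not reproduce how GenAI Processors schedules work internally.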

Hands-On: Building a Live Agent Using GenAI Processors

So, let's build a complete live AI agent that connects the camera and audio streams, sends them to the Gemini Live API for processing, and finally plays back audio responses.

Note: If you'd like to learn all about AI agents, join our complete Agentic AI Pioneer Program here.

Project Structure

This is what our project structure will look like:

```
live_agent/
├── main.py
├── config.py
└── requirements.txt
```

Step 1: Configuration Step
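The audio values in the configuration that follows are a 16 kHz sample rate, mono, 1024-sample chunks, and 16-bit samples. Together they determine how much data each chunk carries. A quick back-of-the-envelope check in plain Python (no library calls) makes those numbers tangible. It assumes that `chunk_size` counts samples per chunk and that video frames are uncompressed 8-bit RGB before any encoding.

```python
# Back-of-the-envelope numbers for the settings in config.py.
# Assumptions: chunk_size counts samples (frames) per chunk, and video frames
# are uncompressed 8-bit RGB before any encoding.
SAMPLE_RATE_HZ = 16_000
CHANNELS = 1
CHUNK_SIZE = 1_024
BYTES_PER_SAMPLE = 2  # "int16" = 16 bits

WIDTH, HEIGHT, FPS = 640, 480, 30
BYTES_PER_PIXEL = 3  # R, G, B

chunk_duration_ms = CHUNK_SIZE / SAMPLE_RATE_HZ * 1000
chunk_bytes = CHUNK_SIZE * CHANNELS * BYTES_PER_SAMPLE
audio_bytes_per_second = SAMPLE_RATE_HZ * CHANNELS * BYTES_PER_SAMPLE
raw_frame_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
raw_video_bytes_per_second = raw_frame_bytes * FPS

print(f"audio: {chunk_duration_ms:.0f} ms and {chunk_bytes} bytes per chunk")
print(f"audio: {audio_bytes_per_second} bytes per second")
print(f"video: {raw_frame_bytes} bytes per raw frame, "
      f"{raw_video_bytes_per_second / 1e6:.1f} MB per second")
# audio: 64 ms and 2048 bytes per chunk
# audio: 32000 bytes per second
# video: 921600 bytes per raw frame, 27.6 MB per second
```

Each audio chunk covers 64 ms of speech. Raw video at these settings is several hundred times heavier than raw audio, which is worth keeping in mind when tuning resolution and frame rate.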

```python
import os

from genai_processors.core import audio_io

# API configuration
GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")
if not GOOGLE_API_KEY:
    raise ValueError("Please set the GOOGLE_API_KEY environment variable")

# Audio configuration
AUDIO_CONFIG = audio_io.AudioConfig(
    sample_rate=16000,
    channels=1,
    chunk_size=1024,
    format="int16",
)

# Video configuration
VIDEO_CONFIG = {
    "width": 640,
    "height": 480,
    "fps": 30,
}
```

Step 2: Core Agent Implementation

```python
import asyncio

from genai_processors import streams
from genai_processors.core import (
    audio_io,
    live_model,
    video,
)

from config import AUDIO_CONFIG, VIDEO_CONFIG, GOOGLE_API_KEY


class LiveAgent:
    def __init__(self):
        self.setup_processors()

    def setup_processors(self):
        """Initialize all processors for the live agent"""
        # Input processor: combines camera and microphone
        self.input_processor = (
            video.VideoIn(
                device_id=0,
                width=VIDEO_CONFIG["width"],
                height=VIDEO_CONFIG["height"],
                fps=VIDEO_CONFIG["fps"],
            )
            + audio_io.PyAudioIn(
                config=AUDIO_CONFIG,
                device_index=None,  # Use default microphone
            )
        )

        # Gemini Live API processor
        self.live_processor = live_model.LiveProcessor(
            api_key=GOOGLE_API_KEY,
            model_name="gemini-2.0-flash-exp",
            system_instruction="You are a helpful AI assistant. Respond naturally to user interactions.",
        )

        # Output processor: handles audio playback with interruption support
        self.output_processor = audio_io.PyAudioOut(
            config=AUDIO_CONFIG,
            device_index=None,  # Use default speaker
            enable_interruption=True,
        )

        # Full agent pipeline
        self.agent = self.input_processor + self.live_processor + self.output_processor

    async def run(self):
        """Start the live agent"""
        print("🤖 Live Agent starting...")
        print("🎥 Camera and microphone active")
        print("🔊 Audio output ready")
        print("💬 Start speaking to interact!")
        print("Press Ctrl+C to stop")

        try:
            async for part in self.agent(streams.endless_stream()):
                # Handle different types of output
                if part.part_type == "text":
                    print(f"🤖 AI: {part.text}")
                elif part.part_type == "audio":
                    print(f"🔊 Audio chunk: {len(part.audio_data)} bytes")
                elif part.part_type == "video":
                    print(f"🎥 Video frame: {part.width}x{part.height}")
                elif part.part_type == "metadata":
                    print(f"📊 Metadata: {part.metadata}")
        except Exception as e:
            print(f"❌ Error: {e}")


# Advanced agent with custom processing
class CustomLiveAgent(LiveAgent):
    def __init__(self):
        super().__init__()
        self.conversation_history = []
        self.user_emotions = []

    def setup_processors(self):
        """Enhanced setup with custom processors"""
        from genai_processors.core import (
            genai_model,
            realtime,
            speech_to_text,
            text_to_speech,
        )

        # Custom input processing with STT
        self.input_processor = audio_io.PyAudioIn(config=AUDIO_CONFIG) + speech_to_text.SpeechToText(
            language="en-US",
            interim_results=True,
        )

        # Custom model with conversation memory
        self.genai_processor = genai_model.GenaiModel(
            api_key=GOOGLE_API_KEY,
            model_name="gemini-pro",
            system_instruction="""You are an empathetic AI assistant.
Remember our conversation history and respond with emotional intelligence.
If the user seems upset, be supportive. If they're excited, share their enthusiasm.""",
        )

        # Custom TTS with emotion
        self.tts_processor = text_to_speech.TextToSpeech(
            voice_name="en-US-Neural2-J",
            speaking_rate=1.0,
            pitch=0.0,
        )

        # Audio rate limiting for smooth playback
        self.rate_limiter = audio_io.RateLimitAudio(sample_rate=AUDIO_CONFIG.sample_rate)

        # Full custom pipeline
        self.agent = (
            self.input_processor
            + realtime.LiveModelProcessor(
                turn_processor=self.genai_processor + self.tts_processor + self.rate_limiter
            )
            + audio_io.PyAudioOut(config=AUDIO_CONFIG)
        )


if __name__ == "__main__":
    # Choose your agent type
    agent_type = input("Choose agent type (1: Simple, 2: Custom): ")
    if agent_type == "2":
        agent = CustomLiveAgent()
    else:
        agent = LiveAgent()

    # Run the agent; asyncio.run() re-raises Ctrl+C as KeyboardInterrupt here,
    # outside the coroutine, so this is where it must be caught
    try:
        asyncio.run(agent.run())
    except KeyboardInterrupt:
        print("\n👋 Live Agent stopping...")
```

Step 3: Enhanced Features

Let's add emotion detection and response customization.
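The class that follows checks keywords as raw substrings, which has a pitfall: "what" also fires on "somewhat" and "how" on "show". In addition, "fantastic" appears in two lists, so the first list always wins. A minimal alternative using only Python's `re` module matches whole words instead, then folds the chosen style into a system instruction. `detect_emotion` and `build_instruction` are hypothetical helpers, not part of GenAI Processors.

```python
import re

# Whole-word keyword lists; "fantastic" is kept in one list only.
EMOTION_KEYWORDS = {
    "happy": ["great", "awesome", "wonderful"],
    "sad": ["sad", "disappointed", "down", "upset"],
    "excited": ["amazing", "incredible", "wow", "fantastic"],
    "confused": ["confused", "don't understand", "what", "how"],
}

STYLES = {
    "happy": "Respond with enthusiasm and positivity!",
    "sad": "Respond with empathy and support. Offer help.",
    "excited": "Match their excitement! Use energetic language.",
    "confused": "Be patient and explanatory. Break down complex ideas.",
    "neutral": "Respond naturally and helpfully.",
}


def detect_emotion(text: str) -> str:
    """Return the first emotion whose keyword appears as a whole word or phrase."""
    lowered = text.lower()
    for emotion, keywords in EMOTION_KEYWORDS.items():
        for keyword in keywords:
            # \b word boundaries stop "what" from matching inside "somewhat".
            if re.search(rf"\b{re.escape(keyword)}\b", lowered):
                return emotion
    return "neutral"


def build_instruction(base_instruction: str, user_text: str) -> str:
    """Append the style for the detected emotion to a base system instruction."""
    return f"{base_instruction}\n{STYLES[detect_emotion(user_text)]}"


print(detect_emotion("I'm somewhat tired"))     # neutral
print(detect_emotion("Wow, that's fantastic"))  # excited
print(build_instruction("You are a helpful assistant.", "This is great!"))
```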

```python
class EmotionAwareLiveAgent(LiveAgent):
    def __init__(self):
        super().__init__()
        self.emotion_history = []

    async def process_with_emotion(self, text_input):
        """Process input with emotion awareness"""
        # Simple emotion detection (in practice, use more sophisticated methods)
        emotions = {
            "happy": ["great", "awesome", "fantastic", "wonderful"],
            "sad": ["sad", "disappointed", "down", "upset"],
            "excited": ["amazing", "incredible", "wow", "fantastic"],
            "confused": ["confused", "don't understand", "what", "how"],
        }

        detected_emotion = "neutral"
        for emotion, keywords in emotions.items():
            if any(keyword in text_input.lower() for keyword in keywords):
                detected_emotion = emotion
                break

        self.emotion_history.append(detected_emotion)
        return detected_emotion

    def get_emotional_response_style(self, emotion):
        """Customize the response based on the detected emotion"""
        styles = {
            "happy": "Respond with enthusiasm and positivity!",
            "sad": "Respond with empathy and support. Offer help.",
            "excited": "Match their excitement! Use energetic language.",
            "confused": "Be patient and explanatory. Break down complex ideas.",
            "neutral": "Respond naturally and helpfully.",
        }
        return styles.get(emotion, styles["neutral"])
```

Step 4: Running the Agent

requirements.txt

```
genai-processors>=0.1.0
google-generativeai>=0.3.0
pyaudio>=0.2.11
opencv-python>=4.5.0
```

Commands to run the agent:

```bash
pip install -r requirements.txt
python main.py
```

Benefits of GenAI Processors

- Simplified Development Experience: GenAI Processors removes much of the complexity of managing multiple API calls and asynchronous operations. Developers can focus on building features rather than infrastructure code, which reduces both development time and potential bugs.

- Unified Multimodal Interface: The library offers a single, consistent interface for working with text, audio, video, and image data through ProcessorPart wrappers. You no longer have to learn different APIs for different data types, which makes your work considerably simpler.

- Real-Time Performance: Built natively on Python's asyncio, GenAI Processors shines at handling concurrent operations and streaming data. This architecture helps keep latency low and enables smooth real-time interactions, the kind of execution needed for live applications such as voice assistants or interactive video processing.

- Modular and Reusable Architecture: Because the design is modular, components are much easier to test, debug, and maintain. You can swap processors at will, add new capabilities, and change workflows without rewriting entire systems.

Limitations of GenAI Processors

- Google Ecosystem Dependency: Although other AI models are supported, the library is heavily optimized for Google's AI services. Developers relying on other AI providers may not get the same seamless integration and may need extra configuration.

- Learning Curve for Complex Workflows: The basic concepts are simple, but sophisticated multimodal apps require knowledge of asynchronous programming patterns and stream-processing concepts, which can be difficult for beginners.

- Limited Community and Documentation: Because this is a relatively new open-source DeepMind project, community resources, tutorials, and third-party extensions are still evolving. This makes advanced troubleshooting and finding examples more challenging.

- Resource Intensive: Real-time multimodal processing is computationally expensive, especially when video streams are combined with audio and text. Such applications can consume substantial system resources and need to be optimized for production deployment.

Use Cases of GenAI Processors

- Interactive Customer Service Bots: Build advanced customer service agents that process voice calls, analyze customer emotions via video, and give contextual replies, all while holding natural real-time conversations with minimal latency.

- Educational AI Tutors: Design personalized learning assistants that observe students' facial expressions, process spoken questions, and provide explanations via text, audio, and visual aids in real time, adapting to each individual's learning style.

- Healthcare and Medical Monitoring: Monitor patients' vital signs via video and their voice patterns for early disease detection, then integrate this with medical databases for a comprehensive health assessment.

- Content Creation and Media Production: Build real-time video editing, automated podcast generation, or live streaming in which AI responds to audience reactions, generates captions, and dynamically enhances content.

Conclusion

GenAI Processors represents a paradigm shift in developing AI applications, turning complex, disconnected workflows into elegant, maintainable solutions. By providing a common interface for multimodal AI processing, it lets developers focus on innovating features instead of wrestling with infrastructure concerns.

So, if streaming, multimodal, and responsive applications are the future of AI, GenAI Processors can deliver that today. If you want to build the next big customer service bot, educational assistant, or creative tool, GenAI Processors gives you a solid foundation.

Frequently Asked Questions

Q1. Is GenAI Processors free to use?

GenAI Processors is completely open-source and free to use. However, you'll incur costs for the underlying AI services you integrate with, such as Google's Gemini API, speech-to-text services, or cloud computing resources. These costs depend on your usage volume and the specific services you integrate into your processors.

Q2. Can I use GenAI Processors with AI models other than Google's?

Yes. While GenAI Processors is optimized for Google's AI ecosystem, its modular architecture allows integration with other AI providers. You can create custom processors that work with OpenAI, Anthropic, or any other AI service by implementing the processor interface, although you may need to handle additional configuration and API management yourself.
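As a rough illustration of that adapter idea, here is a standard-library sketch. It does not use the library's real base classes, so check the GenAI Processors documentation for their exact interface. `provider_processor` and `fake_provider` are hypothetical names. In practice, `fake_provider` would be replaced by a call to whichever provider SDK you use.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, Awaitable, Callable


@dataclass
class Part:
    mimetype: str
    text: str = ""


# Any provider SDK call can hide behind this shape: prompt in, reply out.
ModelCall = Callable[[str], Awaitable[str]]


def provider_processor(call_model: ModelCall):
    """Toy adapter: turns an async model call into a stream-to-stream processor."""

    async def process(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
        async for part in stream:
            if part.mimetype == "text/plain":
                yield Part("text/plain", await call_model(part.text))
            else:
                yield part  # leave non-text parts for other processors

    return process


async def fake_provider(prompt: str) -> str:
    """Placeholder for a real client call to another AI provider."""
    await asyncio.sleep(0)
    return f"[reply to: {prompt}]"


async def demo() -> list[Part]:
    async def inputs() -> AsyncIterator[Part]:
        yield Part("text/plain", "Hello")
        yield Part("image/png")

    llm = provider_processor(fake_provider)
    return [part async for part in llm(inputs())]


outputs = asyncio.run(demo())
print([part.text for part in outputs])  # ['[reply to: Hello]', '']
```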

Q3. What are the system requirements?

You need Python 3.10+, enough RAM for your specific use case (at least 4GB recommended for basic applications, 8GB+ for video processing), and a stable internet connection for API calls. For real-time video processing, a dedicated GPU can significantly improve performance, although it is not strictly required for all use cases.

Q4. How does GenAI Processors handle data privacy?

GenAI Processors processes data according to your configuration: you control where data is sent and stored. When you use cloud AI services, data privacy depends on your chosen provider's policies. For sensitive applications, you can implement local processing or use on-premises AI models, although this may require additional setup and custom processor development.

Q5. Is GenAI Processors suitable for production use?

Yes. GenAI Processors is designed for production use, with an asynchronous architecture and efficient resource management. However, you'll need to plan for error handling, monitoring, scaling, and rate limiting based on your specific requirements. The library provides the building blocks, but production deployment requires additional infrastructure such as load balancing and monitoring systems.
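For error handling around model calls, a common pattern is to retry transient failures with exponential backoff. This is a generic asyncio sketch, not a GenAI Processors feature. `with_retries` and `flaky_model_call` are hypothetical, and which exceptions count as transient depends on the client library you call.

```python
import asyncio
import random
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def with_retries(
    call: Callable[[], Awaitable[T]],
    attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple = (ConnectionError, TimeoutError),
) -> T:
    """Await call(), retrying transient errors with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return await call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * 2 ** attempt  # 1x, 2x, 4x, ... the base delay
            await asyncio.sleep(delay + random.uniform(0, delay / 2))
    raise AssertionError("unreachable: the loop always returns or raises")


# Usage with a stand-in call that fails twice before succeeding.
calls = 0


async def flaky_model_call() -> str:
    global calls
    calls += 1
    if calls < 3:
        raise ConnectionError("transient network error")
    return "ok"


result = asyncio.run(with_retries(flaky_model_call, base_delay=0.01))
print(result, calls)  # ok 3
```

The jitter spreads retries from many clients over time, so they don't all hit a recovering service at the same moment.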
