In as we speak’s quickly evolving AI panorama, organizations face a vital problem: the right way to harness the transformative energy of generative AI whereas sustaining strong safety and compliance requirements. As enterprises deploy more and more subtle GenAI purposes, the necessity for complete safety throughout your entire AI lifecycle has by no means been extra pressing.

At present, Cisco is happy to announce a local integration of Cisco AI Protection runtime guardrails with NVIDIA NeMo Guardrails, part of NVIDIA Enterprise software program, bringing collectively two highly effective options to maximise cybersecurity for enterprise AI deployments.

Why Guardrails Matter: The Vital First Line of Protection

Generative AI purposes are essentially completely different from conventional software program. They’re dynamic, probabilistic, and may produce surprising outputs primarily based on consumer interactions. With out correct safeguards, GenAI purposes can generate dangerous, biased, or inappropriate content material, leak delicate data by way of immediate injection assaults, hallucinate details, deviate from meant use instances, or violate regulatory compliance necessities.

Runtime guardrails function the important security mechanisms that monitor and management AI conduct in real-time. Consider them as clever visitors controllers that guarantee your AI purposes keep inside secure, compliant boundaries whereas sustaining efficiency and consumer expertise. As organizations transfer from AI experimentation to manufacturing deployments, these guardrails have change into non-negotiable parts of any accountable AI technique.

Guardrails are solely as efficient as their underlying detection fashions and the frequency of updates made to seize the newest risk intelligence. Enterprises shouldn’t depend on the built-in guardrails created by mannequin builders, as they’re completely different for every mannequin, largely optimized for efficiency over safety, and alignment is well damaged when adjustments to the mannequin are made. Enterprise guardrails, comparable to these by Cisco AI Protection and NVIDIA NeMo, present a typical layer of safety throughout fashions, permitting AI groups to focus totally on improvement.

NVIDIA NeMo Guardrails: A Main Open-Supply Toolkit

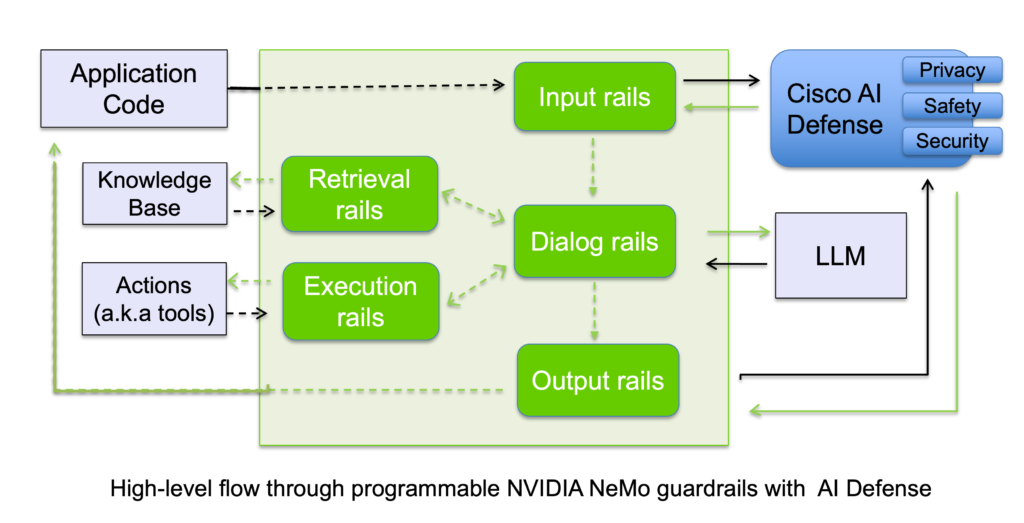

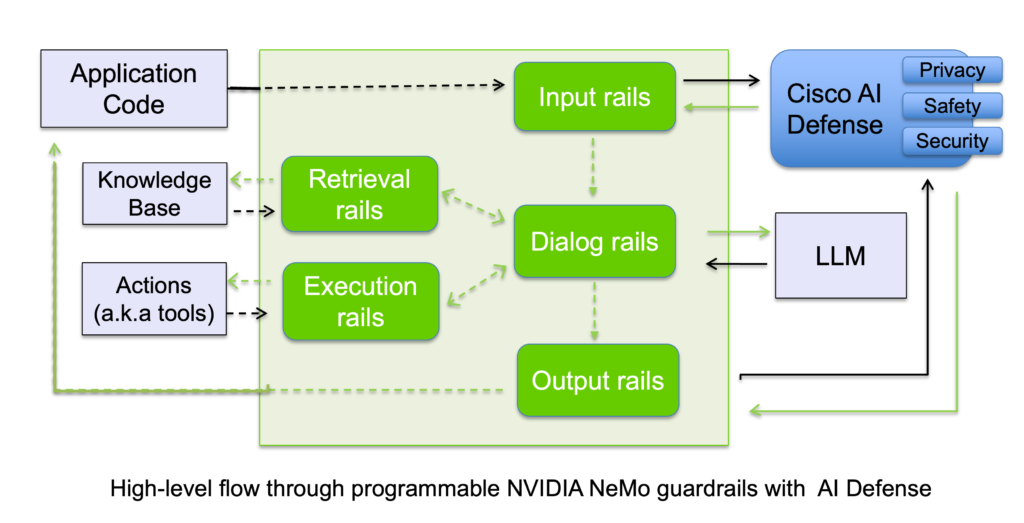

NVIDIA NeMo Guardrails has emerged as a number one open-source framework for constructing programmable guardrails for generative AI purposes. This highly effective toolkit allows builders to outline enter and output boundaries for LLM interactions, implement topical guardrails to maintain conversations on observe, implement fact-checking and hallucination prevention, and management dialogue movement and consumer interplay patterns. As a framework-level answer, NeMo Guardrails gives the structural basis for AI security, giving builders the pliability to outline guidelines and insurance policies tailor-made to their particular use instances.

The framework’s widespread adoption throughout the trade displays its strong structure and developer-friendly strategy. Organizations respect the power to create customized guardrails that align with their distinctive enterprise necessities whereas leveraging NVIDIA AI infrastructure and acceleration.

Cisco AI Protection: A Complete AI Safety Answer

Runtime guardrails, whereas important, are only one piece of the AI safety puzzle. Cisco AI Protection takes a holistic strategy to AI safety, defending organizations throughout your entire AI lifecycle from improvement by way of manufacturing.

AI Protection makes use of a three-step framework to guard towards AI security, safety and privateness dangers:

- Discovery: robotically stock AI belongings together with fashions, brokers, information bases, and vector shops throughout your distributed cloud environments.

- Detection: uncover mannequin and utility vulnerabilities, together with provide chain dangers and susceptibility to jailbreaks, unsafe responses, and extra.

- Safety: defend runtime purposes with proprietary security, safety, and privateness guardrails, up to date with the newest risk intelligence.

The safety journey doesn’t finish at deployment. Cisco AI Protection gives steady validation by way of ongoing testing to determine new vulnerabilities in fashions and purposes. As new dangers emerge, further guardrails will be launched to handle these or fashions will be swapped. This ensures that deployed fashions keep their safety posture over time and proceed to fulfill inside and exterior requirements.

Relatively than leaving safety implementation to particular person utility groups, organizations can implement enterprise-wide runtime controls that align AI conduct with company safety and compliance necessities. By means of its integration with NVIDIA NeMo Guardrails, Cisco AI Protection makes these controls seamlessly accessible inside developer workflows, embedding safety as a local a part of the AI improvement lifecycle. This steady validation and centralized safety mannequin ensures deployed fashions and purposes keep a robust safety posture over time, whereas vulnerability reviews translate findings into clear insights mapped to trade and regulatory requirements.

Higher Collectively: Boosting Cybersecurity Defenses with Cisco Accelerated by NVIDIA

The native integration of Cisco AI Protection with NVIDIA NeMo Guardrails delivers highly effective cybersecurity for enterprise AI deployments. Relatively than counting on a single layer of safety, this integration provides builders the pliability to mix the simplest guardrails for every facet of their purposes—whether or not centered on security, safety, privateness, or conversational movement and subject management.

By bringing collectively NVIDIA NeMo Guardrails’ open-source framework for outlining and imposing conversational and contextual boundaries with Cisco AI Protection’s enterprise-grade runtime guardrails for safeguarding knowledge, detecting threats, and sustaining compliance, organizations achieve a modular and interoperable structure for shielding AI in manufacturing.

This collaboration allows builders to combine and match guardrails throughout domains, guaranteeing that AI techniques behave responsibly, securely, and constantly—with out sacrificing efficiency or agility. NeMo Guardrails gives the inspiration for structured, customizable controls inside AI workflows, whereas Cisco AI Protection provides repeatedly up to date runtime safety powered by real-time risk intelligence.

Collectively, they permit coordinated guardrail layers that stretch throughout the AI lifecycle—from how purposes handle delicate data to how they work together with customers—making a unified and adaptable protection technique. With this native integration, enterprises can innovate sooner whereas sustaining confidence that their AI techniques are protected by the best safeguards for each stage of operation.

Cisco Safe AI Manufacturing unit with NVIDIA

Understanding that each group has distinctive infrastructure necessities and safety insurance policies, Cisco and NVIDIA have partnered to supply a validated reference structure to securely energy AI workloads in a buyer’s surroundings. We provide two deployment choices for the information airplane: cloud-based or on-premises with Cisco AI PODs.

At present, we’re asserting orderability of Cisco AI Protection on AI PODs with our knowledge airplane deployed on-premises. This can be deployed alongside NVIDIA NeMo Guardrails. Which means corporations dealing with strict knowledge sovereignty necessities, compliance mandates, or operational wants can obtain AI utility safety for on-premises deployments.

The Path Ahead: Safe AI Innovation

As organizations speed up their AI transformation journeys, safety can’t be an afterthought. The native integration of Cisco AI Protection with NVIDIA NeMo Guardrails, delivered by way of Cisco Safe AI Manufacturing unit, represents a brand new commonplace for enterprise AI safety—one which doesn’t drive you to decide on between innovation and safety.

With this highly effective mixture, you possibly can deploy GenAI purposes with confidence, understanding that a number of layers of protection are working in live performance to guard your group. You may meet essentially the most stringent safety and compliance necessities with out sacrificing efficiency or consumer expertise. You keep the pliability to evolve your infrastructure as your wants change and as AI expertise advances. Maybe most significantly, you leverage the mixed experience of two AI trade leaders who’re each dedicated to creating AI secure, safe, and accessible for enterprises.