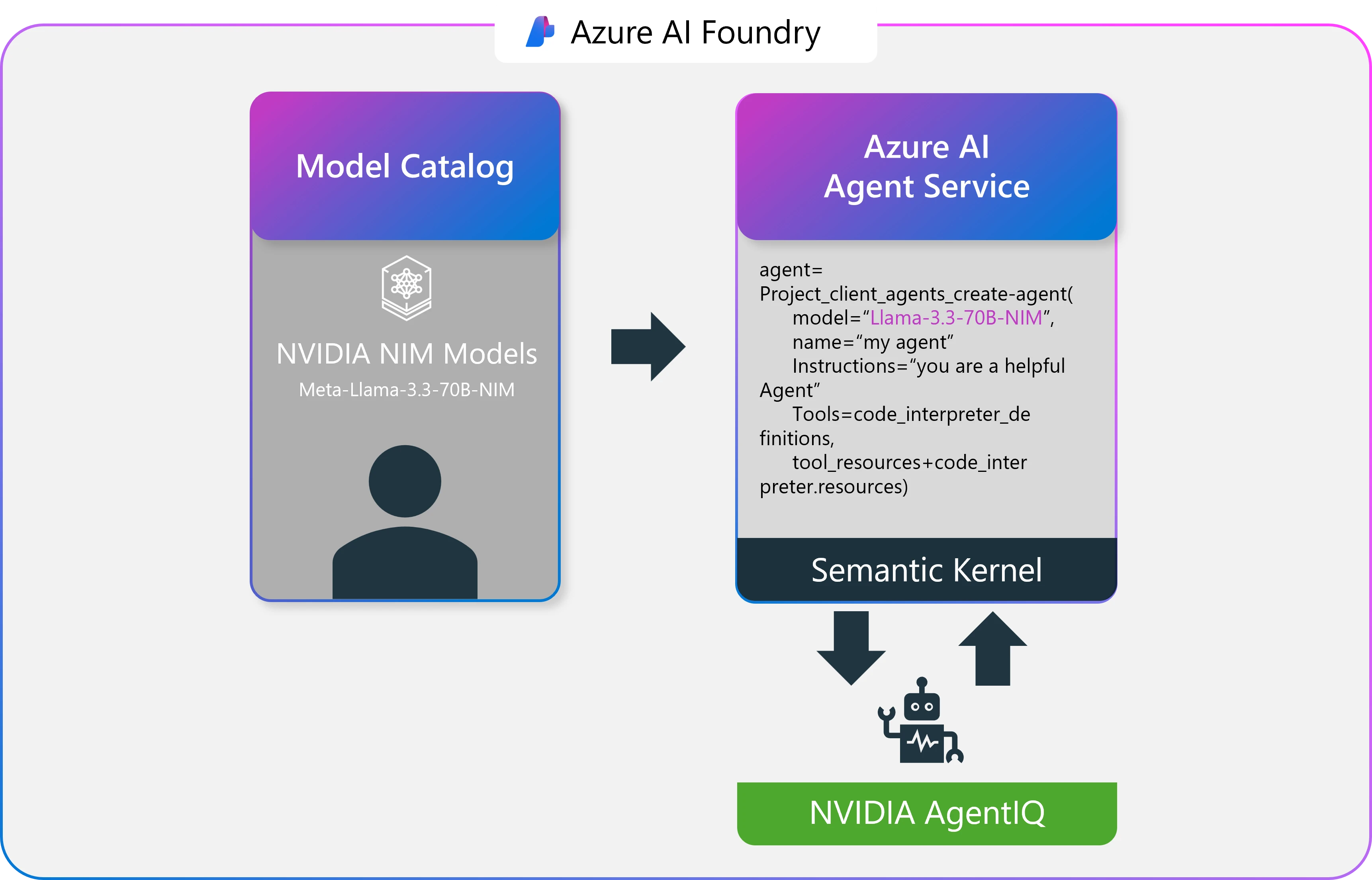

Microsoft and NVIDIA have integrated NVIDIA NIM microservices and the NVIDIA AgentIQ toolkit into Azure AI Foundry, unlocking unprecedented efficiency, performance, and cost optimization for your AI projects.

I’m excited to share a major leap forward in how we develop and deploy AI. In collaboration with NVIDIA, we’ve integrated NVIDIA NIM microservices and the NVIDIA AgentIQ toolkit into Azure AI Foundry, unlocking unprecedented efficiency, performance, and cost optimization for your AI projects.

A new era of AI efficiency

In today’s fast-paced digital landscape, scaling AI applications demands more than just innovation: it requires streamlined processes that deliver rapid time-to-market without compromising on performance. With enterprise AI projects often taking 9 to 12 months to move from conception to production, every efficiency gain counts. Our integration is designed to change that by simplifying every step of the AI development lifecycle.

NVIDIA NIM on Azure AI Foundry

NVIDIA NIM™, part of the NVIDIA AI Enterprise software suite, is a set of easy-to-use microservices engineered for secure, reliable, and high-performance AI inferencing. Leveraging robust technologies such as NVIDIA Triton Inference Server™, TensorRT™, TensorRT-LLM, and PyTorch, NIM microservices are built to scale seamlessly on managed Azure compute.

They provide:

- Zero-configuration deployment: Get up and running quickly with out-of-the-box optimization.

- Seamless Azure integration: Works effortlessly with Azure AI Agent Service and Semantic Kernel.

- Enterprise-grade reliability: Benefit from NVIDIA AI Enterprise support for continuous performance and security.

- Scalable inference: Tap into Azure’s NVIDIA accelerated infrastructure for demanding workloads.

- Optimized workflows: Accelerate applications ranging from large language models to advanced analytics.

Deploying these services is easy. With just a few clicks, whether selecting models like the Llama-3.3-70B-NIM or others from the model catalog in Azure AI Foundry, you can integrate them directly into your AI workflows and start building generative AI applications that work flawlessly within the Azure ecosystem.
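To make the integration step a little more concrete, here is a minimal sketch of how an application might prepare a request for a deployed NIM endpoint. It assumes the deployment exposes an OpenAI-style `/v1/chat/completions` route and accepts a bearer token; the endpoint URL, API key, and model identifier below are placeholders rather than values from this announcement, so check your own deployment details in Azure AI Foundry before relying on them.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    Assumption: the deployed endpoint serves /v1/chat/completions and uses
    bearer-token authentication. Adjust both to match your deployment.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        endpoint="https://example-nim-endpoint.invalid",  # hypothetical endpoint
        api_key="YOUR_API_KEY",                           # placeholder credential
        model="meta/llama-3.3-70b-instruct",              # assumed model identifier
        prompt="Summarize the benefits of managed inference.",
    )
    print(request.full_url)
    # To actually call the service, pass the request to urllib.request.urlopen
    # and parse the JSON response body.
```

Building the request separately from sending it keeps the example runnable without network access and makes the payload easy to inspect or log before it reaches the endpoint.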

Optimizing performance with NVIDIA AgentIQ

Once your NVIDIA NIM microservices are deployed, NVIDIA AgentIQ takes center stage. This open-source toolkit is designed to seamlessly connect, profile, and optimize teams of AI agents, enabling your systems to run at peak performance. AgentIQ delivers:

- Profiling and optimization: Leverage real-time telemetry to fine-tune AI agent placement, reducing latency and compute overhead.

- Dynamic inference enhancements: Continuously collect and analyze metadata, such as predicted output tokens per call, estimated time to next inference, and expected token lengths, to dynamically improve agent performance.

- Integration with Semantic Kernel: Direct integration with Azure AI Foundry Agent Service further empowers your agents with enhanced semantic reasoning and task execution capabilities.

This intelligent profiling not only reduces compute costs but also boosts accuracy and responsiveness, so that every part of your agentic AI workflow is optimized for success.
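As a rough illustration of the kind of per-call metadata described above, the sketch below records output tokens and latency for each agent call and derives simple averages from them. It is plain Python and does not use the AgentIQ API; the `AgentProfiler` class and its method names are hypothetical, and a simple running mean stands in for whatever prediction method the toolkit actually applies.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class CallRecord:
    output_tokens: int   # tokens generated by one agent call
    latency_s: float     # wall-clock time of that call, in seconds


@dataclass
class AgentProfiler:
    """Hypothetical helper: collects per-call telemetry, grouped by agent name."""
    records: dict = field(default_factory=dict)

    def record(self, agent: str, output_tokens: int, latency_s: float) -> None:
        self.records.setdefault(agent, []).append(CallRecord(output_tokens, latency_s))

    def predicted_output_tokens(self, agent: str) -> float:
        # Naive estimate: the mean of output tokens observed so far.
        return mean(r.output_tokens for r in self.records[agent])

    def mean_latency(self, agent: str) -> float:
        return mean(r.latency_s for r in self.records[agent])

    def slowest_agent(self) -> str:
        # The agent with the highest average latency is a candidate for tuning.
        return max(self.records, key=self.mean_latency)


if __name__ == "__main__":
    profiler = AgentProfiler()
    profiler.record("retriever", output_tokens=100, latency_s=0.5)
    profiler.record("retriever", output_tokens=200, latency_s=1.5)
    profiler.record("writer", output_tokens=400, latency_s=3.0)
    print(profiler.predicted_output_tokens("retriever"))
    print(profiler.slowest_agent())
```

Even this simple aggregation shows where a multi-agent workflow spends its time, which is the starting point for decisions about agent placement and capacity.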

In addition, we will soon be integrating the NVIDIA Llama Nemotron Reason open reasoning model. NVIDIA Llama Nemotron Reason is a powerful AI model family designed for advanced reasoning. According to NVIDIA, Nemotron excels at coding, complex math, and scientific reasoning while understanding user intent and seamlessly calling tools like search and translations to accomplish tasks.

Real-world impact

Industry leaders are already witnessing the benefits of these innovations.

Drew McCombs, Vice President, Cloud and Analytics at Epic, noted:

The launch of NVIDIA NIM microservices in Azure AI Foundry offers a secure and efficient way for Epic to deploy open-source generative AI models that improve patient care, boost clinician and operational efficiency, and uncover new insights to drive medical innovation. In collaboration with UW Health and UC San Diego Health, we’re also researching methods to evaluate clinical summaries with these advanced models. Together, we’re using the latest AI technology in ways that truly improve the lives of clinicians and patients.

Epic’s experience underscores how our integrated solution can drive transformational change, not just in healthcare but across every industry where high-performance AI is a game changer. As noted by Jon Sigler, EVP, Platform and AI at ServiceNow:

This combination of ServiceNow’s AI platform with NVIDIA NIM and Microsoft Azure AI Foundry and Azure AI Agent Service helps us bring to market industry-specific, out-of-the-box AI agents, delivering full-stack agentic AI solutions to help resolve problems faster, deliver great customer experiences, and accelerate improvements in organizations’ productivity and efficiency.

Unlock AI-powered innovation

By combining the robust deployment capabilities of NVIDIA NIM with the dynamic optimization of NVIDIA AgentIQ, Azure AI Foundry provides a turnkey solution for building, deploying, and scaling enterprise-grade agentic applications. This integration can accelerate AI deployments, enhance agentic workflows, and reduce infrastructure costs, enabling you to focus on what truly matters: driving innovation.

Ready to accelerate your AI journey?

Deploy NVIDIA NIM microservices and optimize your AI agents with the NVIDIA AgentIQ toolkit on Azure AI Foundry. Learn more about the Azure AI Foundry model catalog.

Let’s build a smarter, faster, and more efficient future together.