We deployed 100 reinforcement studying (RL)-controlled vehicles into rush-hour freeway visitors to easy congestion and scale back gas consumption for everybody. Our purpose is to deal with “stop-and-go” waves, these irritating slowdowns and speedups that normally haven’t any clear trigger however result in congestion and important power waste. To coach environment friendly flow-smoothing controllers, we constructed quick, data-driven simulations that RL brokers work together with, studying to maximise power effectivity whereas sustaining throughput and working safely round human drivers.

General, a small proportion of well-controlled autonomous autos (AVs) is sufficient to considerably enhance visitors move and gas effectivity for all drivers on the street. Furthermore, the skilled controllers are designed to be deployable on most fashionable autos, working in a decentralized method and counting on normal radar sensors. In our newest paper, we discover the challenges of deploying RL controllers on a large-scale, from simulation to the sphere, throughout this 100-car experiment.

The challenges of phantom jams

A stop-and-go wave shifting backwards by freeway visitors.

Should you drive, you’ve absolutely skilled the frustration of stop-and-go waves, these seemingly inexplicable visitors slowdowns that seem out of nowhere after which out of the blue clear up. These waves are sometimes brought on by small fluctuations in our driving conduct that get amplified by the move of visitors. We naturally alter our pace primarily based on the car in entrance of us. If the hole opens, we pace as much as sustain. In the event that they brake, we additionally decelerate. However as a consequence of our nonzero response time, we would brake only a bit tougher than the car in entrance. The subsequent driver behind us does the identical, and this retains amplifying. Over time, what began as an insignificant slowdown turns right into a full cease additional again in visitors. These waves transfer backward by the visitors stream, resulting in important drops in power effectivity as a consequence of frequent accelerations, accompanied by elevated CO2 emissions and accident danger.

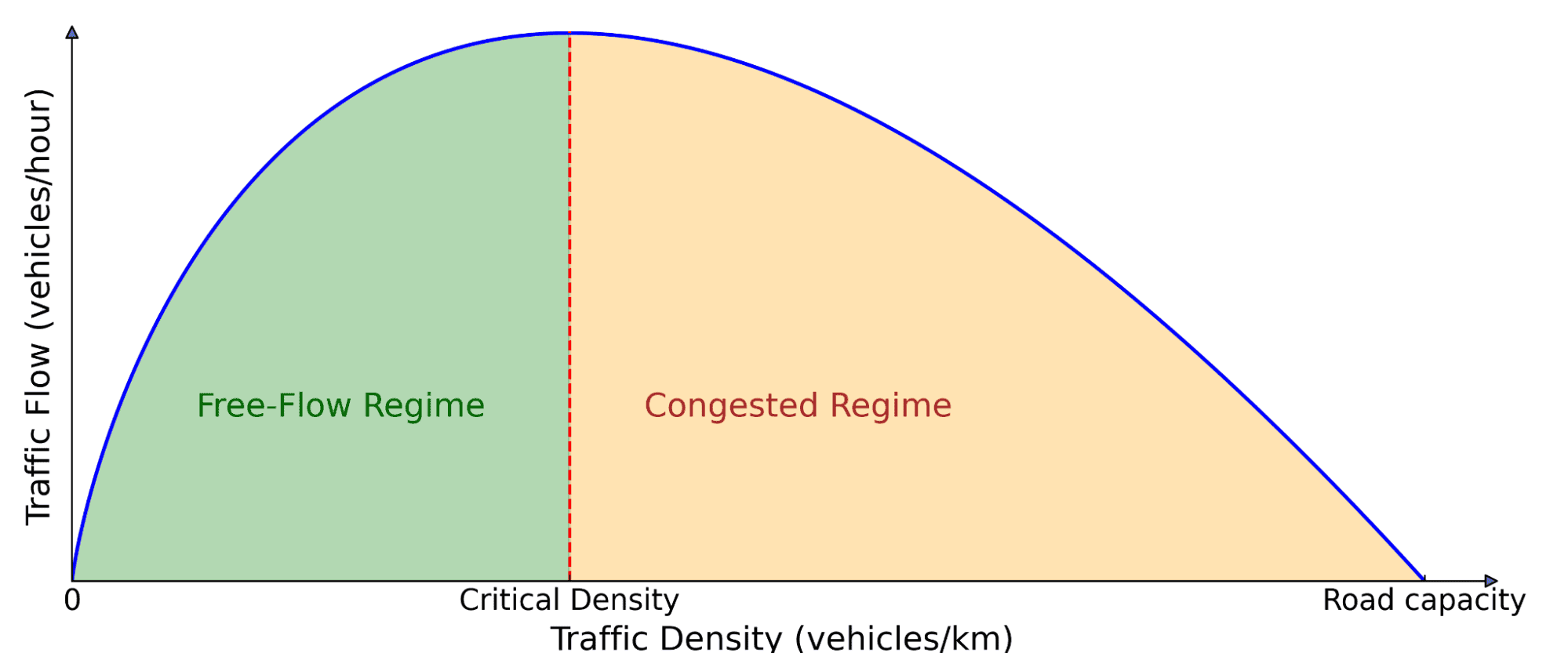

And this isn’t an remoted phenomenon! These waves are ubiquitous on busy roads when the visitors density exceeds a essential threshold. So how can we deal with this downside? Conventional approaches like ramp metering and variable pace limits try to handle visitors move, however they typically require expensive infrastructure and centralized coordination. A extra scalable strategy is to make use of AVs, which may dynamically alter their driving conduct in real-time. Nevertheless, merely inserting AVs amongst human drivers isn’t sufficient: they need to additionally drive in a better method that makes visitors higher for everybody, which is the place RL is available in.

Basic diagram of visitors move. The variety of vehicles on the street (density) impacts how a lot visitors is shifting ahead (move). At low density, including extra vehicles will increase move as a result of extra autos can move by. However past a essential threshold, vehicles begin blocking one another, resulting in congestion, the place including extra vehicles truly slows down general motion.

Reinforcement studying for wave-smoothing AVs

RL is a strong management strategy the place an agent learns to maximise a reward sign by interactions with an setting. The agent collects expertise by trial and error, learns from its errors, and improves over time. In our case, the setting is a mixed-autonomy visitors state of affairs, the place AVs study driving methods to dampen stop-and-go waves and scale back gas consumption for each themselves and close by human-driven autos.

Coaching these RL brokers requires quick simulations with lifelike visitors dynamics that may replicate freeway stop-and-go conduct. To attain this, we leveraged experimental information collected on Interstate 24 (I-24) close to Nashville, Tennessee, and used it to construct simulations the place autos replay freeway trajectories, creating unstable visitors that AVs driving behind them study to easy out.

Simulation replaying a freeway trajectory that displays a number of stop-and-go waves.

We designed the AVs with deployment in thoughts, guaranteeing that they’ll function utilizing solely fundamental sensor details about themselves and the car in entrance. The observations encompass the AV’s pace, the pace of the main car, and the house hole between them. Given these inputs, the RL agent then prescribes both an instantaneous acceleration or a desired pace for the AV. The important thing benefit of utilizing solely these native measurements is that the RL controllers could be deployed on most fashionable autos in a decentralized method, with out requiring further infrastructure.

Reward design

Essentially the most difficult half is designing a reward perform that, when maximized, aligns with the completely different aims that we want the AVs to realize:

- Wave smoothing: Scale back stop-and-go oscillations.

- Vitality effectivity: Decrease gas consumption for all autos, not simply AVs.

- Security: Guarantee cheap following distances and keep away from abrupt braking.

- Driving consolation: Keep away from aggressive accelerations and decelerations.

- Adherence to human driving norms: Guarantee a “regular” driving conduct that doesn’t make surrounding drivers uncomfortable.

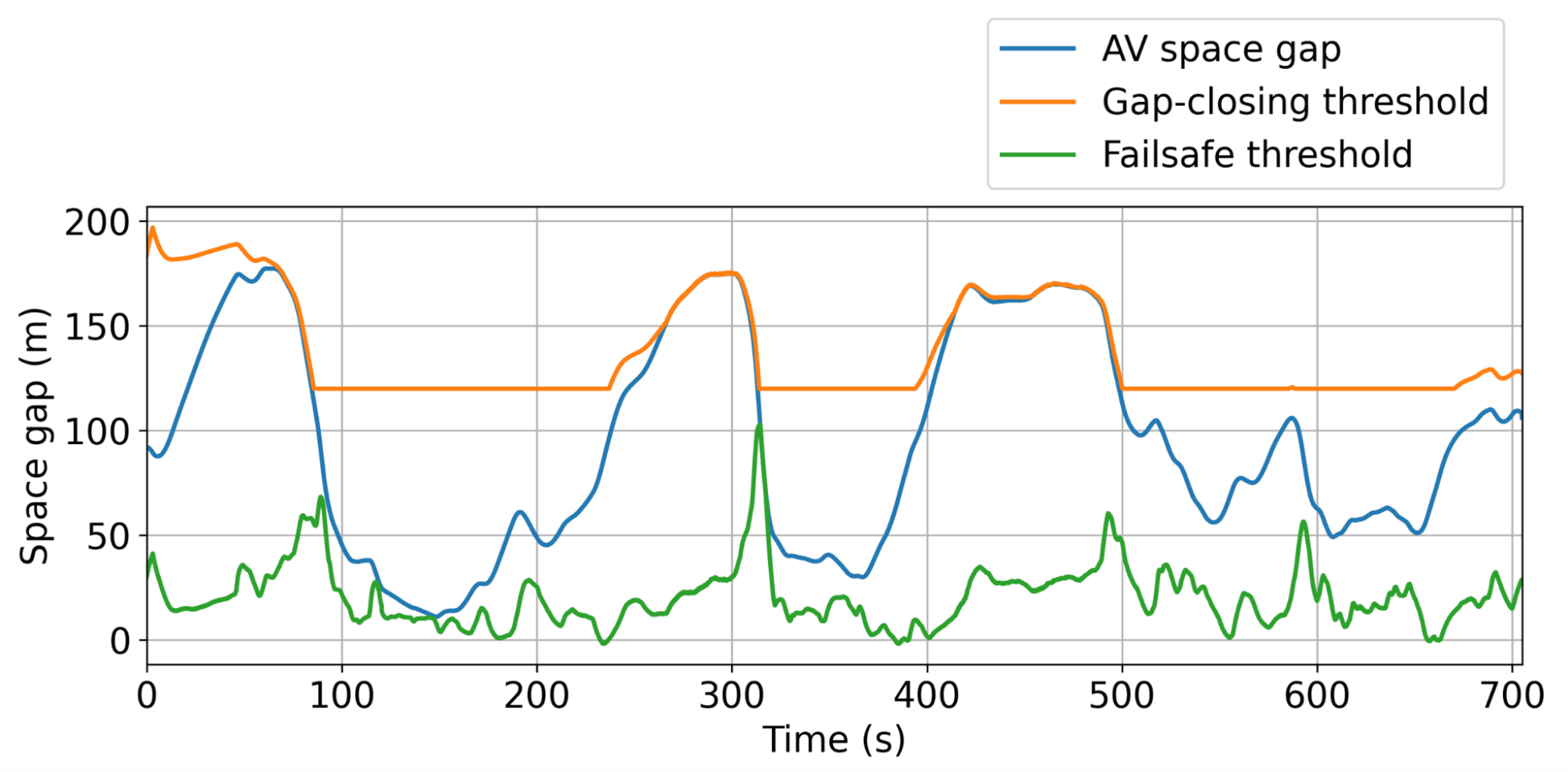

Balancing these aims collectively is troublesome, as appropriate coefficients for every time period should be discovered. As an illustration, if minimizing gas consumption dominates the reward, RL AVs study to return to a cease in the course of the freeway as a result of that’s power optimum. To forestall this, we launched dynamic minimal and most hole thresholds to make sure secure and cheap conduct whereas optimizing gas effectivity. We additionally penalized the gas consumption of human-driven autos behind the AV to discourage it from studying a egocentric conduct that optimizes power financial savings for the AV on the expense of surrounding visitors. General, we goal to strike a steadiness between power financial savings and having an inexpensive and secure driving conduct.

Simulation outcomes

Illustration of the dynamic minimal and most hole thresholds, inside which the AV can function freely to easy visitors as effectively as attainable.

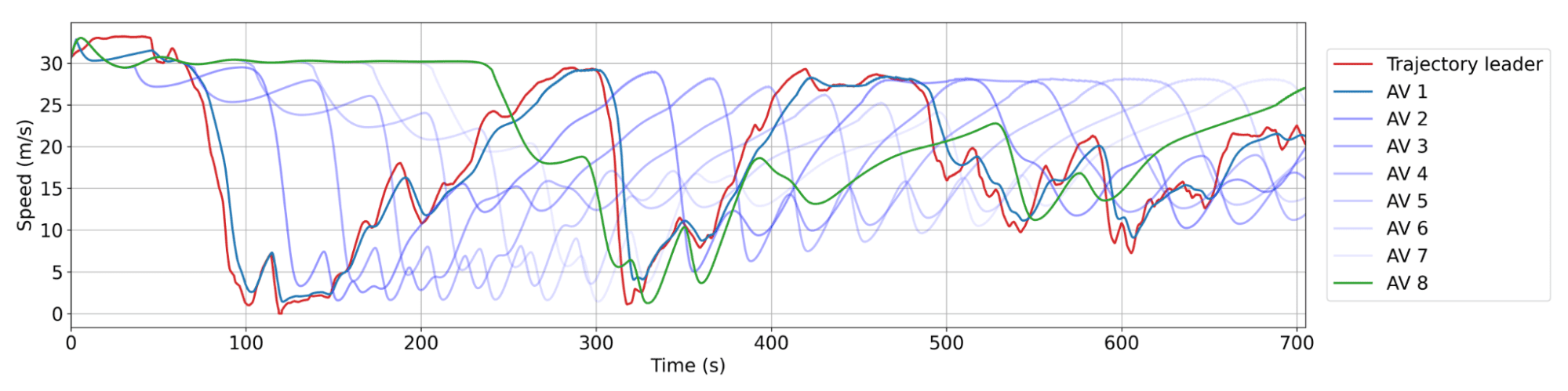

The everyday conduct discovered by the AVs is to take care of barely bigger gaps than human drivers, permitting them to soak up upcoming, probably abrupt, visitors slowdowns extra successfully. In simulation, this strategy resulted in important gas financial savings of as much as 20% throughout all street customers in probably the most congested eventualities, with fewer than 5% of AVs on the street. And these AVs don’t need to be particular autos! They’ll merely be normal client vehicles geared up with a sensible adaptive cruise management (ACC), which is what we examined at scale.

Smoothing conduct of RL AVs. Purple: a human trajectory from the dataset. Blue: successive AVs within the platoon, the place AV 1 is the closest behind the human trajectory. There may be usually between 20 and 25 human autos between AVs. Every AV doesn’t decelerate as a lot or speed up as quick as its chief, resulting in lowering wave amplitude over time and thus power financial savings.

100 AV area take a look at: deploying RL at scale

Our 100 vehicles parked at our operational middle throughout the experiment week.

Given the promising simulation outcomes, the pure subsequent step was to bridge the hole from simulation to the freeway. We took the skilled RL controllers and deployed them on 100 autos on the I-24 throughout peak visitors hours over a number of days. This huge-scale experiment, which we referred to as the MegaVanderTest, is the biggest mixed-autonomy traffic-smoothing experiment ever carried out.

Earlier than deploying RL controllers within the area, we skilled and evaluated them extensively in simulation and validated them on the {hardware}. General, the steps in the direction of deployment concerned:

- Coaching in data-driven simulations: We used freeway visitors information from I-24 to create a coaching setting with lifelike wave dynamics, then validate the skilled agent’s efficiency and robustness in quite a lot of new visitors eventualities.

- Deployment on {hardware}: After being validated in robotics software program, the skilled controller is uploaded onto the automobile and is ready to management the set pace of the car. We function by the car’s on-board cruise management, which acts as a lower-level security controller.

- Modular management framework: One key problem throughout the take a look at was not accessing the main car info sensors. To beat this, the RL controller was built-in right into a hierarchical system, the MegaController, which mixes a pace planner information that accounts for downstream visitors situations, with the RL controller as the ultimate determination maker.

- Validation on {hardware}: The RL brokers had been designed to function in an setting the place most autos had been human-driven, requiring strong insurance policies that adapt to unpredictable conduct. We confirm this by driving the RL-controlled autos on the street below cautious human supervision, making adjustments to the management primarily based on suggestions.

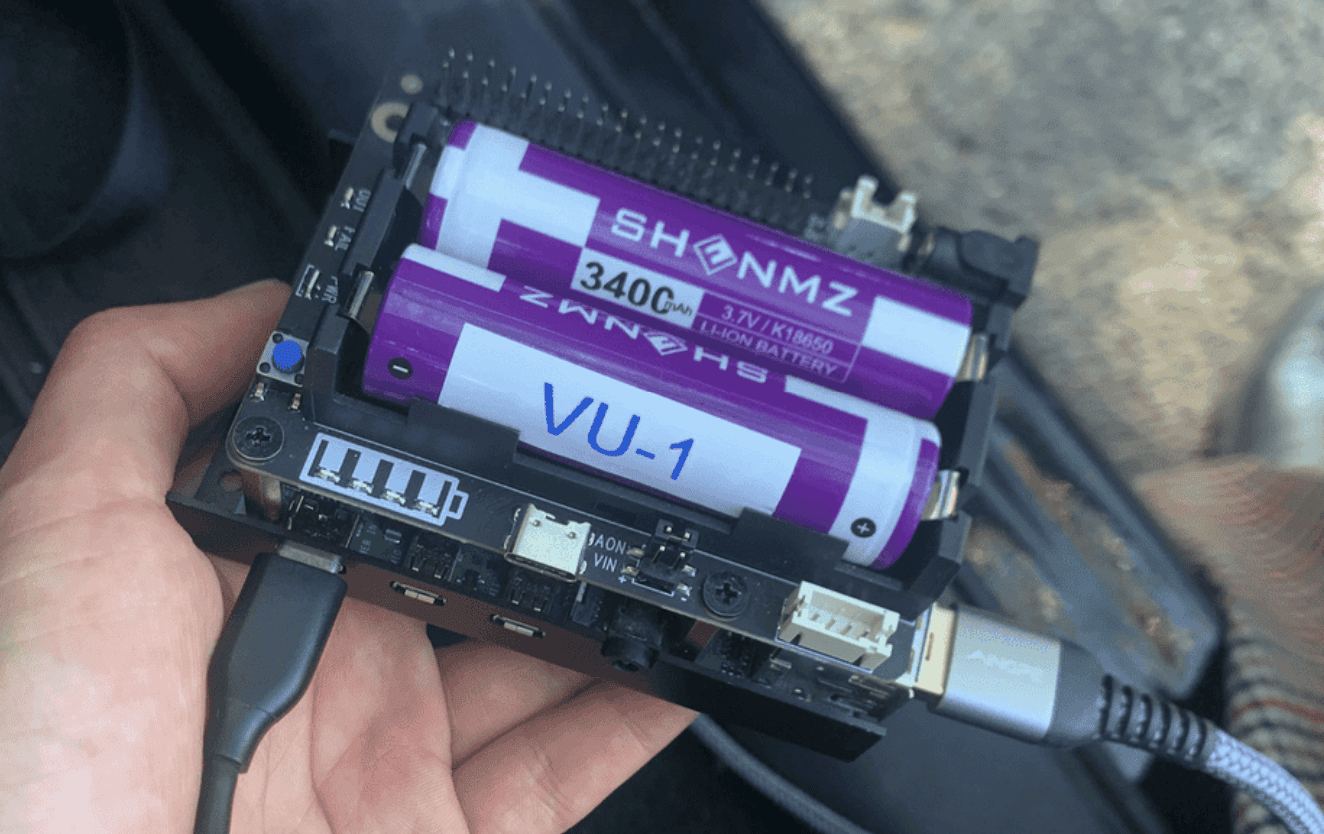

Every of the 100 vehicles is related to a Raspberry Pi, on which the RL controller (a small neural community) is deployed.

The RL controller immediately controls the onboard adaptive cruise management (ACC) system, setting its pace and desired following distance.

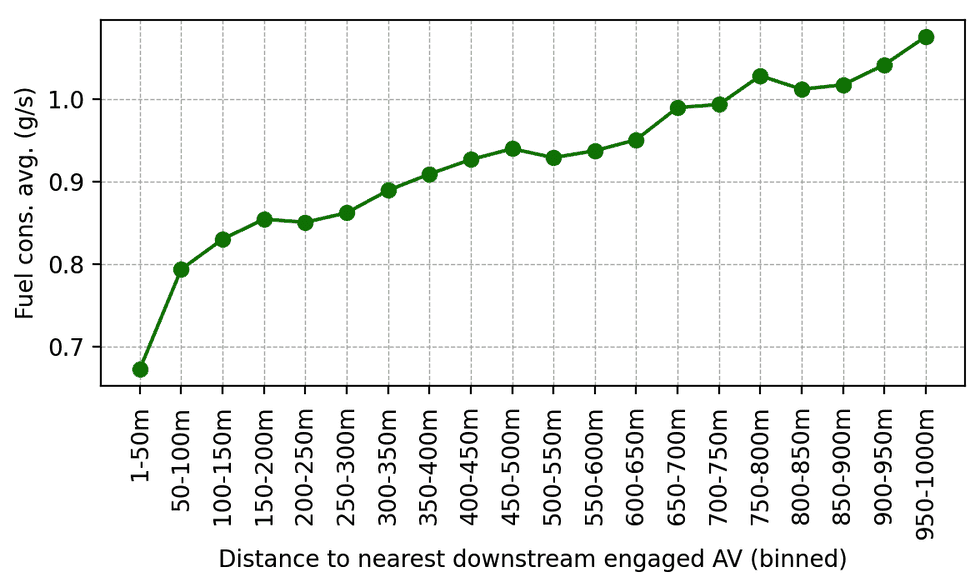

As soon as validated, the RL controllers had been deployed on 100 vehicles and pushed on I-24 throughout morning rush hour. Surrounding visitors was unaware of the experiment, guaranteeing unbiased driver conduct. Knowledge was collected throughout the experiment from dozens of overhead cameras positioned alongside the freeway, which led to the extraction of hundreds of thousands of particular person car trajectories by a pc imaginative and prescient pipeline. Metrics computed on these trajectories point out a pattern of lowered gas consumption round AVs, as anticipated from simulation outcomes and former smaller validation deployments. As an illustration, we will observe that the nearer persons are driving behind our AVs, the much less gas they seem to eat on common (which is calculated utilizing a calibrated power mannequin):

Common gas consumption as a perform of distance behind the closest engaged RL-controlled AV within the downstream visitors. As human drivers get additional away behind AVs, their common gas consumption will increase.

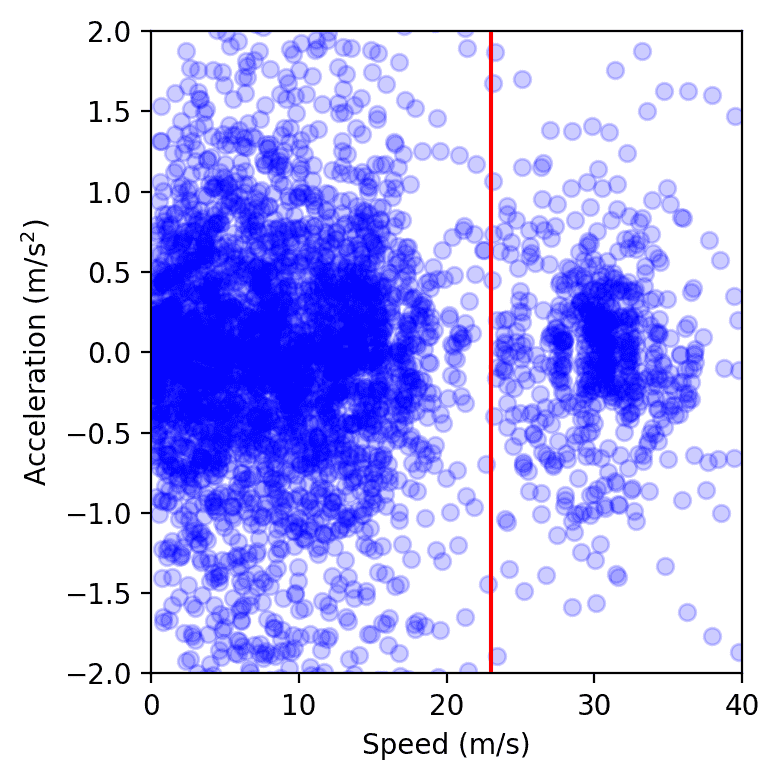

One other method to measure the influence is to measure the variance of the speeds and accelerations: the decrease the variance, the much less amplitude the waves ought to have, which is what we observe from the sphere take a look at information. General, though getting exact measurements from a considerable amount of digital camera video information is difficult, we observe a pattern of 15 to twenty% of power financial savings round our managed vehicles.

Knowledge factors from all autos on the freeway over a single day of the experiment, plotted in speed-acceleration house. The cluster to the left of the purple line represents congestion, whereas the one on the precise corresponds to free move. We observe that the congestion cluster is smaller when AVs are current, as measured by computing the realm of a delicate convex envelope or by becoming a Gaussian kernel.

Remaining ideas

The 100-car area operational take a look at was decentralized, with no specific cooperation or communication between AVs, reflective of present autonomy deployment, and bringing us one step nearer to smoother, extra energy-efficient highways. But, there’s nonetheless huge potential for enchancment. Scaling up simulations to be sooner and extra correct with higher human-driving fashions is essential for bridging the simulation-to-reality hole. Equipping AVs with further visitors information, whether or not by superior sensors or centralized planning, might additional enhance the efficiency of the controllers. As an illustration, whereas multi-agent RL is promising for bettering cooperative management methods, it stays an open query how enabling specific communication between AVs over 5G networks might additional enhance stability and additional mitigate stop-and-go waves. Crucially, our controllers combine seamlessly with present adaptive cruise management (ACC) techniques, making area deployment possible at scale. The extra autos geared up with sensible traffic-smoothing management, the less waves we’ll see on our roads, which means much less air pollution and gas financial savings for everybody!

Many contributors took half in making the MegaVanderTest occur! The total record is obtainable on the CIRCLES venture web page, together with extra particulars concerning the venture.

Learn extra: [paper]