Welcome to the first installment of a series of posts discussing the recently announced Cloudera AI Inference service.

Today, artificial intelligence (AI) and machine learning (ML) are more crucial than ever for organizations to turn data into a competitive advantage. To unlock the full potential of AI, however, businesses need to deploy models and AI applications at scale, in real time, and with low latency and high throughput. This is where the Cloudera AI Inference service comes in. It’s a powerful deployment environment that enables you to integrate and deploy generative AI (GenAI) and predictive models into your production environments, incorporating Cloudera’s enterprise-grade security, privacy, and data governance.

Over the next several weeks, we’ll explore the Cloudera AI Inference service in depth, providing you with a comprehensive introduction to its capabilities, benefits, and use cases.

In this series, we’ll delve into topics such as:

- A Cloudera AI Inference service architecture deep dive

- Key features and benefits of the service, and how it complements Cloudera AI Workbench

- Service configuration and sizing of model deployments based on projected workloads

- How to implement a Retrieval-Augmented Generation (RAG) system using the service

- Exploring different use cases for which the service is a great choice

If you’re interested in unlocking the full potential of AI and ML in your organization, stay tuned for our next posts, where we’ll dig deeper into the world of Cloudera AI Inference.

What is the Cloudera AI Inference service?

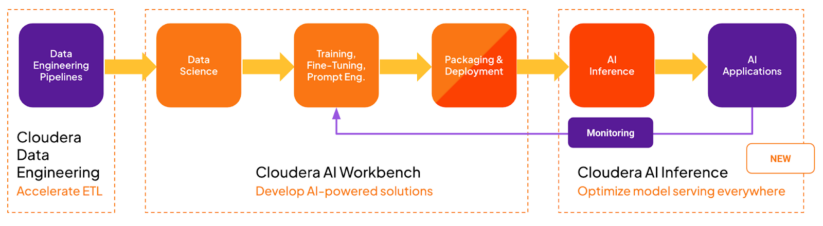

The Cloudera AI Inference service is a highly scalable, secure, and high-performance deployment environment for serving production AI models and related applications. The service is targeted at the production-serving end of the MLOps/LLMOps pipeline, as shown in the diagram above.

It complements Cloudera AI Workbench (previously known as Cloudera Machine Learning Workspace), a deployment environment that is more focused on the exploration, development, and testing phases of the MLOps workflow.

Why did we build it?

The emergence of GenAI, sparked by the release of ChatGPT, has facilitated the broad availability of high-quality, open-source large language models (LLMs). Services like Hugging Face and the ONNX Model Zoo have made it easy to access a wide range of pre-trained models. This availability highlights the need for a robust service that enables customers to seamlessly integrate and deploy pre-trained models from various sources into production environments. To meet the needs of our customers, the service must be highly:

- Secure – strong authentication and authorization, private, and safe

- Scalable – hundreds of models and applications with autoscaling capability

- Reliable – minimalist, fast recovery from failures

- Manageable – easy to operate, rolling updates

- Standards compliant – adopt market-leading API standards and model frameworks

- Resource efficient – fine-grained resource controls and scale to zero

- Observable – monitor system and model performance

- Performant – best-in-class latency, throughput, and concurrency

- Isolated – avoid noisy neighbors to provide strong service SLAs

These and other considerations led us to create the Cloudera AI Inference service as a new, purpose-built service for hosting all production AI models and related applications. It’s ideal for deploying always-on AI models and applications that serve business-critical use cases.

High-level architecture

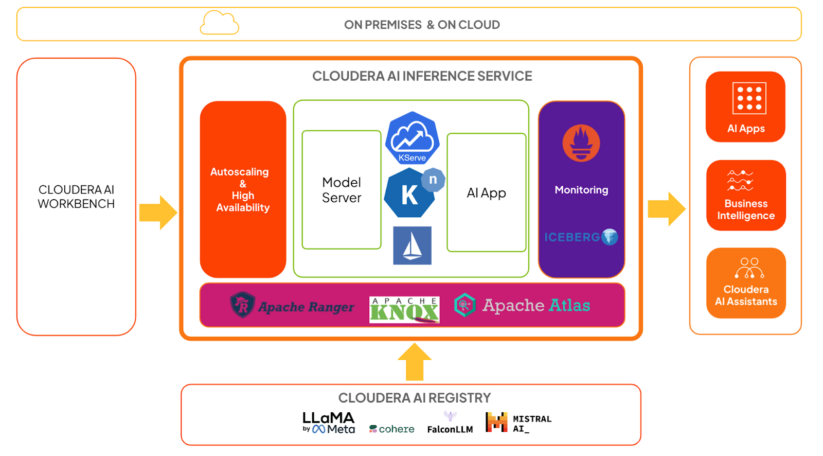

The diagram above shows a high-level architecture of the Cloudera AI Inference service in context:

- KServe and Knative handle model and application orchestration, respectively. Knative provides the framework for autoscaling, including scale to zero.

- Model servers are responsible for running models using highly optimized frameworks, which we will cover in detail in a later post.

- Istio provides the service mesh, and we take advantage of its extension capabilities to add strong authentication and authorization with Apache Knox and Apache Ranger.

- Inference request and response payloads ship asynchronously to Apache Iceberg tables. Teams can analyze the data using any BI tool for model monitoring and governance purposes.

- System metrics, such as inference latency and throughput, are available as Prometheus metrics. Data teams can use any metrics dashboarding tool to monitor these.

- Users can train and/or fine-tune models in the AI Workbench, and deploy them to the Cloudera AI Inference service for production use cases.

- Users can deploy trained models, including GenAI models or predictive deep learning models, directly to the Cloudera AI Inference service.

- Models hosted on the Cloudera AI Inference service can easily integrate with AI applications, such as chatbots, virtual assistants, RAG pipelines, real-time and batch predictions, and more, all with standard protocols like the OpenAI API and the Open Inference Protocol.

- Users can manage all of their models and applications on the Cloudera AI Inference service with common CI/CD systems using Cloudera service accounts, also known as machine users.

- The service can efficiently orchestrate hundreds of models and applications and scale each deployment to hundreds of replicas dynamically, provided compute and networking resources are available.
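
To make the two standard protocols named in the list above a little more concrete, here is a minimal Python sketch of what client requests could look like. It only builds the HTTP requests and does not send them. The request bodies follow the public Open Inference Protocol (V2) and OpenAI chat completions formats, but the base URL, the model names, the tensor name `input-0`, and the use of a bearer token are all assumptions made for illustration and are not taken from the service's documentation.

```python
import json
from urllib.request import Request

# Hypothetical values for illustration only; a real deployment supplies its own.
BASE_URL = "https://inference.example.com/my-endpoint"
TOKEN = "REPLACE_WITH_TOKEN"


def _post(url, body):
    """Wrap a JSON body in a POST request with an (assumed) bearer token header."""
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {TOKEN}",
        },
        method="POST",
    )


def open_inference_request(model_name, rows):
    """Build an Open Inference Protocol (V2) infer request for a 2-D float batch."""
    body = {
        "inputs": [
            {
                "name": "input-0",  # assumed tensor name; depends on the model
                "shape": [len(rows), len(rows[0])],
                "datatype": "FP32",
                # The protocol accepts tensor data flattened in row-major order.
                "data": [value for row in rows for value in row],
            }
        ]
    }
    return _post(f"{BASE_URL}/v2/models/{model_name}/infer", body)


def openai_chat_request(model_name, prompt):
    """Build an OpenAI-style chat completions request with a single user message."""
    body = {
        "model": model_name,
        "messages": [{"role": "user", "content": prompt}],
    }
    return _post(f"{BASE_URL}/v1/chat/completions", body)
```

Passing either returned object to `urllib.request.urlopen` would send it. The first form would typically suit predictive models that take numeric tensors, and the second GenAI models that take chat messages.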

Conclusion

In this first post, we introduced the Cloudera AI Inference service, explained why we built it, and took a high-level tour of its architecture. We also outlined many of its capabilities. We will dive deeper into the architecture in our next post, so please stay tuned.