Founded in 2017, Logically is a leader in using AI to augment clients’ intelligence capability. By processing and analyzing vast amounts of data from websites, social platforms, and other digital sources, Logically identifies potential risks, emerging threats, and critical narratives, organizing them into actionable insights that cybersecurity teams, product managers, and engagement leaders can act on swiftly and strategically.

GPU acceleration is a key component of Logically’s platform, enabling the detection of narratives to meet the requirements of highly regulated entities. By using GPUs, Logically has been able to significantly reduce training and inference times, allowing for data processing at the scale required to combat the spread of false narratives on social media and the internet more broadly. The current scarcity of GPU resources also means that optimizing their utilization is key to achieving optimal latency and the overall success of AI projects.

Logically observed their inference times increasing steadily as their data volumes grew, and therefore needed to better understand and optimize their cluster usage. Bigger GPU clusters ran models faster but were underutilized. This observation led to the idea of using Spark’s distribution capabilities to perform GPU model inference as efficiently as possible, and to determine whether an alternative configuration was required to unlock a cluster’s full potential.

By tuning concurrent tasks per executor and pushing more tasks per GPU, Logically was able to reduce the runtime of their flagship complex models by up to 40%. This blog explores how.

The key levers used were:

1. Fractional GPU Allocation: Controlling the GPU allocation per task when Spark schedules GPU resources allows a GPU to be split evenly across the tasks on each executor. This makes it possible to overlap I/O and computation for optimal GPU utilization.

The default Spark configuration is one task per GPU, as presented below. This means that unless a lot of data is pushed into each task, the GPU will likely be underutilized.

By setting spark.task.resource.gpu.amount to values below 1, such as 0.5 or 0.25, Logically achieved a better distribution of each GPU across tasks. The largest improvements were seen by experimenting with this setting. By reducing the value of this configuration, more tasks can run in parallel on each GPU, allowing the inference job to finish faster.
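To make the effect of a fractional setting concrete, here is a minimal sketch that derives how many tasks can share one GPU for a given spark.task.resource.gpu.amount. The helper names gpu_task_slots and fractional_gpu_conf are hypothetical, and the sketch assumes one GPU per executor and that Spark floors 1/amount into whole task slots, as the Spark configuration docs describe for fractional amounts.

```python
import math


def gpu_task_slots(task_gpu_amount):
    """Tasks that can share one GPU for a given spark.task.resource.gpu.amount.

    Assumption: Spark floors 1/amount into whole slots (e.g. 0.25 -> 4 tasks).
    """
    if not 0 < task_gpu_amount <= 1:
        raise ValueError("this sketch only covers amounts in (0, 1]")
    return math.floor(1 / task_gpu_amount)


def fractional_gpu_conf(task_gpu_amount):
    """Spark settings for one GPU per executor, shared by several tasks."""
    return {
        # Assumption: each executor owns exactly one GPU.
        "spark.executor.resource.gpu.amount": "1",
        "spark.task.resource.gpu.amount": str(task_gpu_amount),
    }


if __name__ == "__main__":
    for amount in (1, 0.5, 0.25):
        print(amount, gpu_task_slots(amount), fractional_gpu_conf(amount))
```

Each key/value pair in the returned dictionary can be passed to Spark as a --conf option or set in the cluster’s Spark configuration.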

Experimenting with this configuration is a good first step and often has the most impact with the least tweaking. In the following sections, we’ll go a bit deeper into how Spark works and the configurations we tweaked.

2. Concurrent Task Execution: Ensuring that the cluster runs more than one concurrent task per executor enables better parallelization.

In standalone mode, if spark.executor.cores is not explicitly set, each executor will use all available cores on the worker node, preventing an even distribution of GPU resources.

The spark.executor.cores setting can be chosen to correspond to the spark.task.resource.gpu.amount setting. For instance, spark.executor.cores=2 allows two tasks to run on each executor. Given a GPU resource split of spark.task.resource.gpu.amount=0.5, these two concurrent tasks would run on the same GPU.

Logically achieved optimal results by running one executor per GPU and evenly distributing the cores among the executors. For instance, a cluster with 24 cores and 4 GPUs would run with six cores (--conf spark.executor.cores=6) per executor. This controls the number of tasks that Spark puts on an executor at once.
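The arithmetic above can be sketched as follows. The function names are hypothetical, and the sketch assumes one GPU per executor and the default of one CPU core per task (spark.task.cpus=1). It also reflects our understanding that Spark runs only as many concurrent tasks as the most limiting resource allows, so the core count and the GPU fraction both cap concurrency.

```python
import math


def cores_per_executor(total_cores, total_gpus):
    """Split the cluster's cores evenly across one executor per GPU."""
    if total_gpus <= 0 or total_cores % total_gpus != 0:
        raise ValueError("cores must divide evenly across the GPUs")
    return total_cores // total_gpus


def concurrent_tasks(executor_cores, task_gpu_amount, task_cpus=1):
    """Concurrent tasks per executor, assuming one GPU per executor.

    The smaller of the CPU-based and GPU-based slot counts wins.
    """
    slots_by_cpu = executor_cores // task_cpus
    slots_by_gpu = math.floor(1 / task_gpu_amount)
    return min(slots_by_cpu, slots_by_gpu)


if __name__ == "__main__":
    cores = cores_per_executor(total_cores=24, total_gpus=4)
    print("spark.executor.cores =", cores)
    for amount in (0.5, 0.25):
        print(amount, "->", concurrent_tasks(cores, amount), "tasks per executor")
```

Under these assumptions, six cores per executor only translate into six concurrent tasks if the GPU fraction is small enough to provide six slots as well; otherwise the GPU fraction is the limit.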

3. Coalesce: Merging existing partitions into a smaller number reduces the overhead of managing a large number of partitions and allows more data to fit into each partition. The relevance of coalesce() to GPUs revolves around data distribution and optimization for efficient GPU utilization. GPUs excel at processing large datasets due to their highly parallel architecture, which can execute many operations simultaneously. For efficient GPU utilization, we need to understand the following:

- Larger partitions of data are often better because GPUs can handle massively parallel workloads. Larger partitions also lead to better GPU memory utilization, as long as they fit into the available GPU memory. If this limit is exceeded, you may run into out-of-memory (OOM) errors.

- Under-utilized GPUs (due to small partitions or small workloads; for simple reads, Spark aims for a partition size of 128MB) may lead to inefficiencies, with many GPU cores remaining idle.

In these cases, coalesce() can help by reducing the number of partitions, ensuring that each partition contains more data, which is often preferable for GPU processing. Larger data chunks per partition mean that the GPU can be better utilized, using its parallel cores to process more data at once.

Coalesce combines existing partitions to create a smaller number of partitions, which can improve performance and resource utilization in certain scenarios. When possible, partitions are merged locally within an executor, avoiding a full shuffle of data across the cluster.

It’s worth noting that coalesce does not guarantee balanced partitions, which may lead to skewed data distribution. If you know that your data contains skew, then repartition() is preferred, as it performs a full shuffle that redistributes the data evenly across partitions. If repartition() works better for your use case, make sure you turn Adaptive Query Execution (AQE) off with the setting spark.conf.set("spark.databricks.optimizer.adaptive.enabled","false"). AQE can dynamically coalesce partitions, which may interfere with the optimal partitioning we are trying to achieve with this exercise.

By controlling the number of partitions, the Logically team was able to push more data into each partition. Setting the number of partitions to a multiple of the number of available GPUs resulted in better GPU utilization.

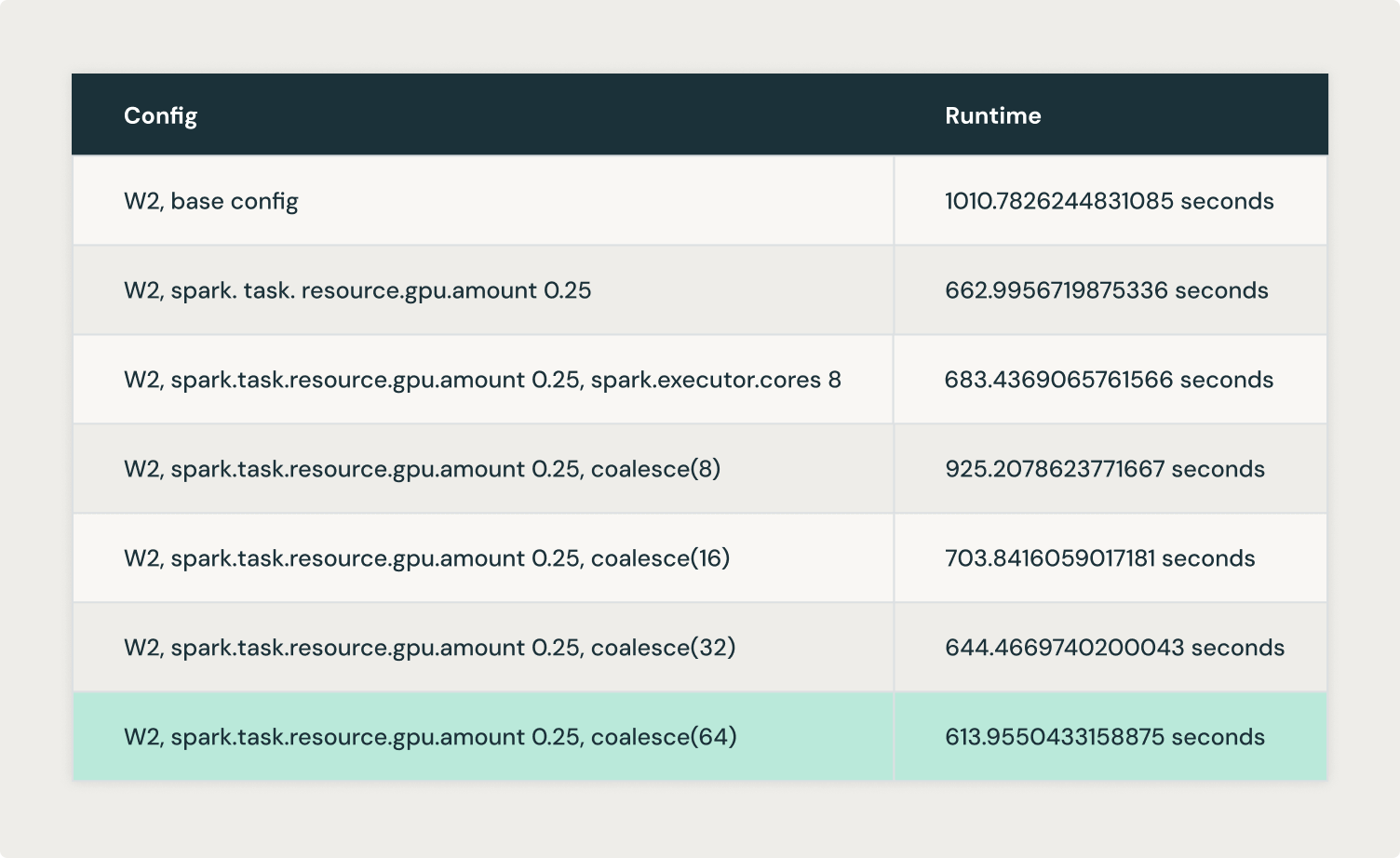

Logically experimented with coalesce(8), coalesce(16), coalesce(32) and coalesce(64) and achieved optimal results with coalesce(64).
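As an illustration of choosing a partition count that is a multiple of the GPU count, here is a minimal sketch. The helper resize_partitions and its parameters are hypothetical; it relies only on the coalesce() and repartition() methods of a Spark DataFrame, and the 4 GPUs with 16 partitions each are illustrative values that happen to give 64, not Logically’s actual cluster shape.

```python
def target_partitions(num_gpus, partitions_per_gpu):
    """Partition count that is a whole multiple of the number of GPUs."""
    if num_gpus <= 0 or partitions_per_gpu <= 0:
        raise ValueError("both arguments must be positive")
    return num_gpus * partitions_per_gpu


def resize_partitions(df, num_gpus, partitions_per_gpu, skewed=False):
    """Return df with a GPU-friendly partition count.

    coalesce() merges partitions without a full shuffle but may leave them
    unbalanced; repartition() shuffles fully and balances skewed data.
    """
    target = target_partitions(num_gpus, partitions_per_gpu)
    if skewed:
        return df.repartition(target)
    return df.coalesce(target)


# Usage with a real DataFrame (hypothetical values):
# df = resize_partitions(df, num_gpus=4, partitions_per_gpu=16)  # coalesce(64)
```

Note that coalesce() can only reduce the number of partitions; if the DataFrame already has fewer partitions than the target, the call leaves the count unchanged.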

From the above experiments, we learned that there is a balance to strike in how big or small the partitions should be to achieve better GPU utilization. So, we tested the maxPartitionBytes configuration, aiming to create bigger partitions from the start instead of having to create them later with coalesce() or repartition().

maxPartitionBytes is a parameter that determines the maximum size of each partition in memory when data is read from a file. By default, this parameter is typically set to 128MB, but in our case, we set it to 512MB, aiming for bigger partitions. Keeping a cap in place still prevents Spark from creating excessively large partitions that could overwhelm the memory of an executor or GPU. The idea is to have manageable partition sizes that fit into available memory without causing performance degradation due to excessive disk spilling or memory errors.
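To get a feel for what this change does to the partition count, here is a rough sketch. It assumes the full configuration key is spark.sql.files.maxPartitionBytes and uses a simplified estimate (input size divided by the cap) that ignores file boundaries, the file open cost, and cluster parallelism, all of which can influence Spark’s actual split size. The 64 GiB input size and the function name are hypothetical.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def estimated_read_partitions(total_bytes, max_partition_bytes):
    """Rough upper-level estimate: ceil(total_bytes / max_partition_bytes)."""
    if total_bytes < 0 or max_partition_bytes <= 0:
        raise ValueError("sizes must be non-negative and the cap positive")
    return -(-total_bytes // max_partition_bytes)  # integer ceiling division


# Assumed full key name for the setting discussed above, value in bytes.
read_conf = {"spark.sql.files.maxPartitionBytes": str(512 * MIB)}

if __name__ == "__main__":
    data_size = 64 * GIB  # hypothetical input size
    for cap in (128 * MIB, 512 * MIB):
        print(cap // MIB, "MiB cap ->",
              estimated_read_partitions(data_size, cap), "partitions")
```

Under this simplification, raising the cap from 128MB to 512MB cuts the number of read partitions by a factor of four, so each task hands four times as much data to the GPU.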

These experiments have opened the door to further optimizations across the Logically platform. This includes leveraging Ray to create distributed applications while benefiting from the breadth of the Databricks ecosystem, enhancing data processing and machine learning workflows. Ray can help maximize the parallelism of GPU resources even further, for example through its built-in GPU autoscaling capabilities and GPU utilization monitoring. This represents an opportunity to increase the value gained from GPU acceleration, which is key to Logically’s continued mission of protecting institutions from the spread of harmful narratives.

For more information: