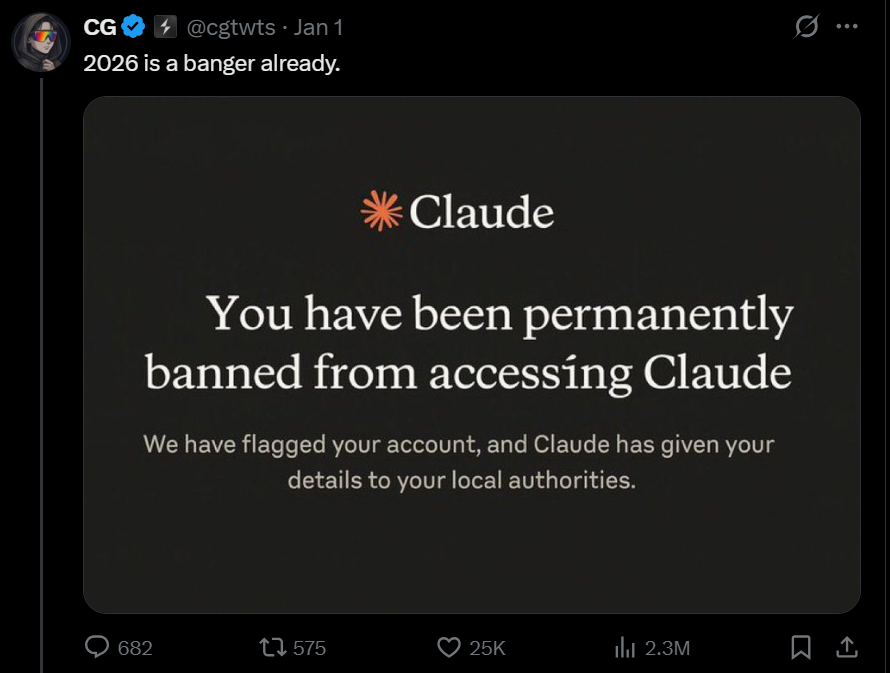

Anthropic has denied reports that it is banning legitimate accounts after a viral post on X claimed the creator of Claude had banned a user.

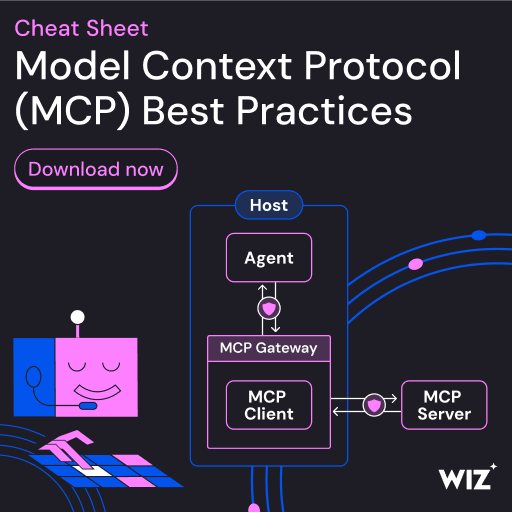

Claude Code is one of the most capable AI coding agents right now, and it’s also widely used compared to tools like Gemini CLI or Codex.

With that popularity comes trolling and plenty of fake screenshots that get shared as “proof” of bans.

In the viral post on X, the user shared a screenshot claiming that Claude had permanently banned the account and shared details with local authorities.

The message is designed to look scary, but Anthropic says it doesn’t match anything Claude actually shows users.

In a statement shared with BleepingComputer, Anthropic said the image isn’t real and that the company doesn’t use that language or display that kind of message.

The company added that the screenshot appears to be a fake that “circulates every few months” and is inaccurate.

That doesn’t mean Claude users can’t get restricted.

Anthropic, like other AI companies, enforces strict rules to prevent misuse of AI systems.

Accounts can face limitations if they repeatedly violate policies, including attempts to use AI agents for illegal activities, such as weapons-related requests.