Image provided by the authors – generated using Gemini.

For many people, artificial intelligence (AI) has become part of everyday life, and the rate at which we assign previously human roles to AI systems shows no signs of slowing down. AI systems are crucial components of many technologies (e.g., self-driving cars, smart urban planning, digital assistants) across a growing number of domains. At the core of many of these technologies are autonomous agents: systems designed to act on behalf of humans and make decisions without direct supervision. In order to act effectively in the real world, these agents must be capable of carrying out a wide range of tasks despite possibly unpredictable environmental conditions, which often requires some form of machine learning (ML) for achieving adaptive behaviour.

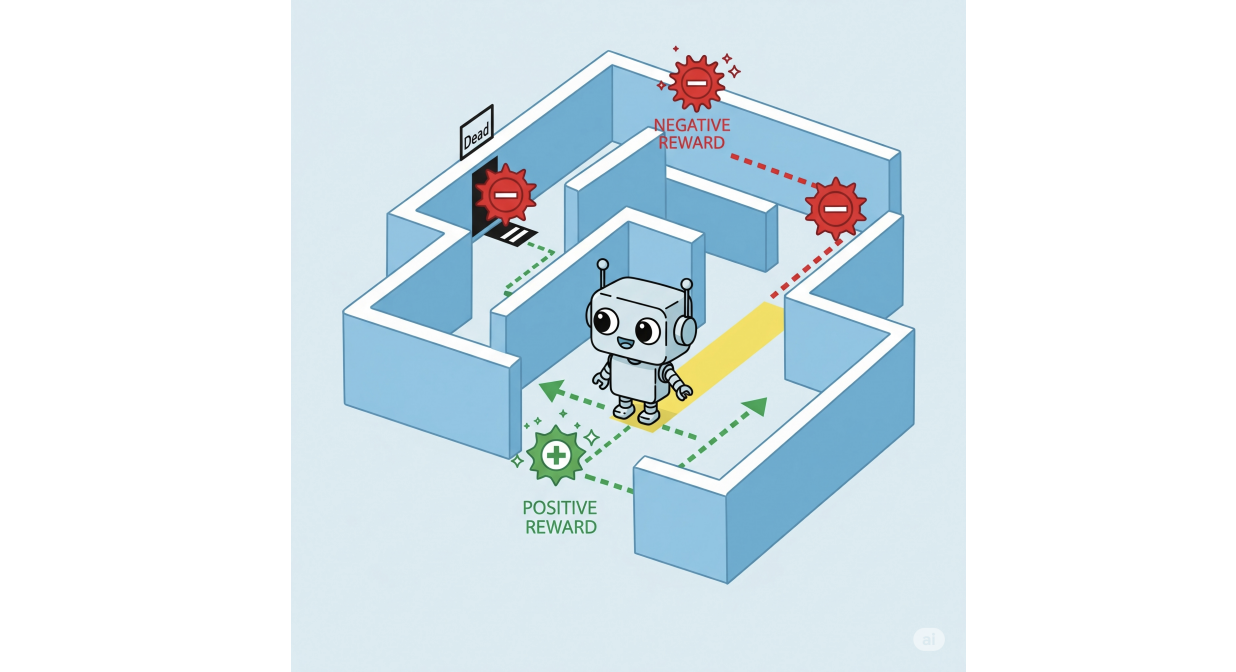

Reinforcement learning (RL) [6] stands out as a powerful ML technique for training agents to achieve optimal behaviour in stochastic environments. RL agents learn by interacting with their environment: for every action they take, they receive context-specific rewards or penalties. Over time, they learn behaviour that maximizes the expected rewards throughout their runtime.
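To make the learning loop concrete, here is a minimal sketch of a single update step in tabular Q-learning, one standard RL algorithm. The article does not say which algorithm the authors use, so the choice of Q-learning, the function name `q_update`, the state and action labels, and the values of `alpha` and `gamma` are all assumptions made for illustration.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update and return the new estimate.

    q maps (state, action) pairs to the current estimate of expected return.
    alpha is the learning rate, gamma the discount factor (both assumed values).
    """
    # Value of the best action available in the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Move the current estimate part of the way toward the observed target.
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]

q = defaultdict(float)          # all estimates start at 0.0
actions = ["left", "right"]     # hypothetical action set

# The agent takes "right" in state "s0", receives reward 1.0, and lands in "s1".
first = q_update(q, "s0", "right", 1.0, "s1", actions)
second = q_update(q, "s0", "right", 1.0, "s1", actions)
print(first, second)
```

Repeating the same rewarded transition pulls the estimate for that action upward, which is how reward signals gradually shape the behaviour the agent prefers.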

Image provided by the authors – generated using Gemini.

RL agents can master a wide variety of complex tasks, from winning video games to controlling cyber-physical systems such as self-driving cars, often surpassing what expert humans are capable of. This optimal, efficient behaviour, however, if left entirely unconstrained, may turn out to be off-putting or even dangerous to the humans it affects. This motivates the substantial research effort in safe RL, where specialized techniques are developed to ensure that RL agents meet specific safety requirements. These requirements are often expressed in formal languages like linear temporal logic (LTL), which extends classical (true/false) logic with temporal operators, allowing us to specify conditions like “something that must always hold”, or “something that must eventually occur”. By combining the adaptability of ML with the precision of logic, researchers have developed powerful methods for training agents to act both effectively and safely.
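The two temporal conditions quoted above can be illustrated with a short sketch. LTL proper is interpreted over infinite traces; the code below checks "always" and "eventually" over a finite recorded trace only, as a simplification. The proposition names `collision` and `at_goal` and the helper names are hypothetical.

```python
from typing import Callable, Dict, List

# Assumption: each step of a trace maps proposition names to truth values.
State = Dict[str, bool]

def always(trace: List[State], prop: Callable[[State], bool]) -> bool:
    """True if prop holds at every step of the finite trace."""
    return all(prop(step) for step in trace)

def eventually(trace: List[State], prop: Callable[[State], bool]) -> bool:
    """True if prop holds at some step of the finite trace."""
    return any(prop(step) for step in trace)

trace = [
    {"collision": False, "at_goal": False},
    {"collision": False, "at_goal": False},
    {"collision": False, "at_goal": True},
]

# "It must always hold that there is no collision."
no_collision = always(trace, lambda s: not s["collision"])
# "The goal must eventually be reached."
reaches_goal = eventually(trace, lambda s: s["at_goal"])
print(no_collision, reaches_goal)
```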

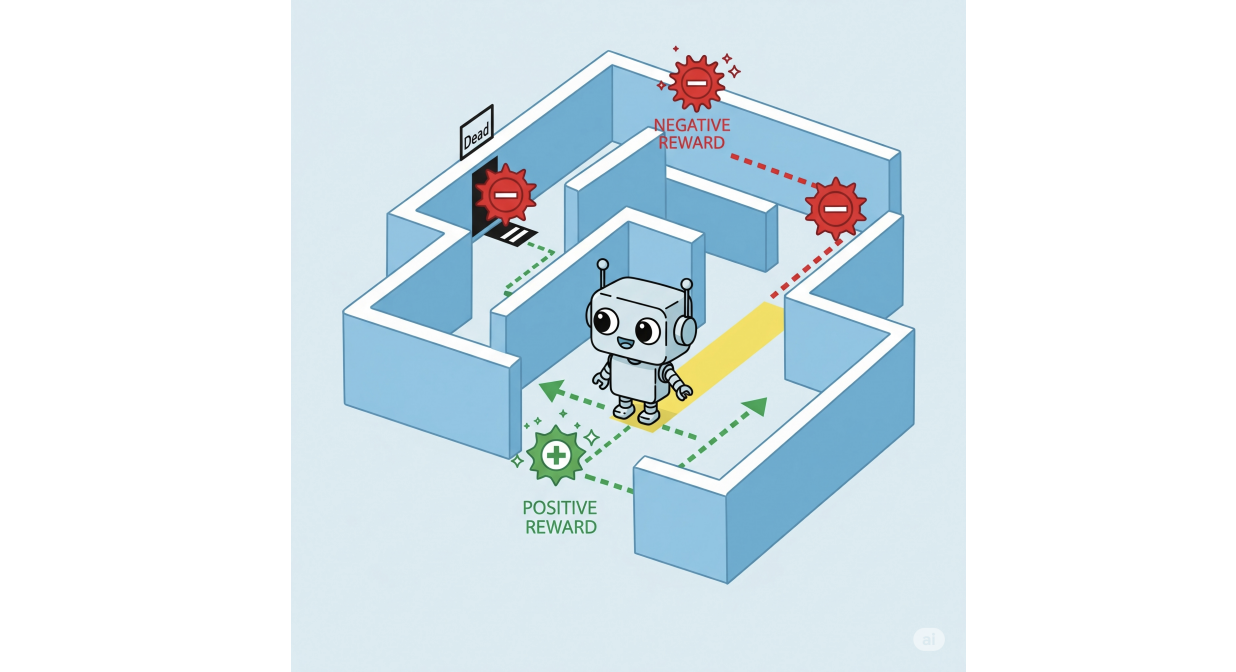

However, safety isn’t everything. Indeed, as RL-based agents are increasingly given roles that either replace or closely interact with humans, a new challenge arises: ensuring their behaviour is also compliant with the social, legal and ethical norms that structure human society, which often go beyond simple constraints guaranteeing safety. For example, a self-driving car might perfectly follow safety constraints (e.g. avoiding collisions), yet still adopt behaviours that, while technically safe, violate social norms, appearing bizarre or rude on the road, which might cause other (human) drivers to react in unsafe ways.

Norms are typically expressed as obligations (“you must do it”), permissions (“you are permitted to do it”) and prohibitions (“you are forbidden from doing it”), which are not statements that can be true or false, like classical logic formulas. Instead, they are deontic concepts: they describe what is right, wrong, or permissible (ideal or acceptable behaviour) rather than what is actually the case. This nuance introduces several difficult dynamics to reasoning about norms, which many logics (such as LTL) struggle to handle. Even everyday normative systems like driving regulations can feature such complications; while some norms can be very simple (e.g., never exceed 50 kph within city limits), others can be more complex, as in:

1. Always maintain 10 meters between your vehicle and the vehicles in front of and behind you.

2. If there are fewer than 10 meters between you and the vehicle behind you, you should slow down to put more space between yourself and the vehicle in front of you.

Norm (2) is an example of a contrary-to-duty obligation (CTD): an obligation you must follow specifically in a situation where another, primary obligation (1) has already been violated, e.g., to compensate or reduce damage. Although studied extensively in the fields of normative reasoning and deontic logic, such norms can be problematic for many basic safe RL methods based on enforcing LTL constraints, as was discussed in [4].
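The relationship between the primary obligation and its contrary-to-duty obligation can be sketched as a pair of checks on a single driving state. This is only an illustration of the two norms as worded above: the `DrivingState` fields, the function names, and the choice to evaluate the norms on one state at a time are assumptions.

```python
from dataclasses import dataclass

MIN_GAP_M = 10.0  # distance required by norm (1)

@dataclass
class DrivingState:
    gap_front_m: float    # distance to the vehicle in front
    gap_behind_m: float   # distance to the vehicle behind
    slowing_down: bool    # whether the agent is currently slowing down

def violates_primary(s: DrivingState) -> bool:
    """Norm (1): keep at least 10 m to the vehicles in front and behind."""
    return s.gap_front_m < MIN_GAP_M or s.gap_behind_m < MIN_GAP_M

def ctd_in_force(s: DrivingState) -> bool:
    """Norm (2) only applies once the gap behind is already too small."""
    return s.gap_behind_m < MIN_GAP_M

def violates_ctd(s: DrivingState) -> bool:
    """Norm (2): when in force, the agent should be slowing down."""
    return ctd_in_force(s) and not s.slowing_down

compliant = DrivingState(gap_front_m=15.0, gap_behind_m=12.0, slowing_down=False)
ignoring = DrivingState(gap_front_m=15.0, gap_behind_m=6.0, slowing_down=False)
recovering = DrivingState(gap_front_m=15.0, gap_behind_m=6.0, slowing_down=True)

for name, state in [("compliant", compliant), ("ignoring", ignoring), ("recovering", recovering)]:
    print(name, violates_primary(state), violates_ctd(state))
```

The `recovering` state shows what makes CTDs distinctive: the primary obligation is violated, yet the agent is still doing the right thing given that violation.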

However, there are approaches to safe RL that show more potential. One notable example is the Restraining Bolt technique, introduced by De Giacomo et al. [2]. Named after a device used in the Star Wars universe to curb the behaviour of droids, this method influences an agent’s actions to align with specified rules while still allowing it to pursue its goals. That is, the restraining bolt modifies the behaviour an RL agent learns so that it also respects a set of specifications. These specifications, expressed in a variant of LTL (LTLf [3]), are each paired with their own reward. The central idea is simple but powerful: along with the rewards the agent receives while exploring the environment, we add an additional reward whenever its actions satisfy the corresponding specification, nudging it to behave in ways that align with individual safety requirements. The assignment of specific rewards to individual specifications allows us to model more complicated dynamics such as CTD obligations, by assigning one reward for obeying the primary obligation, and a different reward for obeying the CTD obligation.
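The reward composition described here can be sketched in a few lines. In the actual technique the specifications are LTLf formulas evaluated over the agent's trace; the sketch below substitutes a simple per-state check for each specification, so it shows only how the extra rewards are added, not how the formulas are monitored. All names and reward values are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, bool]
# Assumption: a specification is a satisfaction check paired with its own reward.
Spec = Tuple[Callable[[State], bool], float]

def bolted_reward(env_reward: float, state: State, specs: List[Spec]) -> float:
    """Environment reward plus the reward of every specification satisfied now."""
    bonus = sum(reward for is_satisfied, reward in specs if is_satisfied(state))
    return env_reward + bonus

specs: List[Spec] = [
    # Primary obligation: keep the required distance.
    (lambda s: s["keeps_distance"], 2.0),
    # CTD obligation: if the distance is not kept, slow down.
    (lambda s: (not s["keeps_distance"]) and s["slowing_down"], 1.0),
]

obeying = {"keeps_distance": True, "slowing_down": False}
recovering = {"keeps_distance": False, "slowing_down": True}
ignoring = {"keeps_distance": False, "slowing_down": False}

for name, state in [("obeying", obeying), ("recovering", recovering), ("ignoring", ignoring)]:
    print(name, bolted_reward(0.5, state, specs))
```

Giving the primary obligation a larger reward than the CTD obligation reflects that full compliance is preferred, while recovering from a violation is still better than ignoring it.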

Nevertheless, issues with modeling norms persist; for example, many (if not most) norms are conditional. Consider the obligation stating “if pedestrians are present at a pedestrian crossing, THEN the nearby vehicles must stop”. If an agent were rewarded every time this rule was satisfied, it would also receive rewards in situations where the norm is not actually in force. This is because, in logic, an implication also holds when the antecedent (“pedestrians are present”) is false. As a result, the agent is rewarded whenever pedestrians are not around, and might learn to prolong its runtime in order to accumulate these rewards for effectively doing nothing, instead of efficiently pursuing its intended task (e.g., reaching a destination). In [5] we showed that there are scenarios where an agent will either ignore the norms, or learn this “procrastination” behaviour, no matter which rewards we choose. As a result, we introduced Normative Restraining Bolts (NRBs), a step forward toward enforcing norms in RL agents. Unlike the original Restraining Bolt, which encouraged compliance by providing additional rewards, the normative version instead punishes norm violations. This design is inspired by the Andersonian view of deontic logic [1], which treats obligations as rules whose violation necessarily triggers a sanction. Thus, the framework no longer relies on reinforcing acceptable behaviour, but instead enforces norms by guaranteeing that violations carry tangible penalties. While effective for managing intricate normative dynamics like conditional obligations, contrary-to-duties, and exceptions to norms, NRBs rely on trial-and-error reward tuning to implement norm adherence, and therefore can be unwieldy, especially when trying to resolve conflicts between norms. Moreover, they require retraining to accommodate norm updates, and do not lend themselves to guarantees that optimal policies minimize norm violations.
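The difference between rewarding satisfaction and punishing violation can be seen in a small sketch of the pedestrian-crossing norm. The function names and the reward and penalty magnitudes are assumptions chosen for illustration; the steps are simplified to two Boolean flags each.

```python
def implies(antecedent: bool, consequent: bool) -> bool:
    """Material implication: false only when the antecedent holds and the consequent fails."""
    return (not antecedent) or consequent

def reward_for_satisfaction(pedestrians: bool, stopped: bool, reward: float = 1.0) -> float:
    """Restraining-Bolt style: pay out whenever 'pedestrians -> stopped' holds."""
    return reward if implies(pedestrians, stopped) else 0.0

def penalty_for_violation(pedestrians: bool, stopped: bool, penalty: float = 1.0) -> float:
    """NRB style: punish only when the norm is in force and is violated."""
    return -penalty if (pedestrians and not stopped) else 0.0

# Five steps with no pedestrians in sight; the norm is never in force.
idle_steps = [(False, False)] * 5
idle_reward = sum(reward_for_satisfaction(p, s) for p, s in idle_steps)
idle_penalty = sum(penalty_for_violation(p, s) for p, s in idle_steps)

# One step where pedestrians are present and the agent does not stop.
violation = penalty_for_violation(True, False)

print(idle_reward, idle_penalty, violation)
```

Under the reward scheme the agent collects a payout on every idle step, which is exactly the incentive to procrastinate; under the penalty scheme idle steps are neutral and only an actual violation costs anything.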

Our contribution

Building on NRBs, we introduce Ordered Normative Restraining Bolts (ONRBs), a framework for guiding reinforcement learning agents to comply with social, legal, and ethical norms while addressing the limitations of NRBs. In this approach, each norm is treated as an objective in a multi-objective reinforcement learning (MORL) problem. Reformulating the problem in this way allows us to:

- Prove that when norms do not conflict, an agent that learns optimal behaviour will minimize norm violations over time.

- Express relationships between norms in terms of a ranking system describing which norm should be prioritized when a conflict occurs.

- Use MORL techniques to algorithmically determine the necessary magnitude of the punishments we assign, such that it is guaranteed that, so long as an agent learns optimal behaviour, norms will be violated as little as possible, prioritizing the norms with the highest rank.

- Accommodate changes in our normative systems by “deactivating” or “reactivating” specific norms.
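The idea of ranked penalties can be illustrated with a simple weighting scheme. This is not the algorithm from the paper, which derives punishment magnitudes using MORL techniques; it is a sketch under the assumption that each norm can be violated at most `max_violations` times per episode, in which case geometrically growing penalties let a single violation of a higher-ranked norm outweigh all possible violations of the lower-ranked ones. The norm names and helper functions are hypothetical.

```python
from typing import Dict, List, Set

def rank_weights(ranked_norms: List[str], max_violations: int) -> Dict[str, float]:
    """Assign a penalty weight to each norm, given highest priority first.

    Assumption: no norm is violated more than max_violations times per episode.
    """
    weights: Dict[str, float] = {}
    weight = 1.0
    for norm in reversed(ranked_norms):  # start from the lowest-ranked norm
        weights[norm] = weight
        weight *= max_violations + 1
    return weights

def total_penalty(violations: Dict[str, int], weights: Dict[str, float], active: Set[str]) -> float:
    """Sum the penalties of active norms; deactivated norms contribute nothing."""
    return -sum(weights[n] * count for n, count in violations.items() if n in active)

# The second norm from the article's example is given priority over the first.
ranked = ["reach_market_by_deadline", "avoid_danger"]
weights = rank_weights(ranked, max_violations=3)
active = set(ranked)

crosses_danger_once = {"avoid_danger": 1, "reach_market_by_deadline": 0}
misses_deadline = {"avoid_danger": 0, "reach_market_by_deadline": 1}

print(total_penalty(crosses_danger_once, weights, active))
print(total_penalty(misses_deadline, weights, active))

# "Deactivating" a norm removes its penalty without changing the other weights.
print(total_penalty(crosses_danger_once, weights, active - {"avoid_danger"}))
```

With these weights, crossing a dangerous area once is penalized less than missing the deadline, so an agent maximizing total reward would sacrifice the lower-ranked norm when the two conflict.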

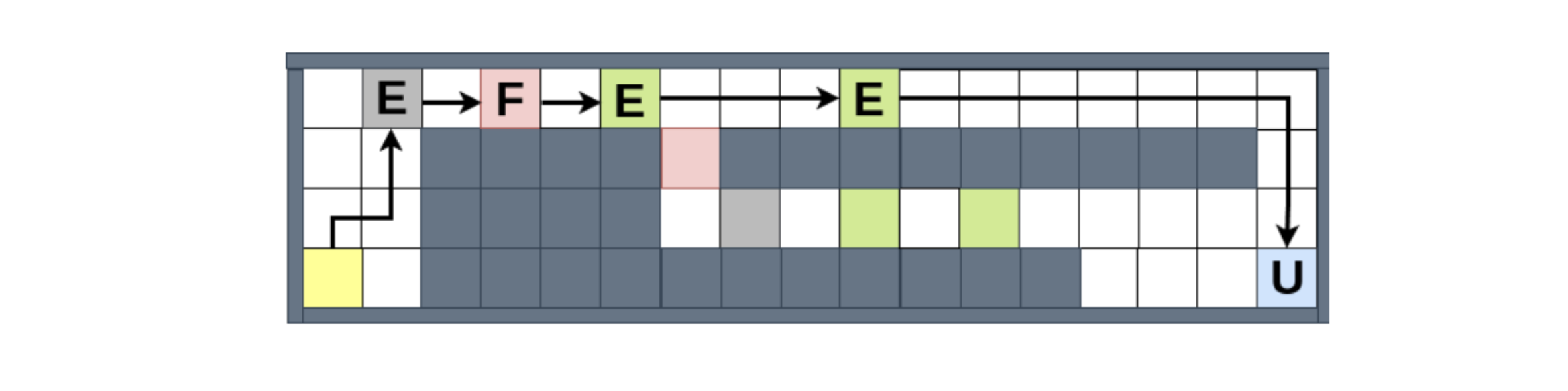

We tested our framework in a grid-world environment inspired by strategy games, where an agent learns to collect resources and deliver them to designated areas. This setup allows us to demonstrate the framework’s ability to handle the complex normative scenarios we noted above, including direct prioritization of conflicting norms and norm updates. For instance, the accompanying figure shows how the agent handles norm conflicts when it is obligated both to (1) avoid the dangerous (pink) areas, and (2) reach the market (blue) area by a certain deadline, supposing that the second norm takes priority. We can see that it chooses to violate (1) once, because otherwise it would be stuck at the beginning of the map, unable to fulfil (2). Nevertheless, when given the opportunity to violate (1) once more, it chooses the compliant path, even though the violating path would allow it to collect more resources, and therefore more rewards from the environment.

In summary, by combining RL with logic, we can build AI agents that don’t just work, they work right.

This work won a distinguished paper award at IJCAI 2025. Read the paper in full: Combining MORL with restraining bolts to learn normative behaviour, Emery A. Neufeld, Agata Ciabattoni and Radu Florin Tulcan.

Acknowledgements

This research was funded by the Vienna Science and Technology Fund (WWTF) project ICT22-023 and the Austrian Science Fund (FWF) 10.55776/COE12 Cluster of Excellence Bilateral AI.

References

[1] Alan Ross Anderson. A reduction of deontic logic to alethic modal logic. Mind, 67(265):100–103, 1958.

[2] Giuseppe De Giacomo, Luca Iocchi, Marco Favorito, and Fabio Patrizi. Foundations for restraining bolts: Reinforcement learning with LTLf/LDLf restraining specifications. In Proceedings of the International Conference on Automated Planning and Scheduling, volume 29, pages 128–136, 2019.

[3] Giuseppe De Giacomo and Moshe Y. Vardi. Linear temporal logic and linear dynamic logic on finite traces. In IJCAI, volume 13, pages 854–860, 2013.

[4] Emery Neufeld, Ezio Bartocci, and Agata Ciabattoni. On normative reinforcement learning via safe reinforcement learning. In PRIMA 2022, 2022.

[5] Emery A. Neufeld, Agata Ciabattoni, and Radu Florin Tulcan. Norm compliance in reinforcement learning agents via restraining bolts. In Legal Knowledge and Information Systems JURIX 2024, pages 119–130. IOS Press, 2024.

[6] Richard S. Sutton and Andrew G. Barto. Reinforcement learning – an introduction. Adaptive computation and machine learning. MIT Press, 1998.

Agata Ciabattoni is a Professor at TU Wien.

Emery Neufeld is a postdoctoral researcher at TU Wien.