The phrase “practice makes perfect” is usually reserved for humans, but it’s also a great maxim for robots newly deployed in unfamiliar environments.

Picture a robot arriving in a warehouse. It comes packaged with the skills it was trained on, like placing an object, and now it needs to pick items from a shelf it’s not familiar with. At first, the machine struggles with this, since it needs to get acquainted with its new surroundings. To improve, the robot will need to understand which skills within an overall task it needs improvement on, then specialize (or parameterize) that action.

A human onsite could program the robot to optimize its performance, but researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and The AI Institute have developed a more effective alternative. Presented at the Robotics: Science and Systems Conference last month, their “Estimate, Extrapolate, and Situate” (EES) algorithm enables these machines to practice on their own, potentially helping them improve at useful tasks in factories, households, and hospitals.

Sizing up the situation

To help robots get better at activities like sweeping floors, EES works with a vision system that locates and tracks the machine’s surroundings. Then, the algorithm estimates how reliably the robot executes an action (like sweeping) and whether it would be worthwhile to practice more. EES forecasts how well the robot could perform the overall task if it refines that particular skill, and finally, it practices. The vision system subsequently checks whether that skill was done correctly after each attempt.
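The estimate, forecast, and practice steps described above can be illustrated with a small toy model. This is only a sketch under stated assumptions, not the researchers’ implementation: the names `SkillRecord`, `task_success`, `choose_skill_to_practice`, and `record_outcome` are hypothetical, the success rate uses simple Laplace smoothing, the learning curve (each extra trial closes a fixed fraction of the gap to perfect) is assumed, and the overall task is assumed to succeed only when every skill succeeds independently.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class SkillRecord:
    """Practice history for one skill (hypothetical structure)."""
    successes: int = 0
    attempts: int = 0

    def estimate(self) -> float:
        # Estimate: smoothed success rate (Laplace smoothing, an assumption).
        return (self.successes + 1) / (self.attempts + 2)

    def extrapolate(self, extra_trials: int = 5, gain: float = 0.1) -> float:
        # Extrapolate: guess competence after more practice. Assumed model:
        # each extra trial closes a fraction `gain` of the gap to 1.0.
        current = self.estimate()
        return 1.0 - (1.0 - current) * (1.0 - gain) ** extra_trials


def task_success(competences: dict[str, float]) -> float:
    # Assumption: the task needs every skill to succeed, independently.
    return prod(competences.values())


def choose_skill_to_practice(records: dict[str, SkillRecord]) -> str:
    """Pick the skill whose projected improvement helps the task most."""
    current = {name: rec.estimate() for name, rec in records.items()}

    def projected_task_success(name: str) -> float:
        # Swap in the forecast competence for one skill, keep the rest.
        projected = {**current, name: records[name].extrapolate()}
        return task_success(projected)

    return max(records, key=projected_task_success)


def record_outcome(records: dict[str, SkillRecord], name: str,
                   succeeded: bool) -> None:
    # Stand-in for the vision system's check after each practice attempt.
    records[name].attempts += 1
    records[name].successes += int(succeeded)


if __name__ == "__main__":
    records = {"pick": SkillRecord(9, 10), "sweep": SkillRecord(2, 10)}
    print(choose_skill_to_practice(records))  # prints "sweep"
```

In this toy setup the robot is already reliable at picking and weak at sweeping, so extra sweeping practice is projected to raise overall task success the most. A real system would replace the assumed learning curve and the independence assumption with models fitted to the robot’s own experience.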

EES could come in handy in places like a hospital, factory, house, or coffee shop. For example, if you wanted a robot to clean up your living room, it would need help practicing skills like sweeping. According to Nishanth Kumar SM ’24 and his colleagues, though, EES could help that robot improve without human intervention, using only a few practice trials.

“Going into this project, we wondered if this specialization would be possible in a reasonable amount of samples on a real robot,” says Kumar, co-lead author of a paper describing the work, PhD student in electrical engineering and computer science, and a CSAIL affiliate. “Now, we have an algorithm that enables robots to get meaningfully better at specific skills in a reasonable amount of time with tens or hundreds of data points, an upgrade from the thousands or millions of samples that a standard reinforcement learning algorithm requires.”

See Spot sweep

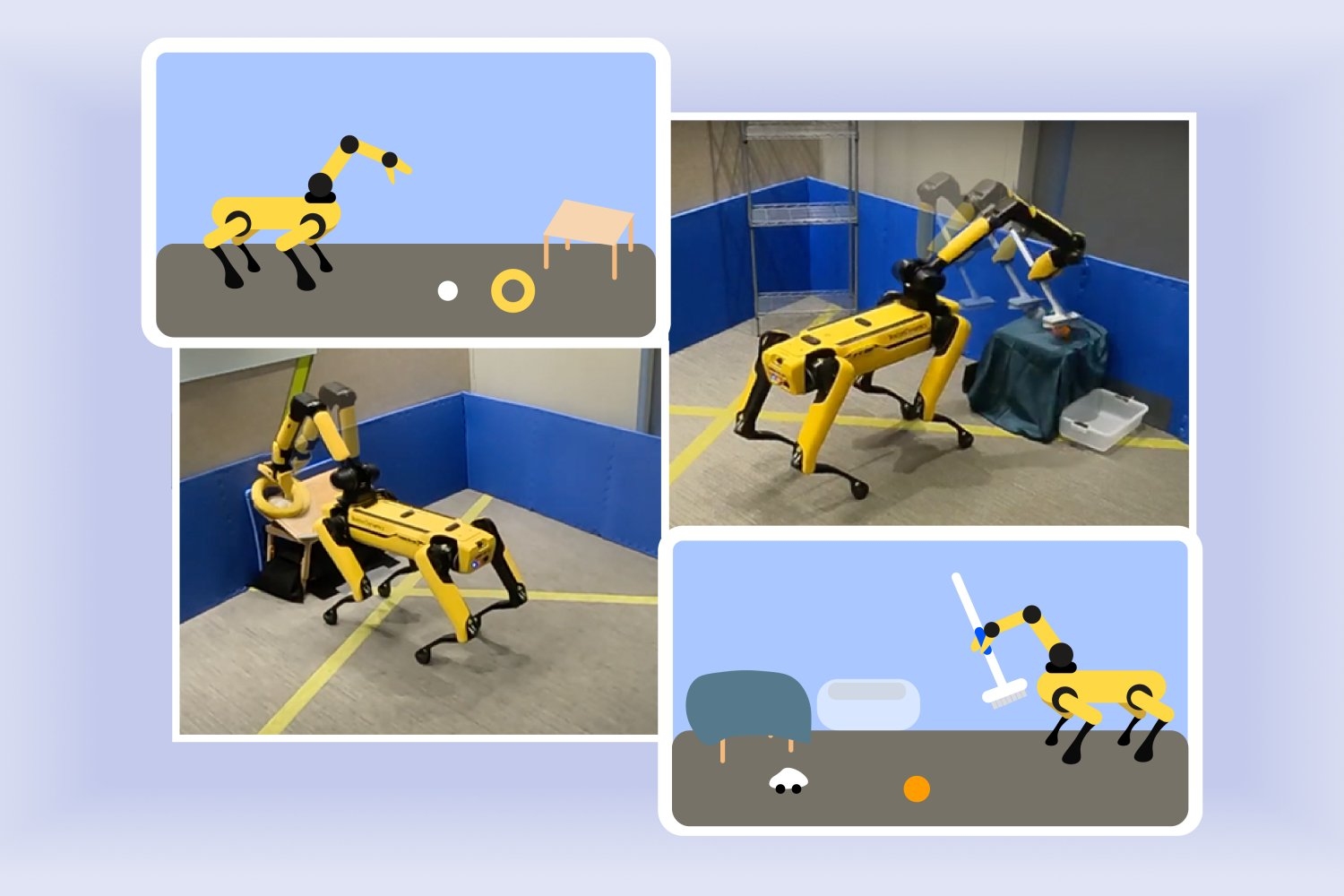

EES’s knack for efficient learning was evident when implemented on Boston Dynamics’ Spot quadruped during research trials at The AI Institute. The robot, which has an arm attached to its back, completed manipulation tasks after practicing for a few hours. In one demonstration, the robot learned how to securely place a ball and ring on a slanted table in roughly three hours. In another, the algorithm guided the machine to improve at sweeping toys into a bin within about two hours. Both results appear to be an upgrade from previous frameworks, which would have likely taken more than 10 hours per task.

“We aimed to have the robot collect its own experience so it can better choose which strategies will work well in its deployment,” says co-lead author Tom Silver SM ’20, PhD ’24, an electrical engineering and computer science (EECS) alumnus and CSAIL affiliate who is now an assistant professor at Princeton University. “By focusing on what the robot knows, we sought to answer a key question: In the library of skills that the robot has, which is the one that would be most useful to practice right now?”

EES could eventually help streamline autonomous practice for robots in new deployment environments, but for now, it comes with a few limitations. For starters, the researchers used tables that were low to the ground, which made it easier for the robot to see its objects. Kumar and Silver also 3D printed an attachable handle that made the brush easier for Spot to grab. The robot didn’t detect some items and identified objects in the wrong places, so the researchers counted those errors as failures.

Giving robots homework

The researchers note that the practice speeds from the physical experiments could be accelerated further with the help of a simulator. Instead of physically working at each skill autonomously, the robot could eventually combine real and virtual practice. They hope to make their system faster with less latency, engineering EES to overcome the imaging delays the researchers experienced. In the future, they may investigate an algorithm that reasons over sequences of practice attempts instead of planning which skills to refine.

“Enabling robots to learn on their own is both incredibly useful and extremely challenging,” says Danfei Xu, an assistant professor in the School of Interactive Computing at Georgia Tech and a research scientist at NVIDIA AI, who was not involved with this work. “In the future, home robots will be sold to all sorts of households and expected to perform a wide range of tasks. We can’t possibly program everything they need to know beforehand, so it’s essential that they can learn on the job. However, letting robots loose to explore and learn without guidance can be very slow and might lead to unintended consequences. The research by Silver and his colleagues introduces an algorithm that allows robots to practice their skills autonomously in a structured way. This is a big step towards creating home robots that can continuously evolve and improve on their own.”

Silver and Kumar’s co-authors are The AI Institute researchers Stephen Proulx and Jennifer Barry, plus four CSAIL members: Northeastern University PhD student and visiting researcher Linfeng Zhao, MIT EECS PhD student Willie McClinton, and MIT EECS professors Leslie Pack Kaelbling and Tomás Lozano-Pérez. Their work was supported, in part, by The AI Institute, the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, the U.S. Office of Naval Research, the U.S. Army Research Office, and MIT Quest for Intelligence, with high-performance computing resources from the MIT SuperCloud and Lincoln Laboratory Supercomputing Center.